Monitoring a Kubernetes cluster with Prometheus

- Versions used

Prometheus:v2.2.1

kubernetes:v1.18.9

Grafana:latest

alertmanager:v0.14.0

kube-state-metrics:v1.3.0

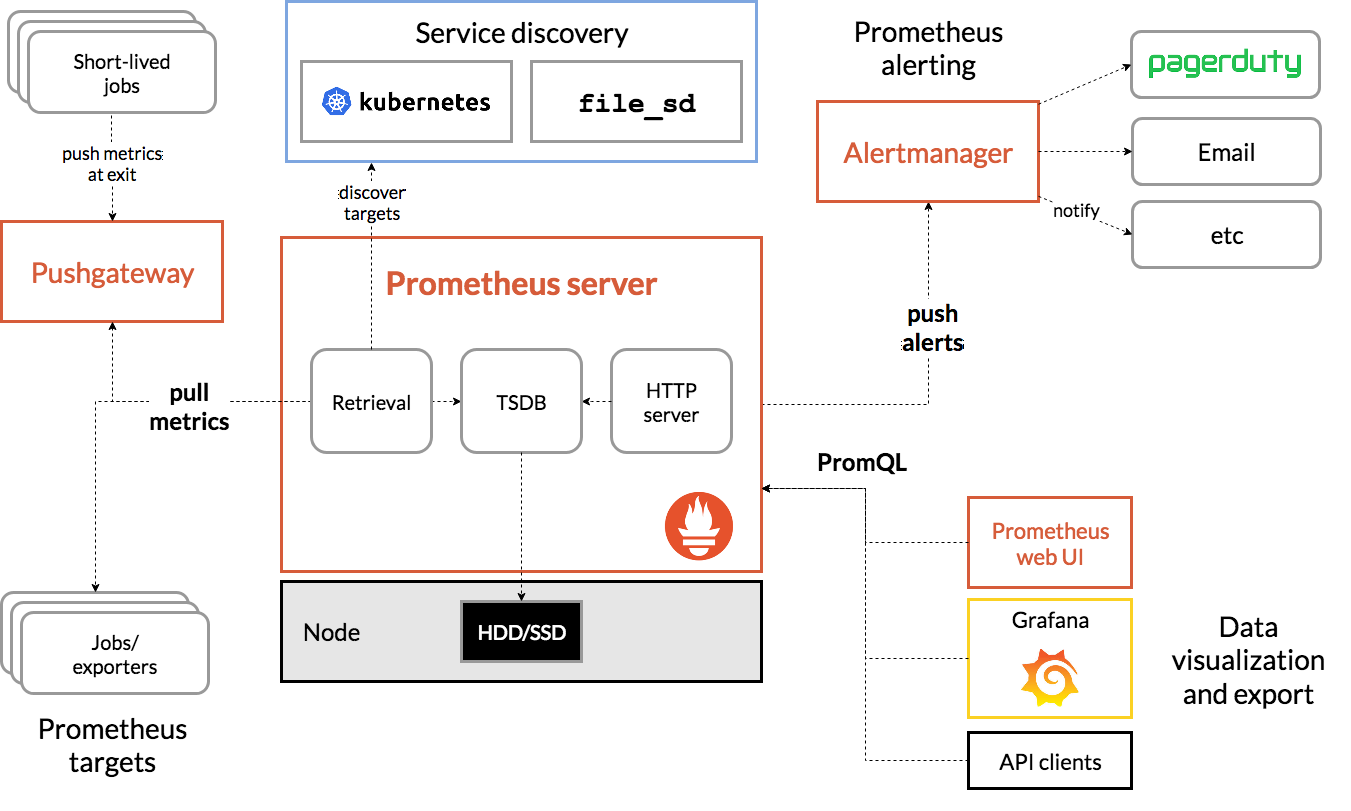

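Prometheus was the second project hosted by the CNCF, after Kubernetes. Originally built at SoundCloud, it is an open-source systems monitoring and alerting toolkit, and is now a standalone open-source project maintained independently of any company.

For a detailed introduction, see the official documentation: https://prometheus.io/docs/introduction/overview/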

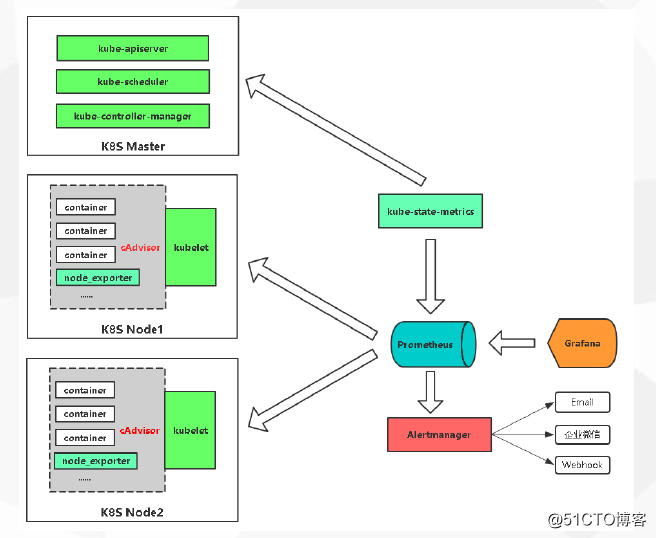

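Approach:

node_exporter on each node exposes host metrics over HTTP. Prometheus is deployed inside the cluster, discovers its targets through service discovery, and scrapes (pulls) the metrics from them rather than having them pushed. Prometheus then evaluates the collected data, Grafana visualizes it, and Alertmanager manages alerting.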
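To make the pull model concrete, here is a minimal sketch of what a scrape returns: each exporter serves plain text on `/metrics`, one sample per line, and Prometheus parses that text on every scrape. The sample payload and the helper name `parse_exposition` are illustrative assumptions, not output from this cluster, and the parser assumes lines carry no trailing timestamp.

```python
# Sketch: parse the text exposition format that an exporter serves on /metrics.
# SAMPLE is hand-written for illustration; it is not output from this cluster.

SAMPLE = """\
# HELP node_load1 1m load average.
# TYPE node_load1 gauge
node_load1 0.42
node_filesystem_size_bytes{device="/dev/sda1",fstype="xfs",mountpoint="/"} 5.3660876800e+10
"""


def parse_exposition(text):
    """Return {series: value} for every sample line, skipping comments.

    Assumes no trailing timestamps, so the value is the last
    whitespace-separated field on each line.
    """
    samples = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue  # HELP/TYPE comments and blank lines carry no sample
        series, value = line.rsplit(None, 1)
        samples[series] = float(value)
    return samples


metrics = parse_exposition(SAMPLE)
for series, value in metrics.items():
    print(series, value)
```

Each key keeps its label set, which is how a single metric name such as `node_filesystem_size_bytes` can yield one series per mount point.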

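Architecture: Prometheus monitoring Kubernetes

Prometheus is deployed as a StatefulSet, so NFS storage has to be set up in advance; Prometheus gets its storage from it through dynamically provisioned PVs.

Official documentation: https://prometheus.io/docs/prometheus/latest/configuration/configuration/#kubernetes_sd_config

Official deployment references: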

https://github.com/kubernetes/kubernetes/tree/master/cluster/addons/prometheus

https://grafana.com/grafana/download

Run on every node (with yum the NFS client is packaged as nfs-utils; nfs-common is the Debian/Ubuntu package name):

yum install nfs-utils -y

prometheus-rbac.yaml

[root@master prometheus-k8s]# cat prometheus-rbac.yaml

    apiVersion: v1
    kind: ServiceAccount
    metadata:
      name: prometheus
      namespace: kube-system
      labels:
        kubernetes.io/cluster-service: "true"
        addonmanager.kubernetes.io/mode: Reconcile
    ---
    apiVersion: rbac.authorization.k8s.io/v1beta1
    kind: ClusterRole
    metadata:
      name: prometheus
      labels:
        kubernetes.io/cluster-service: "true"
        addonmanager.kubernetes.io/mode: Reconcile
    rules:
      - apiGroups:
          - ""
        resources:
          - nodes
          - nodes/metrics
          - services
          - endpoints
          - pods
        verbs:
          - get
          - list
          - watch
      - apiGroups:
          - ""
        resources:
          - configmaps
        verbs:
          - get
      - nonResourceURLs:
          - "/metrics"
        verbs:
          - get
    ---
    apiVersion: rbac.authorization.k8s.io/v1beta1
    kind: ClusterRoleBinding
    metadata:
      name: prometheus
      labels:
        kubernetes.io/cluster-service: "true"
        addonmanager.kubernetes.io/mode: Reconcile
    roleRef:
      apiGroup: rbac.authorization.k8s.io
      kind: ClusterRole
      name: prometheus
    subjects:
      - kind: ServiceAccount
        name: prometheus
        namespace: kube-system

[root@master1 prometheus]# kubectl apply -f prometheus-rbac.yaml

prometheus-configmap.yaml

[root@master prometheus-k8s]# cat prometheus-configmap.yaml

    # Prometheus configuration format https://prometheus.io/docs/prometheus/latest/configuration/configuration/
    apiVersion: v1
    kind: ConfigMap
    metadata:
      name: prometheus-config
      namespace: kube-system
      labels:
        kubernetes.io/cluster-service: "true"
        addonmanager.kubernetes.io/mode: EnsureExists
    data:
      prometheus.yml: |
        rule_files:
        - /etc/config/rules/*.rules
        scrape_configs:
        - job_name: prometheus
          static_configs:
          - targets:
            - localhost:9090
        - job_name: kubernetes-nodes
          scrape_interval: 30s
          static_configs:
          - targets:
            - 172.16.75.2:9100
            - 172.16.75.3:9100
        - job_name: kubernetes-apiservers
          kubernetes_sd_configs:
          - role: endpoints
          relabel_configs:
          - action: keep
            regex: default;kubernetes;https
            source_labels:
            - __meta_kubernetes_namespace
            - __meta_kubernetes_service_name
            - __meta_kubernetes_endpoint_port_name
          scheme: https
          tls_config:
            ca_file: /var/run/secrets/kubernetes.io/serviceaccount/ca.crt
            insecure_skip_verify: true
          bearer_token_file: /var/run/secrets/kubernetes.io/serviceaccount/token
        - job_name: kubernetes-nodes-kubelet
          kubernetes_sd_configs:
          - role: node
          relabel_configs:
          - action: labelmap
            regex: __meta_kubernetes_node_label_(.+)
          scheme: https
          tls_config:
            ca_file: /var/run/secrets/kubernetes.io/serviceaccount/ca.crt
            insecure_skip_verify: true
          bearer_token_file: /var/run/secrets/kubernetes.io/serviceaccount/token
        - job_name: kubernetes-nodes-cadvisor
          kubernetes_sd_configs:
          - role: node
          relabel_configs:
          - action: labelmap
            regex: __meta_kubernetes_node_label_(.+)
          - target_label: __metrics_path__
            replacement: /metrics/cadvisor
          scheme: https
          tls_config:
            ca_file: /var/run/secrets/kubernetes.io/serviceaccount/ca.crt
            insecure_skip_verify: true
          bearer_token_file: /var/run/secrets/kubernetes.io/serviceaccount/token
        - job_name: kubernetes-service-endpoints
          kubernetes_sd_configs:
          - role: endpoints
          relabel_configs:
          - action: keep
            regex: true
            source_labels:
            - __meta_kubernetes_service_annotation_prometheus_io_scrape
          - action: replace
            regex: (https?)
            source_labels:
            - __meta_kubernetes_service_annotation_prometheus_io_scheme
            target_label: __scheme__
          - action: replace
            regex: (.+)
            source_labels:
            - __meta_kubernetes_service_annotation_prometheus_io_path
            target_label: __metrics_path__
          - action: replace
            regex: ([^:]+)(?::\d+)?;(\d+)
            replacement: $1:$2
            source_labels:
            - __address__
            - __meta_kubernetes_service_annotation_prometheus_io_port
            target_label: __address__
          - action: labelmap
            regex: __meta_kubernetes_service_label_(.+)
          - action: replace
            source_labels:
            - __meta_kubernetes_namespace
            target_label: kubernetes_namespace
          - action: replace
            source_labels:
            - __meta_kubernetes_service_name
            target_label: kubernetes_name
        - job_name: kubernetes-services
          kubernetes_sd_configs:
          - role: service
          metrics_path: /probe
          params:
            module:
            - http_2xx
          relabel_configs:
          - action: keep
            regex: true
            source_labels:
            - __meta_kubernetes_service_annotation_prometheus_io_probe
          - source_labels:
            - __address__
            target_label: __param_target
          - replacement: blackbox
            target_label: __address__
          - source_labels:
            - __param_target
            target_label: instance
          - action: labelmap
            regex: __meta_kubernetes_service_label_(.+)
          - source_labels:
            - __meta_kubernetes_namespace
            target_label: kubernetes_namespace
          - source_labels:
            - __meta_kubernetes_service_name
            target_label: kubernetes_name
        - job_name: kubernetes-pods
          kubernetes_sd_configs:
          - role: pod
          relabel_configs:
          - action: keep
            regex: true
            source_labels:
            - __meta_kubernetes_pod_annotation_prometheus_io_scrape
          - action: replace
            regex: (.+)
            source_labels:
            - __meta_kubernetes_pod_annotation_prometheus_io_path
            target_label: __metrics_path__
          - action: replace
            regex: ([^:]+)(?::\d+)?;(\d+)
            replacement: $1:$2
            source_labels:
            - __address__
            - __meta_kubernetes_pod_annotation_prometheus_io_port
            target_label: __address__
          - action: labelmap
            regex: __meta_kubernetes_pod_label_(.+)
          - action: replace
            source_labels:
            - __meta_kubernetes_namespace
            target_label: kubernetes_namespace
          - action: replace
            source_labels:
            - __meta_kubernetes_pod_name
            target_label: kubernetes_pod_name
        alerting:
          alertmanagers:
          - static_configs:
            - targets: ["alertmanager:80"]

[root@master1 prometheus]# kubectl apply -f prometheus-configmap.yaml
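The least obvious relabel rule in this configuration is the one that rewrites `__address__` using the `prometheus.io/port` annotation. As a rough sketch of its behaviour: Prometheus joins the `source_labels` values with `;`, matches the fully anchored regex against the result, and writes `$1:$2` to the target label only on a match. The function name `rewrite_address` and the pod IP below are made up for illustration.

```python
import re

# Same pattern as in the ConfigMap. Prometheus anchors relabel regexes at
# both ends, which is why fullmatch is used here.
ADDRESS_RE = re.compile(r"([^:]+)(?::\d+)?;(\d+)")


def rewrite_address(address, port_annotation):
    """Mimic the `replace` rule whose target_label is __address__.

    The two source labels are joined with ';' before matching. When the
    regex does not match, the address is returned unchanged.
    """
    joined = f"{address};{port_annotation}"
    match = ADDRESS_RE.fullmatch(joined)
    if match is None:
        return address
    return f"{match.group(1)}:{match.group(2)}"


# 10.244.1.7 is a hypothetical pod IP.
print(rewrite_address("10.244.1.7:8080", "9102"))  # annotation overrides the discovered port
print(rewrite_address("10.244.1.7", "9102"))       # no discovered port, annotation supplies one
print(rewrite_address("10.244.1.7:8080", ""))      # annotation absent, address kept as-is
```

A missing annotation shows up as an empty label value, so the third call models a Service or Pod that sets `prometheus.io/scrape` but no `prometheus.io/port`.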

prometheus-rules.yaml

[root@master prometheus-k8s]# cat prometheus-rules.yaml

    apiVersion: v1
    kind: ConfigMap
    metadata:
      name: prometheus-rules
      namespace: kube-system
    data:
      general.rules: |
        groups:
        - name: general.rules
          rules:
          - alert: InstanceDown
            expr: up == 0
            for: 1m
            labels:
              severity: error
            annotations:
              summary: "Instance {{ $labels.instance }} is down"
              description: "{{ $labels.instance }} of job {{ $labels.job }} has been down for more than 1 minute."
      node.rules: |
        groups:
        - name: node.rules
          rules:
          - alert: NodeFilesystemUsage
            expr: 100 - (node_filesystem_free_bytes{fstype=~"ext4|xfs"} / node_filesystem_size_bytes{fstype=~"ext4|xfs"} * 100) > 80
            for: 1m
            labels:
              severity: warning
            annotations:
              summary: "Instance {{ $labels.instance }} : {{ $labels.mountpoint }} filesystem usage is too high"
              description: "{{ $labels.instance }}: {{ $labels.mountpoint }} filesystem usage is above 80% (current value: {{ $value }})"
          - alert: NodeMemoryUsage
            expr: 100 - (node_memory_MemFree_bytes+node_memory_Cached_bytes+node_memory_Buffers_bytes) / node_memory_MemTotal_bytes * 100 > 80
            for: 1m
            labels:
              severity: warning
            annotations:
              summary: "Instance {{ $labels.instance }} memory usage is too high"
              description: "{{ $labels.instance }} memory usage is above 80% (current value: {{ $value }})"
          - alert: NodeCPUUsage
            expr: 100 - (avg(irate(node_cpu_seconds_total{mode="idle"}[5m])) by (instance) * 100) > 60
            for: 1m
            labels:
              severity: warning
            annotations:
              summary: "Instance {{ $labels.instance }} CPU usage is too high"
              description: "{{ $labels.instance }} CPU usage is above 60% (current value: {{ $value }})"

[root@master1 prometheus]# kubectl apply -f prometheus-rules.yaml
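To sanity-check a threshold before relying on it, the NodeMemoryUsage expression can be worked through by hand. The sketch below repeats the same arithmetic in Python (division and multiplication bind tighter than subtraction, and the comparison is applied last, as in PromQL); the node sizes are invented for illustration and the function name is hypothetical.

```python
GIB = 2**30


def memory_usage_percent(free, cached, buffers, total):
    """Same arithmetic as the NodeMemoryUsage expr:
    100 - (MemFree + Cached + Buffers) / MemTotal * 100
    """
    return 100 - (free + cached + buffers) / total * 100


# Hypothetical 16 GiB node with 3 GiB reclaimable or free in total.
usage = memory_usage_percent(
    free=1 * GIB, cached=1.5 * GIB, buffers=0.5 * GIB, total=16 * GIB
)
fires = usage > 80  # the rule additionally requires this to hold for 1m

print(usage, fires)
```

With these numbers the reclaimable share is 3/16 of total memory, so usage comes out just above the 80% threshold and the alert would become pending.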

[root@master1 opt]# kubectl get sc -A -o wide

NAME PROVISIONER AGE

managed-nfs-storage fuseim.pri/ifs 6d

prometheus-statefulset.yaml

[root@master prometheus-k8s]# cat prometheus-statefulset.yaml

    apiVersion: apps/v1
    kind: StatefulSet
    metadata:
      name: prometheus
      namespace: kube-system
      labels:
        k8s-app: prometheus
        kubernetes.io/cluster-service: "true"
        addonmanager.kubernetes.io/mode: Reconcile
        version: v2.2.1
    spec:
      serviceName: "prometheus"
      replicas: 1
      podManagementPolicy: "Parallel"
      updateStrategy:
        type: "RollingUpdate"
      selector:
        matchLabels:
          k8s-app: prometheus
      template:
        metadata:
          labels:
            k8s-app: prometheus
          annotations:
            scheduler.alpha.kubernetes.io/critical-pod: ''
        spec:
          priorityClassName: system-cluster-critical
          serviceAccountName: prometheus
          initContainers:
            - name: "init-chown-data"
              image: "busybox:latest"
              imagePullPolicy: "IfNotPresent"
              command: ["chown", "-R", "65534:65534", "/data"]
              volumeMounts:
                - name: prometheus-data
                  mountPath: /data
                  subPath: ""
          containers:
            - name: prometheus-server-configmap-reload
              image: "jimmidyson/configmap-reload:v0.1"
              imagePullPolicy: "IfNotPresent"
              args:
                - --volume-dir=/etc/config
                - --webhook-url=http://localhost:9090/-/reload
              volumeMounts:
                - name: config-volume
                  mountPath: /etc/config
                  readOnly: true
              resources:
                limits:
                  cpu: 10m
                  memory: 10Mi
                requests:
                  cpu: 10m
                  memory: 10Mi
            - name: prometheus-server
              image: "prom/prometheus:v2.2.1"
              imagePullPolicy: "IfNotPresent"
              args:
                - --config.file=/etc/config/prometheus.yml
                - --storage.tsdb.path=/data
                - --web.console.libraries=/etc/prometheus/console_libraries
                - --web.console.templates=/etc/prometheus/consoles
                - --web.enable-lifecycle
              ports:
                - containerPort: 9090
              readinessProbe:
                httpGet:
                  path: /-/ready
                  port: 9090
                initialDelaySeconds: 30
                timeoutSeconds: 30
              livenessProbe:
                httpGet:
                  path: /-/healthy
                  port: 9090
                initialDelaySeconds: 30
                timeoutSeconds: 30
              # based on 10 running nodes with 30 pods each
              resources:
                limits:
                  cpu: 200m
                  memory: 1000Mi
                requests:
                  cpu: 200m
                  memory: 1000Mi
              volumeMounts:
                - name: config-volume
                  mountPath: /etc/config
                - name: prometheus-data
                  mountPath: /data
                  subPath: ""
                - name: prometheus-rules
                  mountPath: /etc/config/rules
          terminationGracePeriodSeconds: 300
          volumes:
            - name: config-volume
              configMap:
                name: prometheus-config
            - name: prometheus-rules
              configMap:
                name: prometheus-rules
      volumeClaimTemplates:
        - metadata:
            name: prometheus-data
          spec:
            storageClassName: managed-nfs-storage
            accessModes:
              - ReadWriteOnce
            resources:
              requests:
                storage: "16Gi"

[root@master1 prometheus]# kubectl apply -f prometheus-statefulset.yaml

[root@master1 prometheus]# kubectl get sts -A

NAMESPACE NAME READY AGE

kube-system prometheus 0/1 14s

[root@master1 prometheus]# kubectl get sts -A -o wide

NAMESPACE NAME READY AGE CONTAINERS IMAGES

kube-system prometheus 0/1 25s prometheus-server-configmap-reload,prometheus-server jimmidyson/configmap-reload:v0.1,prom/prometheus:v2.2.1

prometheus-service.yaml

[root@master prometheus-k8s]# cat prometheus-service.yaml

    kind: Service
    apiVersion: v1
    metadata:
      name: prometheus
      namespace: kube-system
      labels:
        kubernetes.io/name: "Prometheus"
        kubernetes.io/cluster-service: "true"
        addonmanager.kubernetes.io/mode: Reconcile
    spec:
      type: NodePort
      ports:
        - name: http
          port: 9090
          protocol: TCP
          targetPort: 9090
          nodePort: 30090
      selector:
        k8s-app: prometheus

[root@master1 prometheus]# kubectl apply -f prometheus-service.yaml

service/prometheus created
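Once the NodePort Service exists, Prometheus can be checked from outside the cluster through its HTTP API as well as through the web UI. The following is a minimal sketch, assuming that 172.16.75.2 (one of the node addresses used in the scrape config) is reachable on port 30090; the helper names are hypothetical, and the sample response is hand-written in the shape of an instant-vector result rather than captured from this cluster.

```python
import json
from urllib.parse import urlencode
from urllib.request import urlopen


def build_query_url(base_url, promql):
    """Build an instant-query URL for the Prometheus HTTP API."""
    return f"{base_url}/api/v1/query?{urlencode({'query': promql})}"


def fetch(base_url, promql, timeout=5):
    """Run the query against a live Prometheus (needs network access)."""
    with urlopen(build_query_url(base_url, promql), timeout=timeout) as resp:
        return json.load(resp)


def down_instances(response):
    """Return the instance label of every series whose current value is 0."""
    result = response["data"]["result"]
    return [
        series["metric"].get("instance", "")
        for series in result
        if float(series["value"][1]) == 0
    ]


# Hand-written example of a response to the query `up`.
SAMPLE = {
    "status": "success",
    "data": {
        "resultType": "vector",
        "result": [
            {"metric": {"job": "kubernetes-nodes", "instance": "172.16.75.2:9100"},
             "value": [1600000000.0, "1"]},
            {"metric": {"job": "kubernetes-nodes", "instance": "172.16.75.3:9100"},
             "value": [1600000000.0, "0"]},
        ],
    },
}

print(build_query_url("http://172.16.75.2:30090", "up"))
print(down_instances(SAMPLE))
```

Against a running deployment, `down_instances(fetch("http://172.16.75.2:30090", "up"))` would list the same targets that the InstanceDown rule is watching.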

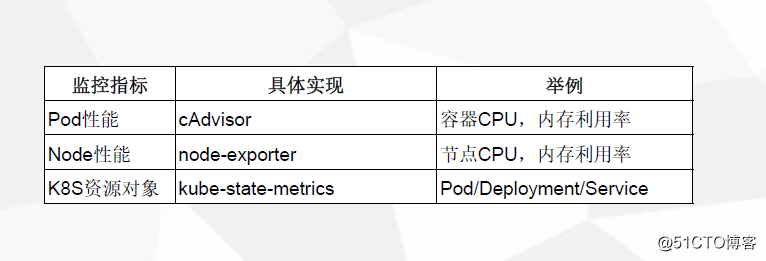

Pod performance is monitored through cAdvisor, which is built into the kubelet; its metrics are exposed on the kubelet's port 10250.

[root@master prometheus-k8s]# for i in `ls kube-state-metrics-*`; do ls $i && cat $i ; done

kube-state-metrics-deployment.yaml

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: kube-state-metrics
      namespace: kube-system
      labels:
        k8s-app: kube-state-metrics
        kubernetes.io/cluster-service: "true"
        addonmanager.kubernetes.io/mode: Reconcile
        version: v1.3.0
    spec:
      selector:
        matchLabels:
          k8s-app: kube-state-metrics
          version: v1.3.0
      replicas: 1
      template:
        metadata:
          labels:
            k8s-app: kube-state-metrics
            version: v1.3.0
          annotations:
            scheduler.alpha.kubernetes.io/critical-pod: ''
        spec:
          priorityClassName: system-cluster-critical
          serviceAccountName: kube-state-metrics
          containers:
            - name: kube-state-metrics
              image: lizhenliang/kube-state-metrics:v1.3.0
              ports:
                - name: http-metrics
                  containerPort: 8080
                - name: telemetry
                  containerPort: 8081
              readinessProbe:
                httpGet:
                  path: /healthz
                  port: 8080
                initialDelaySeconds: 5
                timeoutSeconds: 5
            - name: addon-resizer
              image: lizhenliang/addon-resizer:1.8.3
              resources:
                limits:
                  cpu: 100m
                  memory: 30Mi
                requests:
                  cpu: 100m
                  memory: 30Mi
              env:
                - name: MY_POD_NAME
                  valueFrom:
                    fieldRef:
                      fieldPath: metadata.name
                - name: MY_POD_NAMESPACE
                  valueFrom:
                    fieldRef:
                      fieldPath: metadata.namespace
              volumeMounts:
                - name: config-volume
                  mountPath: /etc/config
              command:
                - /pod_nanny
                - --config-dir=/etc/config
                - --container=kube-state-metrics
                - --cpu=100m
                - --extra-cpu=1m
                - --memory=100Mi
                - --extra-memory=2Mi
                - --threshold=5
                - --deployment=kube-state-metrics
          volumes:
            - name: config-volume
              configMap:
                name: kube-state-metrics-config
    ---
    # Config map for resource configuration.
    apiVersion: v1
    kind: ConfigMap
    metadata:
      name: kube-state-metrics-config
      namespace: kube-system
      labels:
        k8s-app: kube-state-metrics
        kubernetes.io/cluster-service: "true"
        addonmanager.kubernetes.io/mode: Reconcile
    data:
      NannyConfiguration: |-
        apiVersion: nannyconfig/v1alpha1
        kind: NannyConfiguration

kube-state-metrics-rbac.yaml

    apiVersion: v1
    kind: ServiceAccount
    metadata:
      name: kube-state-metrics
      namespace: kube-system
      labels:
        kubernetes.io/cluster-service: "true"
        addonmanager.kubernetes.io/mode: Reconcile
    ---
    apiVersion: rbac.authorization.k8s.io/v1
    kind: ClusterRole
    metadata:
      name: kube-state-metrics
      labels:
        kubernetes.io/cluster-service: "true"
        addonmanager.kubernetes.io/mode: Reconcile
    rules:
      - apiGroups: [""]
        resources:
          - configmaps
          - secrets
          - nodes
          - pods
          - services
          - resourcequotas
          - replicationcontrollers
          - limitranges
          - persistentvolumeclaims
          - persistentvolumes
          - namespaces
          - endpoints
        verbs: ["list", "watch"]
      - apiGroups: ["extensions"]
        resources:
          - daemonsets
          - deployments
          - replicasets
        verbs: ["list", "watch"]
      - apiGroups: ["apps"]
        resources:
          - statefulsets
        verbs: ["list", "watch"]
      - apiGroups: ["batch"]
        resources:
          - cronjobs
          - jobs
        verbs: ["list", "watch"]
      - apiGroups: ["autoscaling"]
        resources:
          - horizontalpodautoscalers
        verbs: ["list", "watch"]
    ---
    apiVersion: rbac.authorization.k8s.io/v1
    kind: Role
    metadata:
      name: kube-state-metrics-resizer
      namespace: kube-system
      labels:
        kubernetes.io/cluster-service: "true"
        addonmanager.kubernetes.io/mode: Reconcile
    rules:
      - apiGroups: [""]
        resources:
          - pods
        verbs: ["get"]
      - apiGroups: ["extensions"]
        resources:
          - deployments
        resourceNames: ["kube-state-metrics"]
        verbs: ["get", "update"]
    ---
    apiVersion: rbac.authorization.k8s.io/v1
    kind: ClusterRoleBinding
    metadata:
      name: kube-state-metrics
      labels:
        kubernetes.io/cluster-service: "true"
        addonmanager.kubernetes.io/mode: Reconcile
    roleRef:
      apiGroup: rbac.authorization.k8s.io
      kind: ClusterRole
      name: kube-state-metrics
    subjects:
      - kind: ServiceAccount
        name: kube-state-metrics
        namespace: kube-system
    ---
    apiVersion: rbac.authorization.k8s.io/v1
    kind: RoleBinding
    metadata:
      name: kube-state-metrics
      namespace: kube-system
      labels:
        kubernetes.io/cluster-service: "true"
        addonmanager.kubernetes.io/mode: Reconcile
    roleRef:
      apiGroup: rbac.authorization.k8s.io
      kind: Role
      name: kube-state-metrics-resizer
    subjects:
      - kind: ServiceAccount
        name: kube-state-metrics
        namespace: kube-system

kube-state-metrics-service.yaml

    apiVersion: v1
    kind: Service
    metadata:
      name: kube-state-metrics
      namespace: kube-system
      labels:
        kubernetes.io/cluster-service: "true"
        addonmanager.kubernetes.io/mode: Reconcile
        kubernetes.io/name: "kube-state-metrics"
      annotations:
        prometheus.io/scrape: 'true'
    spec:
      ports:
        - name: http-metrics
          port: 8080
          targetPort: http-metrics
          protocol: TCP
        - name: telemetry
          port: 8081
          targetPort: telemetry
          protocol: TCP
      selector:
        k8s-app: kube-state-metrics
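The `prometheus.io/scrape: 'true'` annotation on this Service is what lets the `kubernetes-service-endpoints` job pick it up. As a rough sketch of the mechanism: service discovery exposes each annotation as a meta label, with characters that are not valid in a label name replaced by underscores, and the `keep` rule then drops every target whose value is not exactly `true`. The helper names below are hypothetical.

```python
import re


def meta_label_for_service_annotation(annotation):
    """Map a Service annotation key to the meta label seen during relabeling.

    Characters outside [a-zA-Z0-9_] are replaced with underscores.
    """
    sanitized = re.sub(r"[^a-zA-Z0-9_]", "_", annotation)
    return "__meta_kubernetes_service_annotation_" + sanitized


def is_kept(annotations):
    """Mimic `action: keep` with `regex: true` on the scrape annotation.

    A missing annotation behaves like an empty label value, which does
    not match, so the target is dropped.
    """
    return annotations.get("prometheus.io/scrape", "") == "true"


print(meta_label_for_service_annotation("prometheus.io/scrape"))
print(is_kept({"prometheus.io/scrape": "true"}))  # the kube-state-metrics Service
print(is_kept({}))                                # a Service without the annotation
```

The same mapping explains the `prometheus_io_port`, `prometheus_io_path` and `prometheus_io_scheme` labels used elsewhere in the scrape configuration.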


grafana.yaml

[root@master prometheus-k8s]# cat grafana.yaml

    apiVersion: apps/v1
    kind: StatefulSet
    metadata:
      name: grafana
      namespace: kube-system
    spec:
      serviceName: "grafana"
      replicas: 1
      selector:
        matchLabels:
          app: grafana
      template:
        metadata:
          labels:
            app: grafana
        spec:
          containers:
            - name: grafana
              image: grafana/grafana
              ports:
                - containerPort: 3000
                  protocol: TCP
              resources:
                limits:
                  cpu: 100m
                  memory: 256Mi
                requests:
                  cpu: 100m
                  memory: 256Mi
              volumeMounts:
                - name: grafana-data
                  mountPath: /var/lib/grafana
                  subPath: grafana
          securityContext:
            fsGroup: 472
            runAsUser: 472
      volumeClaimTemplates:
        - metadata:
            name: grafana-data
          spec:
            storageClassName: managed-nfs-storage
            accessModes:
              - ReadWriteOnce
            resources:
              requests:
                storage: "1Gi"
    ---
    apiVersion: v1
    kind: Service
    metadata:
      name: grafana
      namespace: kube-system
    spec:
      type: NodePort
      ports:
        - port: 80
          targetPort: 3000
          nodePort: 30007
      selector:
        app: grafana

[root@master1 prometheus]# kubectl apply -f grafana.yaml

statefulset.apps/grafana created

service/grafana created

[root@master1 prometheus]# kubectl get svc -n kube-system

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

grafana NodePort 10.0.0.120 <none> 80:30007/TCP 4s

kube-dns ClusterIP 10.0.0.2 <none> 53/UDP,53/TCP 15d

kuboard NodePort 10.0.0.174 <none> 80:32567/TCP 15d

metrics-server ClusterIP 10.0.0.52 <none> 443/TCP 15d

prometheus NodePort 10.0.0.86 <none> 9090:30090/TCP 22m

Alertmanager alerting

[root@master prometheus-k8s]# for i in `ls alertmanager-*`; do ls $i && cat $i ; done

alertmanager-configmap.yaml

    apiVersion: v1
    kind: ConfigMap
    metadata:
      name: alertmanager-config
      namespace: kube-system
      labels:
        kubernetes.io/cluster-service: "true"
        addonmanager.kubernetes.io/mode: EnsureExists
    data:
      alertmanager.yml: |
        global:
          resolve_timeout: 5m
          smtp_smarthost: 'smtp.163.com:25'
          smtp_from: 'ljy_153@163.com'
          smtp_auth_username: 'ljy_153@163.com'
          smtp_auth_password: '************'
        receivers:
        - name: default-receiver
          email_configs:
          - to: "ljy_153@163.com"
        route:
          group_interval: 1m
          group_wait: 10s
          receiver: default-receiver
          repeat_interval: 1m

alertmanager-deployment.yaml

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: alertmanager
      namespace: kube-system
      labels:
        k8s-app: alertmanager
        kubernetes.io/cluster-service: "true"
        addonmanager.kubernetes.io/mode: Reconcile
        version: v0.14.0
    spec:
      replicas: 1
      selector:
        matchLabels:
          k8s-app: alertmanager
          version: v0.14.0
      template:
        metadata:
          labels:
            k8s-app: alertmanager
            version: v0.14.0
          annotations:
            scheduler.alpha.kubernetes.io/critical-pod: ''
        spec:
          priorityClassName: system-cluster-critical
          containers:
            - name: prometheus-alertmanager
              image: "prom/alertmanager:v0.14.0"
              imagePullPolicy: "IfNotPresent"
              args:
                - --config.file=/etc/config/alertmanager.yml
                - --storage.path=/data
                - --web.external-url=/
              ports:
                - containerPort: 9093
              readinessProbe:
                httpGet:
                  path: /#/status
                  port: 9093
                initialDelaySeconds: 30
                timeoutSeconds: 30
              volumeMounts:
                - name: config-volume
                  mountPath: /etc/config
                - name: storage-volume
                  mountPath: "/data"
                  subPath: ""
              resources:
                limits:
                  cpu: 10m
                  memory: 50Mi
                requests:
                  cpu: 10m
                  memory: 50Mi
            - name: prometheus-alertmanager-configmap-reload
              image: "jimmidyson/configmap-reload:v0.1"
              imagePullPolicy: "IfNotPresent"
              args:
                - --volume-dir=/etc/config
                - --webhook-url=http://localhost:9093/-/reload
              volumeMounts:
                - name: config-volume
                  mountPath: /etc/config
                  readOnly: true
              resources:
                limits:
                  cpu: 10m
                  memory: 10Mi
                requests:
                  cpu: 10m
                  memory: 10Mi
          volumes:
            - name: config-volume
              configMap:
                name: alertmanager-config
            - name: storage-volume
              persistentVolumeClaim:
                claimName: alertmanager

alertmanager-pvc.yaml

    apiVersion: v1
    kind: PersistentVolumeClaim
    metadata:
      name: alertmanager
      namespace: kube-system
      labels:
        kubernetes.io/cluster-service: "true"
        addonmanager.kubernetes.io/mode: EnsureExists
    spec:
      storageClassName: managed-nfs-storage
      accessModes:
        - ReadWriteOnce
      resources:
        requests:
          storage: "2Gi"

alertmanager-service.yaml

    apiVersion: v1
    kind: Service
    metadata:
      name: alertmanager
      namespace: kube-system
      labels:
        kubernetes.io/cluster-service: "true"
        addonmanager.kubernetes.io/mode: Reconcile
        kubernetes.io/name: "Alertmanager"
    spec:
      ports:
        - name: http
          port: 80
          protocol: TCP
          targetPort: 9093
      selector:
        k8s-app: alertmanager
      type: "ClusterIP"

[root@master1 prometheus]# kubectl apply -f alertmanager-deployment.yaml

deployment.apps/alertmanager created

[root@master1 prometheus]# kubectl apply -f alertmanager-configmap.yaml

configmap/alertmanager-config created

[root@master1 prometheus]# kubectl apply -f alertmanager-pvc.yaml

persistentvolumeclaim/alertmanager created

[root@master1 prometheus]# kubectl apply -f alertmanager-service.yaml

service/alertmanager created

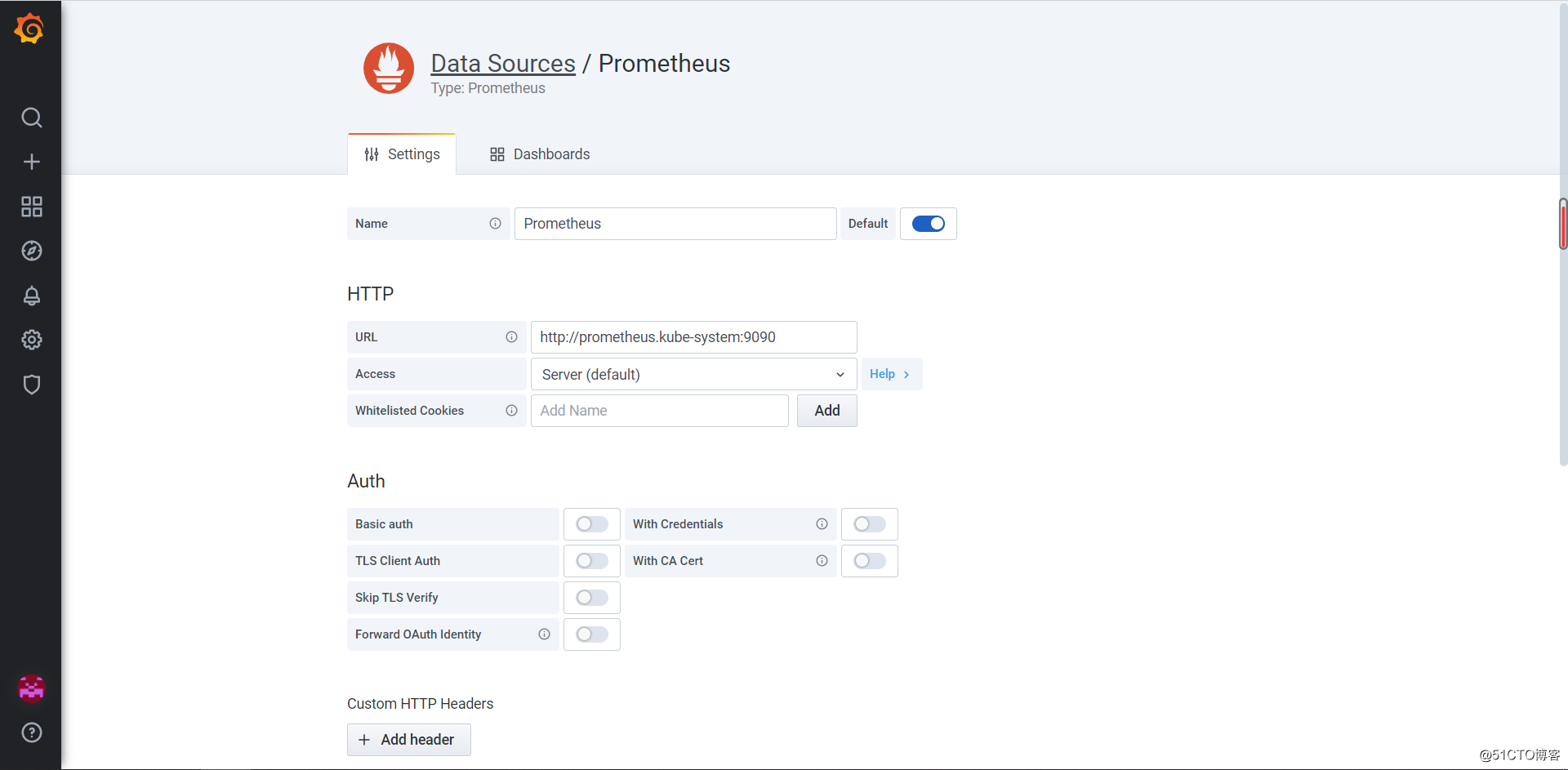

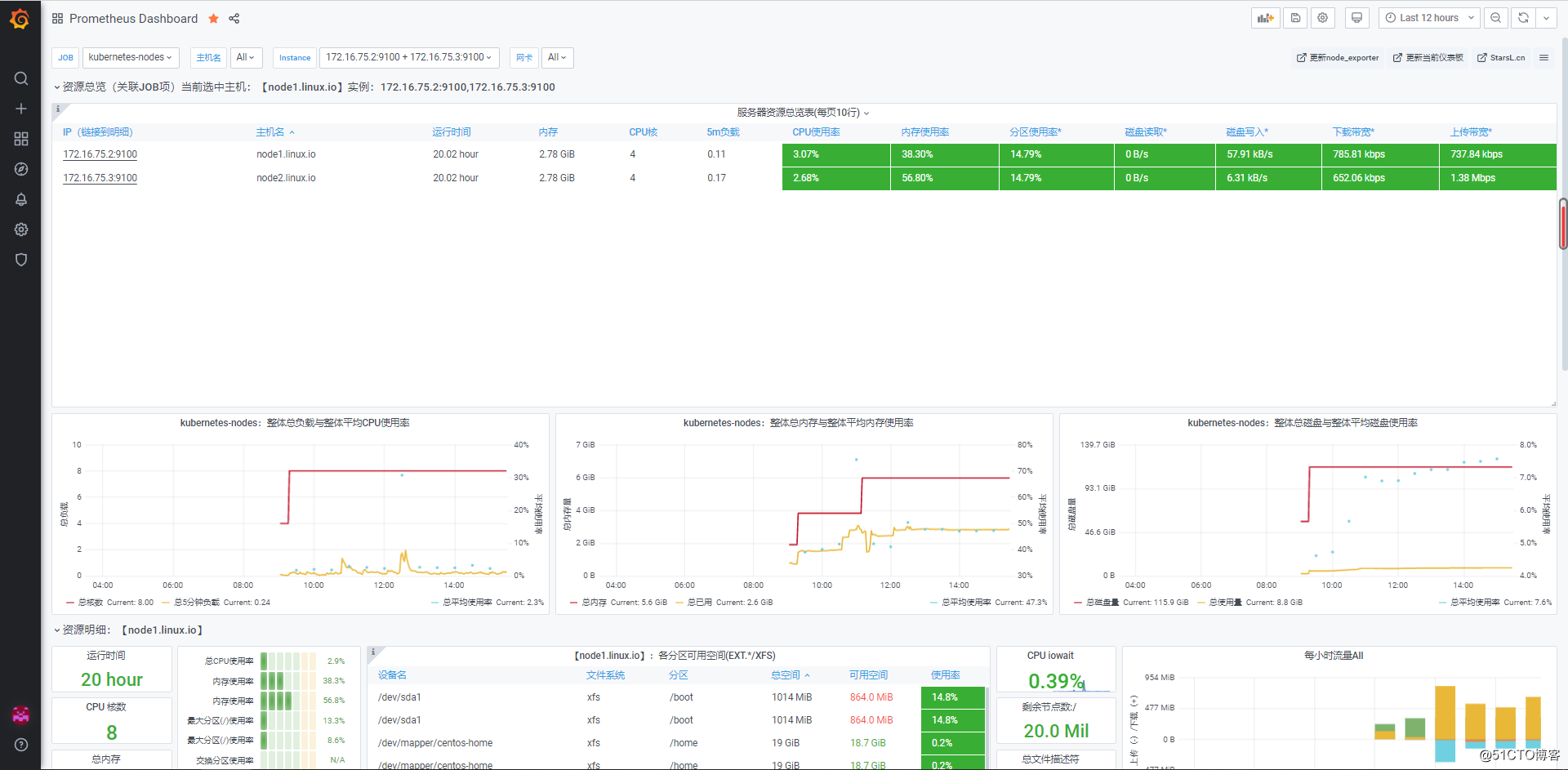

Grafana display:

Connect to Prometheus

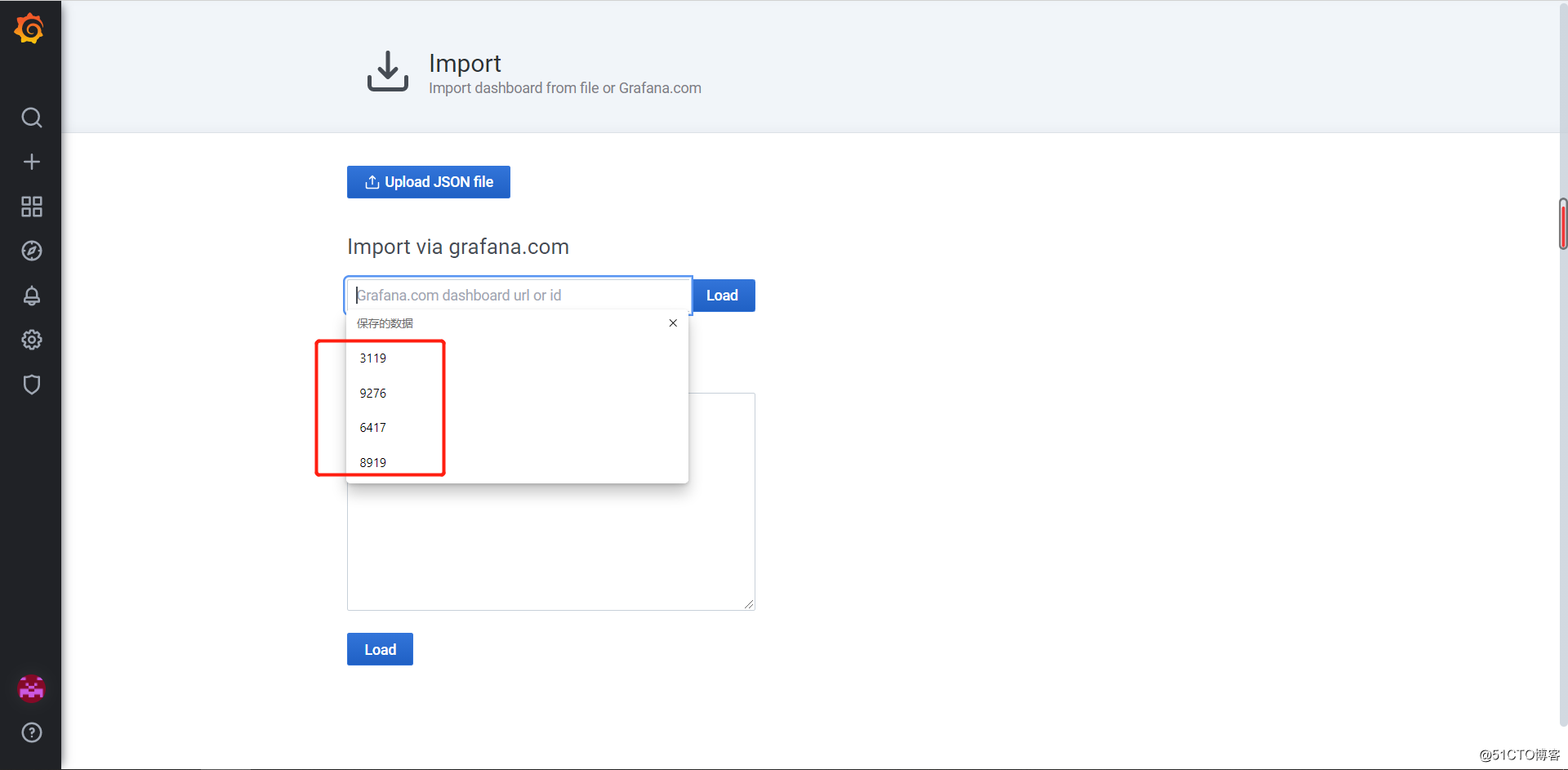
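The data source form comes down to a handful of fields, shown here as the JSON body that Grafana's data source HTTP API (`POST /api/datasources`) accepts. This is only a sketch: the in-cluster URL `http://prometheus.kube-system.svc:9090` is an assumption derived from the Prometheus Service defined earlier (name `prometheus`, namespace `kube-system`, port 9090), and the function name and data source name are hypothetical.

```python
import json


def prometheus_datasource(name="Prometheus",
                          url="http://prometheus.kube-system.svc:9090"):
    """Build the JSON body describing a Prometheus data source.

    "proxy" access means the Grafana server, not the browser, issues the
    queries, so an in-cluster Service address can be used as the URL.
    """
    return {
        "name": name,
        "type": "prometheus",
        "url": url,
        "access": "proxy",
        "isDefault": True,
    }


payload = prometheus_datasource()
print(json.dumps(payload, indent=2))
```

If the data source is configured from a browser-side ("direct") access mode instead, the NodePort address of Prometheus would have to be used in place of the in-cluster name.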

Dashboard

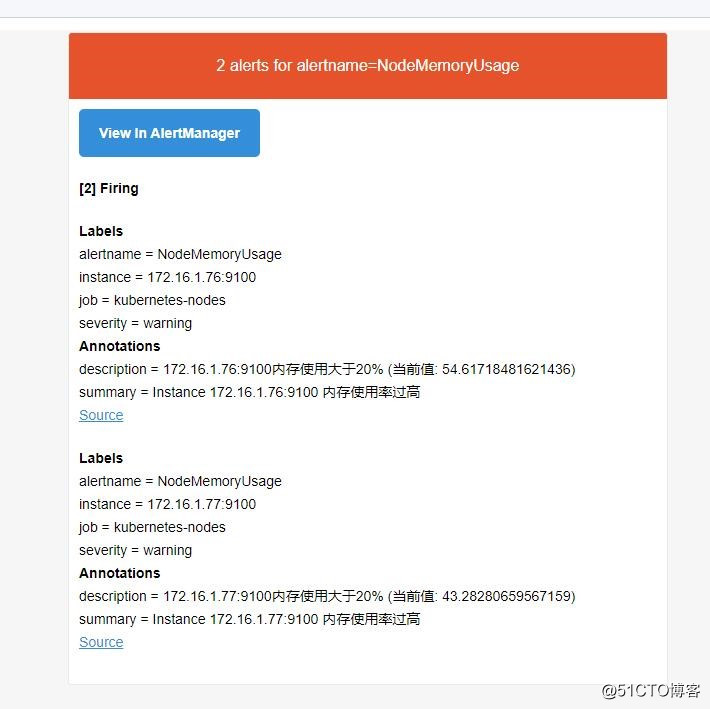

Alert display:

Configure the alerting rules; after modifying a rule, run kubectl apply on it again.

Email notification