Standalone mode is the simplest way to deploy a Flink cluster because it does not depend on any other components. Flink also supports deployment on YARN, Mesos, and K8S.

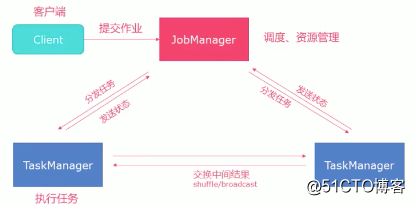

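Standalone execution architecture diagram:

1) The client submits a job to the JobManager.

2) The JobManager requests the resources the job needs and manages both the job and those resources.

3) The JobManager distributes tasks to the TaskManagers for execution.

4) The TaskManagers periodically report their status to the JobManager.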

1. Environment:

10.0.83.71 jobmanager+taskmanager

10.0.83.72 taskmanager

10.0.83.73 taskmanager

systemctl stop firewalld

systemctl disable firewalld

2. Edit the configuration to match the actual cluster:

sed -i 's/jobmanager.rpc.address: localhost/jobmanager.rpc.address: 10.0.83.71/g' /opt/flink/conf/flink-conf.yaml

sed -i 's/taskmanager.numberOfTaskSlots: 1/taskmanager.numberOfTaskSlots: 2/g' /opt/flink/conf/flink-conf.yaml

Allow job submission through the web UI:

sed -i 's/#web.submit.enable: false/web.submit.enable: true/g' /opt/flink/conf/flink-conf.yaml
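
Because `sed -i` rewrites the file in place and silently does nothing when a pattern does not match, it can help to preview the substitutions first. The following is a minimal sketch, not part of the original procedure: it applies the same three expressions to a scratch copy and prints the diff. The three "default" lines in the scratch file are an assumption about what a stock flink-conf.yaml contains, so compare them with your own file.

```shell
#!/bin/sh
# Dry run: apply the substitutions to a scratch copy and show the diff.
# The three "default" lines below are assumed; check them against your own file.
conf=$(mktemp)
preview=$(mktemp)
cat > "$conf" <<'EOF'
jobmanager.rpc.address: localhost
taskmanager.numberOfTaskSlots: 1
#web.submit.enable: false
EOF

# Same expressions as above, but without -i, so nothing is edited in place.
sed -e 's/jobmanager.rpc.address: localhost/jobmanager.rpc.address: 10.0.83.71/g' \
    -e 's/taskmanager.numberOfTaskSlots: 1/taskmanager.numberOfTaskSlots: 2/g' \
    -e 's/#web.submit.enable: false/web.submit.enable: true/g' \
    "$conf" > "$preview"

# diff exits non-zero when the files differ, which is the expected case here.
diff "$conf" "$preview" || true
```

If the diff shows fewer than three changed lines, one of the patterns did not match, and the corresponding key should be edited by hand.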

Specify the master node:

sed -i 's/localhost:8081/10.0.83.71:8081/g' /opt/flink/conf/masters

Specify the worker nodes:

echo -e '10.0.83.71\n10.0.83.72\n10.0.83.73' > /opt/flink/conf/workers
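
With three hosts in the workers file and `taskmanager.numberOfTaskSlots` set to 2, the cluster should offer 3 × 2 = 6 task slots once every TaskManager has registered. The sketch below computes that number from the two files so it can be compared with what the web UI reports. The helper name `expected_slots` and the scratch files are hypothetical; on a real node you would pass /opt/flink/conf/workers and /opt/flink/conf/flink-conf.yaml instead.

```shell
#!/bin/sh
# expected_slots WORKERS_FILE CONF_FILE: print workers x slots-per-TaskManager.
expected_slots() {
    # Count non-empty lines in the workers file (one TaskManager host per line).
    workers=$(grep -c . "$1")
    # Read the number after "taskmanager.numberOfTaskSlots:" in the config.
    slots=$(sed -n 's/^taskmanager\.numberOfTaskSlots:[[:space:]]*\([0-9][0-9]*\).*/\1/p' "$2")
    echo $((workers * slots))
}

# Scratch files mirroring the values used in this post.
workers_file=$(mktemp)
conf_file=$(mktemp)
printf '10.0.83.71\n10.0.83.72\n10.0.83.73\n' > "$workers_file"
printf 'taskmanager.numberOfTaskSlots: 2\n' > "$conf_file"

expected_slots "$workers_file" "$conf_file"   # prints 6
```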

3. Configure passwordless SSH login

Run on each of 71, 72, and 73: ssh-keygen -t rsa

Then, on each machine, copy the public key to the cluster nodes:

ssh-copy-id 10.0.83.71

ssh-copy-id 10.0.83.72

ssh-copy-id 10.0.83.73
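
To confirm that key-based login really works before moving on, a small check like the one below may be useful. It is a sketch under assumptions: the function name `check_ssh` is made up for illustration, and it relies on the OpenSSH options `BatchMode` and `ConnectTimeout`, which make `ssh` fail quickly instead of prompting for a password.

```shell
#!/bin/sh
# check_ssh HOST...: report which hosts accept key-based login without a prompt.
# Returns 0 only if every host succeeded.
check_ssh() {
    failed=0
    for host in "$@"; do
        # BatchMode=yes makes ssh fail instead of asking for a password.
        if ssh -o BatchMode=yes -o ConnectTimeout=5 "$host" true >/dev/null 2>&1; then
            echo "$host: ok"
        else
            echo "$host: FAILED"
            failed=1
        fi
    done
    return "$failed"
}
```

Running `check_ssh 10.0.83.71 10.0.83.72 10.0.83.73` on each machine should print `ok` for all three addresses; any `FAILED` line means `ssh-copy-id` needs to be repeated for that host.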

4. Sync the Flink directory to the other machines

scp -r /opt/flink 10.0.83.72:/opt/

scp -r /opt/flink 10.0.83.73:/opt/

Deploy a Hadoop cluster

For reference, see: https://blog.51cto.com/mapengfei/2546950

Once the Hadoop cluster is deployed, create the input and output directories on HDFS:

hdfs dfs -mkdir -p /wordcount/output

hdfs dfs -mkdir -p /wordcount/input

Upload the sample data to HDFS:

hdfs dfs -put /opt/words.txt /wordcount/input

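Run the Flink test job (the cluster must already be running, for example after bin/start-cluster.sh on the JobManager node; node1:8020 below is assumed to be the NameNode address of your Hadoop cluster):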

cd /opt/flink/

bin/flink run examples/batch/WordCount.jar --input hdfs://node1:8020/wordcount/input/words.txt --output hdfs://node1:8020/wordcount/output
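
To sanity-check the job's result, the same count can be approximated locally with standard tools. This is only a rough cross-check, assuming the bundled example lowercases its input, splits on non-word characters, and writes one "word count" pair per line. The job may also spread its output across several files in no particular order, so sort it before comparing. The function name `local_wordcount` and the sample text are hypothetical, since the contents of /opt/words.txt are not shown in this post.

```shell
#!/bin/sh
# local_wordcount FILE: print "word count" lines, sorted by word.
local_wordcount() {
    tr -cs '[:alnum:]' '\n' < "$1" |   # split on runs of non-alphanumeric characters
        tr '[:upper:]' '[:lower:]' |   # lowercase every token
        sed '/^$/d' |                  # drop the empty token a leading separator creates
        sort | uniq -c |               # count identical words
        awk '{print $2, $1}'           # reorder to "word count"
}

# Hypothetical sample input standing in for /opt/words.txt.
sample=$(mktemp)
printf 'Hello Flink\nhello Hadoop\n' > "$sample"
local_wordcount "$sample"
```

For the sample above this prints `flink 1`, `hadoop 1`, and `hello 2`, one pair per line.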