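```python
import numpy as np
from keras.datasets import mnist
from keras.layers import Dense, Input
from keras.models import Model

img_rows, img_cols = 28, 28

# Load MNIST; labels are not needed because the autoencoder reconstructs its own input
(x_train, _), (x_test, _) = mnist.load_data()

# Scale pixel values from [0, 255] to [0, 1]
x_train = x_train.astype('float32') / 255.
x_test = x_test.astype('float32') / 255.

# Flatten each 28x28 image into a 784-dimensional vector
x_train = x_train.reshape((len(x_train), np.prod(x_train.shape[1:])))
x_test = x_test.reshape((len(x_test), np.prod(x_test.shape[1:])))

input_img = Input(shape=(img_rows * img_cols,))

# Encoder: 784 -> 500 units with ReLU
encoded = Dense(500, activation='relu')(input_img)

# Decoder: 500 -> 784 units with sigmoid, so outputs lie in (0, 1) like the scaled pixels
decoded = Dense(img_rows * img_cols, activation='sigmoid')(encoded)

# Keras 2+ expects `inputs`/`outputs`; `input`/`output` are the old Keras 1 names
autoencoder = Model(inputs=input_img, outputs=decoded)
autoencoder.compile(optimizer='adadelta', loss='binary_crossentropy')
autoencoder.summary()

# Input and target are the same images; `epochs` is the Keras 2+ name for Keras 1's `nb_epoch`
autoencoder.fit(x_train, x_train,
                epochs=10, batch_size=128, shuffle=True, verbose=2,
                validation_data=(x_test, x_test))
```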

```text
_________________________________________________________________
Layer (type)                 Output Shape              Param #
=================================================================
input_1 (InputLayer)         (None, 784)               0
_________________________________________________________________
dense_1 (Dense)              (None, 500)               392500
_________________________________________________________________
dense_2 (Dense)              (None, 784)               392784
=================================================================
Total params: 785,284
Trainable params: 785,284
Non-trainable params: 0
```
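
The parameter counts in the summary follow from the fully connected layer formula: inputs × units weights plus one bias per unit. The short Python check below reproduces that arithmetic. The helper `dense_params` is a hypothetical name used only for illustration.

```python
def dense_params(n_in: int, n_units: int) -> int:
    """Parameters of a fully connected layer: weight matrix plus bias vector."""
    return n_in * n_units + n_units

encoder_params = dense_params(784, 500)  # 784*500 weights + 500 biases
decoder_params = dense_params(500, 784)  # 500*784 weights + 784 biases
total = encoder_params + decoder_params

print(encoder_params, decoder_params, total)  # → 392500 392784 785284
```

Both layers have the same 392,000 weights. Their totals differ only by the bias count, which is 500 for the encoder and 784 for the decoder.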

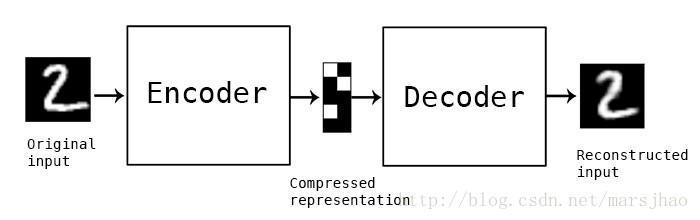

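An autoencoder learns, without labels, to encode its input and then decode it back into a reconstruction of that same input. The single-layer autoencoder here is simple. A fully connected layer with ReLU activation acts as the encoder and compresses the 784 pixels into 500 units. A second fully connected layer with sigmoid activation acts as the decoder and maps them back to 784 values in (0, 1). We then wrap the input and output tensors into an autoencoder model: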

```python
autoencoder = Model(inputs=input_img, outputs=decoded)
```
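
The NumPy sketch below makes the encode/decode step concrete. It shows what the two Dense layers compute for one batch, together with a binary cross-entropy reconstruction loss. This loss is a mean over pixels and samples, which is roughly what `loss='binary_crossentropy'` computes in Keras. The random input batch, the random weights, and their scale are illustrative assumptions. Keras initializes these weights in its own way and then learns them during `fit`.

```python
import numpy as np

rng = np.random.default_rng(0)

n_in, n_hidden = 28 * 28, 500
# Hypothetical stand-in for 4 scaled MNIST images with pixel values in [0, 1]
batch = rng.random((4, n_in)).astype(np.float32)

# Hypothetical untrained weights (Glorot-like scale), zero biases
scale = np.sqrt(2.0 / (n_in + n_hidden))
W_enc = rng.normal(0.0, scale, size=(n_in, n_hidden)).astype(np.float32)
b_enc = np.zeros(n_hidden, dtype=np.float32)
W_dec = rng.normal(0.0, scale, size=(n_hidden, n_in)).astype(np.float32)
b_dec = np.zeros(n_in, dtype=np.float32)

def relu(z):
    return np.maximum(z, 0.0)

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def binary_crossentropy(y_true, y_pred, eps=1e-7):
    # Clip predictions to avoid log(0), then average over pixels and samples
    y_pred = np.clip(y_pred, eps, 1.0 - eps)
    per_pixel = -(y_true * np.log(y_pred) + (1.0 - y_true) * np.log(1.0 - y_pred))
    return per_pixel.mean()

encoded = relu(batch @ W_enc + b_enc)       # (4, 500): the code
decoded = sigmoid(encoded @ W_dec + b_dec)  # (4, 784): reconstruction in (0, 1)
loss = binary_crossentropy(batch, decoded)

print(encoded.shape, decoded.shape, float(loss))
```

The sigmoid output keeps every reconstructed pixel in the same (0, 1) range as the scaled inputs. That is what makes binary cross-entropy a sensible reconstruction loss here.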

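This time the optimizer is adadelta, an extension of Adagrad. Adagrad divides each parameter's step by the accumulated sum of all past squared gradients, so its effective learning rate keeps shrinking. Adadelta instead uses exponentially decaying averages, which keeps updates from vanishing and means we rarely need to tune a learning rate ourselves. Note that newer Keras releases, including `tf.keras`, give `Adadelta` a default `learning_rate` of 0.001 rather than the 1.0 used by older standalone Keras. Passing the string `'adadelta'` can therefore train much more slowly there, and `keras.optimizers.Adadelta(learning_rate=1.0)` restores the old default.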
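
The NumPy sketch below shows why Adadelta needs no hand-set learning rate. In its original formulation it keeps decaying averages of squared gradients, E[g²], and squared updates, E[Δx²]. It then uses the ratio of their root-mean-squares as a per-parameter step size. This is the textbook update rule applied to a toy quadratic, not Keras's exact implementation, which additionally multiplies the step by `learning_rate`. The values `rho=0.95`, `eps=1e-6`, and the helper name `adadelta_minimize` are assumptions for illustration.

```python
import numpy as np

def adadelta_minimize(grad_fn, x0, rho=0.95, eps=1e-6, steps=500):
    """Textbook Adadelta: the step size comes from a ratio of running RMS values."""
    x = np.asarray(x0, dtype=np.float64).copy()
    avg_sq_grad = np.zeros_like(x)    # running average E[g^2]
    avg_sq_update = np.zeros_like(x)  # running average E[dx^2]
    for _ in range(steps):
        g = grad_fn(x)
        avg_sq_grad = rho * avg_sq_grad + (1 - rho) * g ** 2
        # Step = -(RMS of past updates / RMS of gradients) * gradient
        dx = -np.sqrt(avg_sq_update + eps) / np.sqrt(avg_sq_grad + eps) * g
        avg_sq_update = rho * avg_sq_update + (1 - rho) * dx ** 2
        x += dx
    return x

# Toy problem: minimize f(x) = sum((x - 3)^2), whose gradient is 2 * (x - 3)
x_final = adadelta_minimize(lambda x: 2 * (x - 3.0), x0=[0.0, 10.0])
print(x_final)
```

E[Δx²] starts at zero, so the first steps are only on the order of sqrt(eps) in size and the parameters move slowly at first. After that, the RMS ratio sets each parameter's step size without a global learning rate.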