
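The previous article gave a general overview of the scheduler-framework. Building on that, and prompted by problems we ran into in production (for example, compute services not being scheduled onto the nodes with the best actual CPU availability), this article implements a simple custom scheduler based on CPU metrics. The custom scheduler uses metrics-server, the Kubernetes resource metrics service, to obtain the current resource usage of each node. It then scores the nodes and schedules the Pod onto the node with the highest score.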
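Because metrics-server is the data source for all of the decisions below, it helps to see what its raw numbers look like. To our knowledge, metrics-server usually reports node CPU usage in nanocores (values such as `123456789n`) and memory in kibibytes (values such as `1024Ki`). The stdlib-only sketch below converts such strings and computes a utilization rate. The function names are hypothetical and are not the helpers from the project's repository; real code would normally use `resource.ParseQuantity` from `k8s.io/apimachinery`, which handles the full quantity grammar.

```go
package main

import (
	"strconv"
	"strings"
)

// cpuSuffixes maps the CPU quantity suffixes handled by this sketch to their
// size in nanocores. A value without a suffix is a number of whole cores.
var cpuSuffixes = []struct {
	suffix string
	nanos  float64
}{{"n", 1}, {"u", 1e3}, {"m", 1e6}}

// memSuffixes maps binary memory suffixes to bytes. Decimal suffixes
// (k, M, G) and exponents are deliberately not handled here.
var memSuffixes = []struct {
	suffix string
	bytes  float64
}{{"Ki", 1 << 10}, {"Mi", 1 << 20}, {"Gi", 1 << 30}}

// parseCPUNanoCores converts strings such as "123456789n", "250m" or "2"
// into nanocores.
func parseCPUNanoCores(q string) (float64, error) {
	for _, s := range cpuSuffixes {
		if strings.HasSuffix(q, s.suffix) {
			v, err := strconv.ParseFloat(strings.TrimSuffix(q, s.suffix), 64)
			return v * s.nanos, err
		}
	}
	v, err := strconv.ParseFloat(q, 64) // whole (or fractional) cores
	return v * 1e9, err
}

// parseMemoryBytes converts strings such as "1024Ki", "512Mi" or "1048576"
// into bytes.
func parseMemoryBytes(q string) (float64, error) {
	for _, s := range memSuffixes {
		if strings.HasSuffix(q, s.suffix) {
			v, err := strconv.ParseFloat(strings.TrimSuffix(q, s.suffix), 64)
			return v * s.bytes, err
		}
	}
	return strconv.ParseFloat(q, 64)
}

// usageRate returns used/capacity, or 0 when capacity is not positive.
func usageRate(used, capacity float64) float64 {
	if capacity <= 0 {
		return 0
	}
	return used / capacity
}
```

For example, a node using `500000000n` out of 2 cores (2e9 nanocores) has a CPU rate of 0.25, the same kind of value the Filter stage later prints as `CPU:` in the scheduler log.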

PreFilter extension point

Preprocesses the Pod's information and checks whether the Pod or the cluster meets the preconditions for scheduling.

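Using the annotations rely.on.namespaces/name and rely.on.pod/labs declared on the Pod (for example `kube-system` and `k8s-app=metrics-server`), the plugin checks whether the Pods this Pod depends on are ready. If they are not, scheduling is aborted and the Pod stays in the Pending state.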
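The annotation check itself can be modelled without a cluster. The sketch below is a hypothetical, stdlib-only stand-in for the project's `prefilter.IsExist` and `prefilter.IsReady` helpers: namespaces and Pods are passed in as plain values, the selector supports only comma-separated `key=value` pairs, and "ready" is assumed to mean that at least one matching Pod exists and every matching Pod is ready. The real helpers presumably query the API server, and their exact semantics may differ.

```go
package main

import "strings"

// podInfo is a hypothetical, minimal stand-in for a Pod.
type podInfo struct {
	Namespace string
	Labels    map[string]string
	Ready     bool
}

// parseSelector turns "k8s-app=metrics-server,tier=web" into a map.
// Only equality selectors are supported in this sketch.
func parseSelector(s string) map[string]string {
	sel := map[string]string{}
	for _, part := range strings.Split(s, ",") {
		kv := strings.SplitN(strings.TrimSpace(part), "=", 2)
		if len(kv) == 2 && kv[0] != "" {
			sel[kv[0]] = kv[1]
		}
	}
	return sel
}

// matches reports whether labels contain every key/value pair of sel.
func matches(labels, sel map[string]string) bool {
	for k, v := range sel {
		if labels[k] != v {
			return false
		}
	}
	return true
}

// checkDependency mirrors the PreFilter decision and returns whether the Pod
// may proceed, plus a reason string.
func checkDependency(annotations map[string]string, namespaces map[string]bool, pods []podInfo) (bool, string) {
	ns := annotations["rely.on.namespaces/name"]
	selStr := annotations["rely.on.pod/labs"]
	if ns == "" || selStr == "" {
		return true, "no dependency"
	}
	if !namespaces[ns] {
		return false, "not found namespace: " + ns
	}
	sel := parseSelector(selStr)
	if len(sel) == 0 {
		return false, "invalid selector: " + selStr
	}
	found := false
	for _, p := range pods {
		if p.Namespace != ns || !matches(p.Labels, sel) {
			continue
		}
		found = true
		if !p.Ready {
			return false, "rely pod not ready"
		}
	}
	if !found {
		return false, "rely pod not ready" // no matching Pod at all
	}
	return true, "rely pod is ready"
}
```

Treating "no matching Pod" as not ready is a design choice: it keeps a dependent Pod Pending until its dependency has actually been created.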

```go
// PreFilter checks whether the Pods this Pod depends on (declared through
// annotations) are ready. If not, the Pod is left Pending.
func (pl *sample) PreFilter(ctx context.Context, state *framework.CycleState, p *v1.Pod) *framework.Status {
	namespace := p.Annotations["rely.on.namespaces/name"]
	podLabs := p.Annotations["rely.on.pod/labs"]
	// No dependency declared: nothing to check.
	if namespace == "" || podLabs == "" {
		return framework.NewStatus(framework.Success, "no dependency")
	}
	// The namespace of the dependency must exist.
	if !prefilter.IsExist(namespace) {
		return framework.NewStatus(framework.Unschedulable, "not found namespace: "+namespace)
	}
	// Query readiness once and reuse the result for logging.
	ready := prefilter.IsReady(namespace, podLabs)
	if !ready {
		return framework.NewStatus(framework.Unschedulable, "rely pod not ready")
	}
	klog.Infoln("rely pod is ready :", namespace, podLabs, ready)
	return framework.NewStatus(framework.Success, "rely pod is ready")
}
```

Scheduling failure result:

```
Events:
  Type     Reason            Age  From              Message
  ----     ------            ---- ----              -------
  Warning  FailedScheduling  9s   sample-scheduler  0/2 nodes are available: 2 rely pod not ready.
  Warning  FailedScheduling  9s   sample-scheduler  0/2 nodes are available: 2 rely pod not ready.
```

If the Pod's preconditions are met, it is scheduled normally and moves on to the next phase:

```
I1025 12:26:59.435773 1 plugins.go:50] rely pod is ready : kube-system k8s-app=metrics-server true
```

Filter extension point

Filters out the nodes that do not meet the Pod's scheduling requirements.
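The resource part of this check is easier to reason about in isolation. The sketch below is a hypothetical, stdlib-only model: `container` and `nodeStats` are illustrative types with CPU already converted to millicores and memory to MiB, and the reason strings reuse the messages of the article's Filter plugin. It sums requests and limits over all containers before comparing, because a Pod needs the combined resources of its containers.

```go
package main

// container holds one container's requests and limits: CPU in millicores,
// memory in MiB. A zero limit means "not set". Hypothetical type.
type container struct {
	ReqMilliCPU, LimMilliCPU int64
	ReqMiB, LimMiB           int64
}

// nodeStats is a hypothetical snapshot of a node's capacity and usage.
type nodeStats struct {
	CapMilliCPU, UsedMilliCPU int64
	CapMiB, UsedMiB           int64
}

// fits sums requests and limits over all containers, then checks the totals
// first against the node's capacity and then against what is currently free.
func fits(cs []container, n nodeStats) (bool, string) {
	var reqCPU, limCPU, reqMiB, limMiB int64
	for _, c := range cs {
		reqCPU += c.ReqMilliCPU
		limCPU += c.LimMilliCPU
		reqMiB += c.ReqMiB
		limMiB += c.LimMiB
	}
	freeCPU := n.CapMilliCPU - n.UsedMilliCPU
	freeMiB := n.CapMiB - n.UsedMiB
	switch {
	case reqCPU > n.CapMilliCPU || reqMiB > n.CapMiB:
		return false, "out of Requests resources"
	case limCPU > n.CapMilliCPU || limMiB > n.CapMiB:
		return false, "out of Limits resources"
	case reqCPU > freeCPU || reqMiB > freeMiB:
		return false, "out of Requests resources system"
	case limCPU > freeCPU || limMiB > freeMiB:
		return false, "out of Limits resources system"
	}
	return true, "fits"
}
```

On a 2-core node with 1500m in use, a Pod with two containers requesting 300m each is rejected: checked one container at a time, each 300m request would fit into the 500m that is free, but together they need 600m.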

```go
// Filter removes nodes that cannot (or should not) run the Pod.
func (pl *sample) Filter(ctx context.Context, state *framework.CycleState, pod *v1.Pod, nodeInfo *framework.NodeInfo) *framework.Status {
	node := nodeInfo.Node()
	if node == nil {
		return framework.NewStatus(framework.Error, "node not found")
	}
	// Only nodes labeled cpu=true are candidates.
	if node.Labels["cpu"] != "true" {
		return framework.NewStatus(framework.Unschedulable, "not found labels")
	}
	// Usage and capacity from the project's filter.ResourceStatus helper; CPU is
	// assumed to be in nanocores and memory in MiB, matching the conversions below.
	nodeUsedCPU, nodeUsedMen, nodeCPU, nodeMen, cpuRate, menRate := filter.ResourceStatus(node.Name)

	// Sum requests and limits over all containers. MilliValue() handles "500m"
	// as well as whole cores; multiplying by 1e6 converts millicores to nanocores.
	var requestsCPU, limitsCPU float64
	var requestsMen, limitsMen int64
	for i := range pod.Spec.Containers {
		res := pod.Spec.Containers[i].Resources
		requestsCPU += float64(res.Requests.Cpu().MilliValue()) * 1e6
		limitsCPU += float64(res.Limits.Cpu().MilliValue()) * 1e6
		requestsMen += res.Requests.Memory().Value() / 1024 / 1024
		limitsMen += res.Limits.Memory().Value() / 1024 / 1024
	}

	// Compare against total capacity first, then against currently free resources.
	freeCPU := float64(nodeCPU) - nodeUsedCPU
	freeMen := nodeMen - nodeUsedMen
	switch {
	case requestsCPU > float64(nodeCPU) || requestsMen > nodeMen:
		return framework.NewStatus(framework.Unschedulable, "out of Requests resources")
	case limitsCPU > float64(nodeCPU) || limitsMen > nodeMen:
		return framework.NewStatus(framework.Unschedulable, "out of Limits resources")
	case requestsCPU > freeCPU || requestsMen > freeMen:
		return framework.NewStatus(framework.Unschedulable, "out of Requests resources system")
	case limitsCPU > freeCPU || limitsMen > freeMen:
		return framework.NewStatus(framework.Unschedulable, "out of Limits resources system")
	}

	klog.Infof("node:%s, CPU:%v , Memory: %v", node.Name, cpuRate, menRate)
	// Utilization thresholds default to 85% and can be overridden via env vars.
	cpuThreshold := filter.GetEnvFloat("CPU_THRESHOLD", 0.85)
	menThreshold := filter.GetEnvFloat("MEN_THRESHOLD", 0.85)
	if cpuRate > cpuThreshold || menRate > menThreshold {
		return framework.NewStatus(framework.Unschedulable, "out of system resources")
	}
	return framework.NewStatus(framework.Success, "Node: "+node.Name)
}
```

This Filter:

- filters out nodes without the `cpu=true` label;
- filters out nodes whose capacity or currently available resources are smaller than the Pod's total requests or limits;
- by default, filters out nodes whose CPU or memory utilization exceeds 85%. The thresholds can be changed with the CPU_THRESHOLD and MEN_THRESHOLD environment variables.
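The threshold rule can be isolated as well. Below, `getEnvFloat` is a hypothetical re-implementation of a helper like the project's `filter.GetEnvFloat`; how the real helper treats invalid input is not shown in the article, so this sketch simply falls back to the default for unset, unparsable, or out-of-range values. `exceeds` is the comparison applied to the utilization rates that appear in the scheduler log.

```go
package main

import (
	"os"
	"strconv"
)

// getEnvFloat reads a rate in (0, 1] from the environment and falls back to
// def when the variable is unset, unparsable, or out of range.
func getEnvFloat(key string, def float64) float64 {
	v, ok := os.LookupEnv(key)
	if !ok {
		return def
	}
	f, err := strconv.ParseFloat(v, 64)
	if err != nil || f <= 0 || f > 1 {
		return def
	}
	return f
}

// thresholdsFromEnv returns the CPU and memory thresholds, defaulting to 0.85.
func thresholdsFromEnv() (cpu, mem float64) {
	return getEnvFloat("CPU_THRESHOLD", 0.85), getEnvFloat("MEN_THRESHOLD", 0.85)
}

// exceeds reports whether a node should be filtered out because its CPU or
// memory utilization is above the given thresholds.
func exceeds(cpuRate, memRate, cpuThreshold, memThreshold float64) bool {
	return cpuRate > cpuThreshold || memRate > memThreshold
}
```

Keeping the comparison separate from the environment lookup makes the rule easy to test; a caller would use `c, m := thresholdsFromEnv()` followed by `exceeds(cpuRate, memRate, c, m)`.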

Scheduling failure result:

```
Events:
  Type     Reason            Age    From              Message
  ----     ------            ----   ----              -------
  Warning  FailedScheduling  9m33s  sample-scheduler  0/2 nodes are available: 1 Insufficient cpu, 1 out of Limits resources system.
  Warning  FailedScheduling  9m33s  sample-scheduler  0/2 nodes are available: 1 Insufficient cpu, 1 out of Limits resources system.
```

The resource utilization obtained for each node can be checked in the scheduler log:

```
I1025 12:29:21.360979 1 plugins.go:87] node:k8s-test09, CPU:0.06645718025 , Memory: 0.33549941504789904
I1025 12:29:21.361471 1 plugins.go:87] node:k8s-test10, CPU:0.0411259705 , Memory: 0.15946609553600768
```

Score extension point

Scores the nodes that passed the Filter phase.

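```go
// Score rates a node that passed Filter; a higher score is better.
func (pl *sample) Score(ctx context.Context, state *framework.CycleState, p *v1.Pod, nodeName string) (int64, *framework.Status) {
	isSamePod := score.IsSamePod(nodeName, p.Namespace, p.Labels) // max 2: lower if the node already runs the same Pod
	cpuLoad := score.CPURate(nodeName)                             // max 3: lower CPU usage scores higher
	menLoad := score.MemoryRate(nodeName)                          // max 3: lower memory usage scores higher
	core := score.CpuCore(nodeName)                                // max 3: more CPU cores score higher
	// The total is at most 11, well within the framework's [0, 100] range.
	c := isSamePod + cpuLoad + core + menLoad
	klog.Infoln(nodeName+" score is :", c)
	return c, framework.NewStatus(framework.Success, nodeName)
}
```

Scoring rules: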

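- Nodes with higher specs (more CPU cores) get a higher score.

- Nodes with lower current resource usage get a higher score.

- When several replicas of a Pod are running, nodes that already run the same Pod get a lower score.

- Scores must be integers within [0, 100] (framework.MinNodeScore to framework.MaxNodeScore). If a Score plugin's raw scores can fall outside this range, the plugin must implement NormalizeScore (returned through ScoreExtensions) to map them back into it.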
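The rules above leave the exact thresholds open. The sketch below is one hypothetical way to turn them into the point budget used in the Score code (same Pod 2, CPU load 3, memory load 3, cores 3). The bucket boundaries are illustrative assumptions, not the values used by the project's `score` package. It also shows max-scaling normalization into [0, 100], similar in spirit to what a `NormalizeScore` implementation might do; since this scheme's maximum raw score is 11, normalization is optional here and only stretches the differences between nodes.

```go
package main

// maxNodeScore mirrors framework.MaxNodeScore (100).
const maxNodeScore int64 = 100

// loadScore maps a utilization rate in [0, 1] to 0..3; lower usage scores
// higher. The bucket boundaries are illustrative.
func loadScore(rate float64) int64 {
	switch {
	case rate < 0.3:
		return 3
	case rate < 0.5:
		return 2
	case rate < 0.7:
		return 1
	}
	return 0
}

// coreScore maps a CPU core count to 0..3; more cores score higher.
func coreScore(cores int) int64 {
	switch {
	case cores >= 16:
		return 3
	case cores >= 8:
		return 2
	case cores >= 4:
		return 1
	}
	return 0
}

// spreadScore gives 2 points to nodes not yet running a replica of the Pod.
func spreadScore(samePods int) int64 {
	if samePods == 0 {
		return 2
	}
	return 0
}

// nodeView is a hypothetical summary of what the scorer knows about a node.
type nodeView struct {
	Cores            int
	CPURate, MemRate float64
	SamePods         int
}

// rawScore adds up the four components; the maximum is 2+3+3+3 = 11.
func rawScore(n nodeView) int64 {
	return spreadScore(n.SamePods) + loadScore(n.CPURate) + loadScore(n.MemRate) + coreScore(n.Cores)
}

// normalize scales scores in place so the best node gets maxNodeScore.
func normalize(scores map[string]int64) {
	var highest int64
	for _, s := range scores {
		if s > highest {
			highest = s
		}
	}
	if highest == 0 {
		return // all zero: nothing to scale
	}
	for name, s := range scores {
		scores[name] = s * maxNodeScore / highest
	}
}
```

With these assumed buckets, an 8-core node at 6.6% CPU and 33.5% memory scores 9; once it runs a replica of the Pod, the spread component drops and it scores 7.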

Scheduling result:

```
I1025 12:28:35.620198 1 plugins.go:105] k8s-test10 score is : 6
I1025 12:28:35.626170 1 plugins.go:105] k8s-test09 score is : 7
I1025 12:28:35.626394 1 trace.go:205] Trace[232544630]: "Scheduling" namespace:default,name:test-scheduler-84859f9467-rgfpd (25-Oct-2020 12:28:35.488) (total time: 137ms):
Trace[232544630]: ---"Computing predicates done" 38ms (12:28:00.526)
Trace[232544630]: ---"Prioritizing done" 99ms (12:28:00.626)
Trace[232544630]: [137.453956ms] [137.453956ms] END
I1025 12:28:35.626551 1 default_binder.go:51] Attempting to bind default/test-scheduler-84859f9467-rgfpd to k8s-test09
I1025 12:28:35.632736 1 eventhandlers.go:205] delete event for unscheduled pod default/test-scheduler-84859f9467-rgfpd
I1025 12:28:35.632893 1 scheduler.go:597] "Successfully bound pod to node" pod="default/test-scheduler-84859f9467-rgfpd" node="k8s-test09" evaluatedNodes=2 feasibleNodes=2
I1025 12:28:35.633369 1 eventhandlers.go:225] add event for scheduled pod default/test-scheduler-84859f9467-rgfpd
I1025 12:29:21.328791 1 eventhandlers.go:173] add event for unscheduled pod default/test-scheduler-84859f9467-48wlr
I1025 12:29:21.328897 1 scheduler.go:452] Attempting to schedule pod: default/test-scheduler-84859f9467-48wlr
I1025 12:29:21.351126 1 plugins.go:50] rely pod is ready : kube-system k8s-app=metrics-server true
I1025 12:29:21.360979 1 plugins.go:87] node:k8s-test09, CPU:0.06645718025 , Memory: 0.33549941504789904
I1025 12:29:21.361471 1 plugins.go:87] node:k8s-test10, CPU:0.0411259705 , Memory: 0.15946609553600768
I1025 12:29:21.419137 1 plugins.go:105] k8s-test10 score is : 6
I1025 12:29:21.419210 1 plugins.go:105] k8s-test09 score is : 5
I1025 12:29:21.419407 1 default_binder.go:51] Attempting to bind default/test-scheduler-84859f9467-48wlr to k8s-test10
I1025 12:29:21.427243 1 scheduler.go:597] "Successfully bound pod to node" pod="default/test-scheduler-84859f9467-48wlr" node="k8s-test10" evaluatedNodes=2 feasibleNodes=2
I1025 12:29:21.427441 1 eventhandlers.go:205] delete event for unscheduled pod default/test-scheduler-84859f9467-48wlr
I1025 12:29:21.427472 1 eventhandlers.go:225] add event for scheduled pod default/test-scheduler-84859f9467-48wlr
```

The first Pod is bound to k8s-test09 (7 vs. 6). For the second Pod, k8s-test09's score drops to 5, presumably because it now runs a replica of the same Pod, so the second Pod is bound to k8s-test10.

Complete sample code:

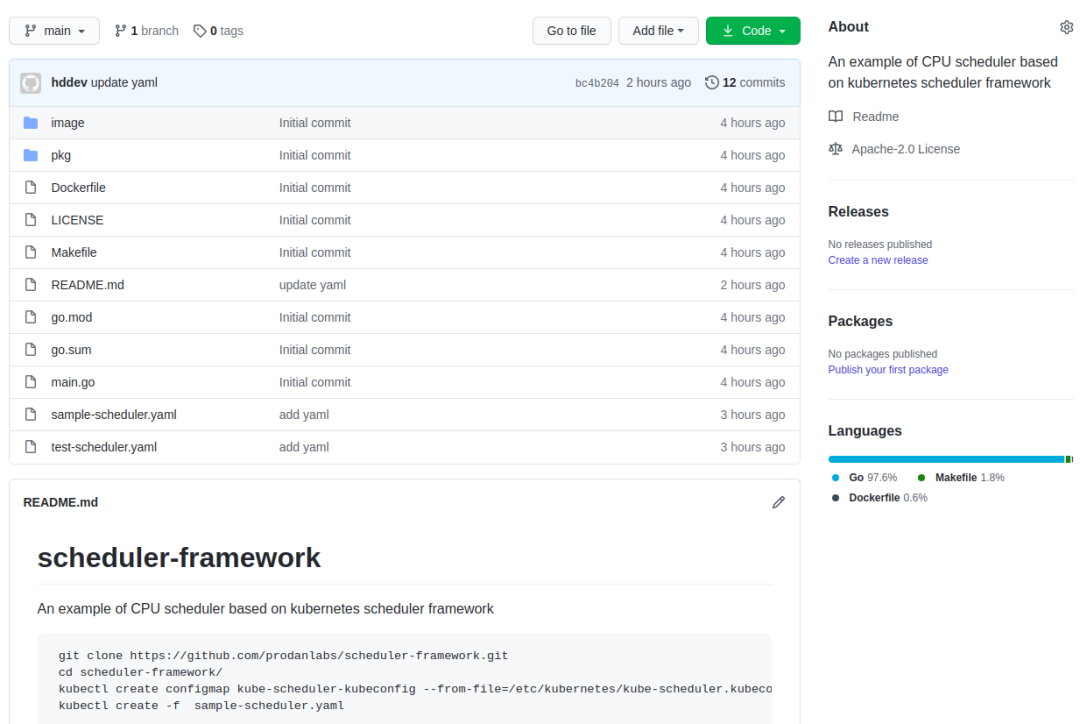

https://github.com/prodanlabs/scheduler-framework
