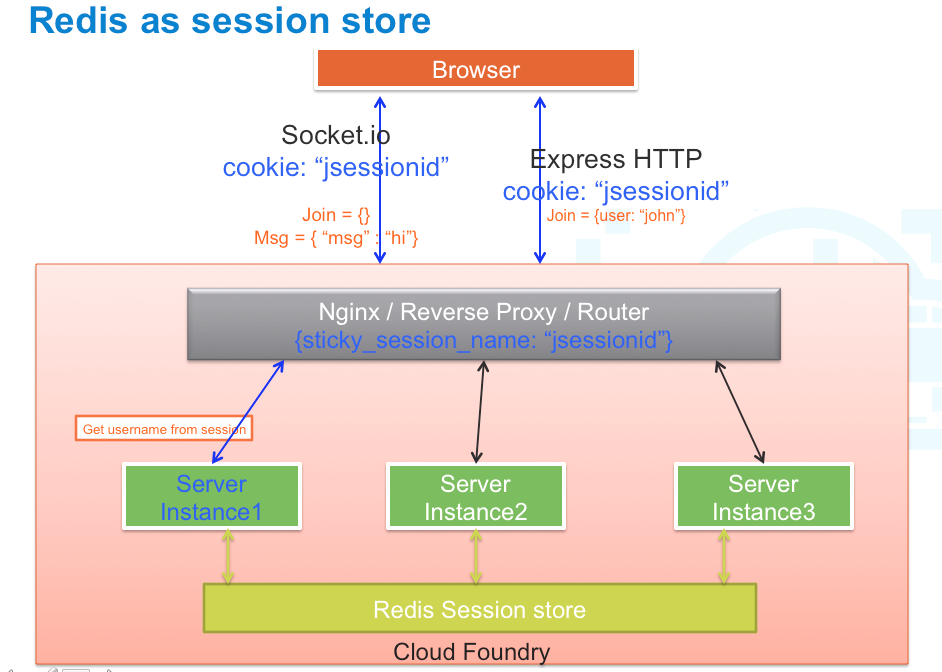

A few days ago, I was experimenting with Redis when I suddenly needed to externalize the sessions from Apache Tomcat. Redis seemed like a great option for this. It took a bit of tweaking, but in the end everything ran smoothly. I decided to sketch the architecture to show how Redis can serve as a session store with really low latency. The diagram above depicts the overall setup.

Here's my explanation for the diagram above:

1. Any request coming from the internet is received by the web server first. The web server runs with a load balancer configured.

2. Multiple Tomcat instances run in the app layer, all connected to a common Redis server (I have not looked into Redis Cluster, so I am not covering it here).

3. Next we follow the traditional approach: the load balancer decides which Tomcat container each incoming request is sent to.

4. Since all the containers are connected to the same Redis server and session management is externalized, the sessions remain intact even if one Tomcat server goes down. The other Tomcat containers take over for the downed server, so users keep their sessions and the experience stays smooth. This model scales pretty well.
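To make the failover idea in the steps above concrete, here is a minimal sketch in plain Java. It is only a simulation under my own assumptions, not the actual Tomcat or Redis setup: `SharedSessionStore` is a hypothetical in-memory stand-in for the Redis server, `AppNode` stands in for a Tomcat instance, and `LoadBalancer` is a simple round-robin picker. None of these names come from a real library.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;

// Hypothetical stand-in for the shared Redis server: session id -> attributes.
class SharedSessionStore {
    private final Map<String, Map<String, String>> sessions = new ConcurrentHashMap<>();

    void put(String sessionId, String key, String value) {
        sessions.computeIfAbsent(sessionId, id -> new ConcurrentHashMap<>()).put(key, value);
    }

    String get(String sessionId, String key) {
        Map<String, String> attrs = sessions.get(sessionId);
        return attrs == null ? null : attrs.get(key);
    }
}

// Hypothetical stand-in for one Tomcat instance; it holds no session state itself.
class AppNode {
    final String name;
    private final SharedSessionStore store;
    boolean up = true;

    AppNode(String name, SharedSessionStore store) {
        this.name = name;
        this.store = store;
    }

    String login(String sessionId, String user) {
        store.put(sessionId, "user", user);
        return name + " stored session for " + user;
    }

    String whoAmI(String sessionId) {
        return name + " sees user " + store.get(sessionId, "user");
    }
}

// Round-robin load balancer that skips nodes marked as down.
class LoadBalancer {
    private final List<AppNode> nodes;
    private int next = 0;

    LoadBalancer(List<AppNode> nodes) {
        this.nodes = new ArrayList<>(nodes);
    }

    AppNode pick() {
        for (int i = 0; i < nodes.size(); i++) {
            AppNode candidate = nodes.get(next);
            next = (next + 1) % nodes.size();
            if (candidate.up) {
                return candidate;
            }
        }
        throw new IllegalStateException("no app node available");
    }
}

class SessionFailoverSketch {
    public static void main(String[] args) {
        SharedSessionStore store = new SharedSessionStore();
        AppNode a = new AppNode("tomcat-1", store);
        AppNode b = new AppNode("tomcat-2", store);
        LoadBalancer lb = new LoadBalancer(List.of(a, b));

        AppNode first = lb.pick();                        // first request lands on tomcat-1
        System.out.println(first.login("sess-42", "alice"));

        first.up = false;                                 // simulate tomcat-1 going down
        System.out.println(lb.pick().whoAmI("sess-42"));  // tomcat-2 still finds the session
    }
}
```

Because the nodes keep no session state of their own, the second request is served by `tomcat-2` and still finds the user stored by `tomcat-1`. In a real deployment the store would be Redis, reached through a client library or a Tomcat session manager, rather than an in-process map.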

This approach makes it easy to scale out the web application and is also easy to set up. I plan to write a detailed article about the setup later.