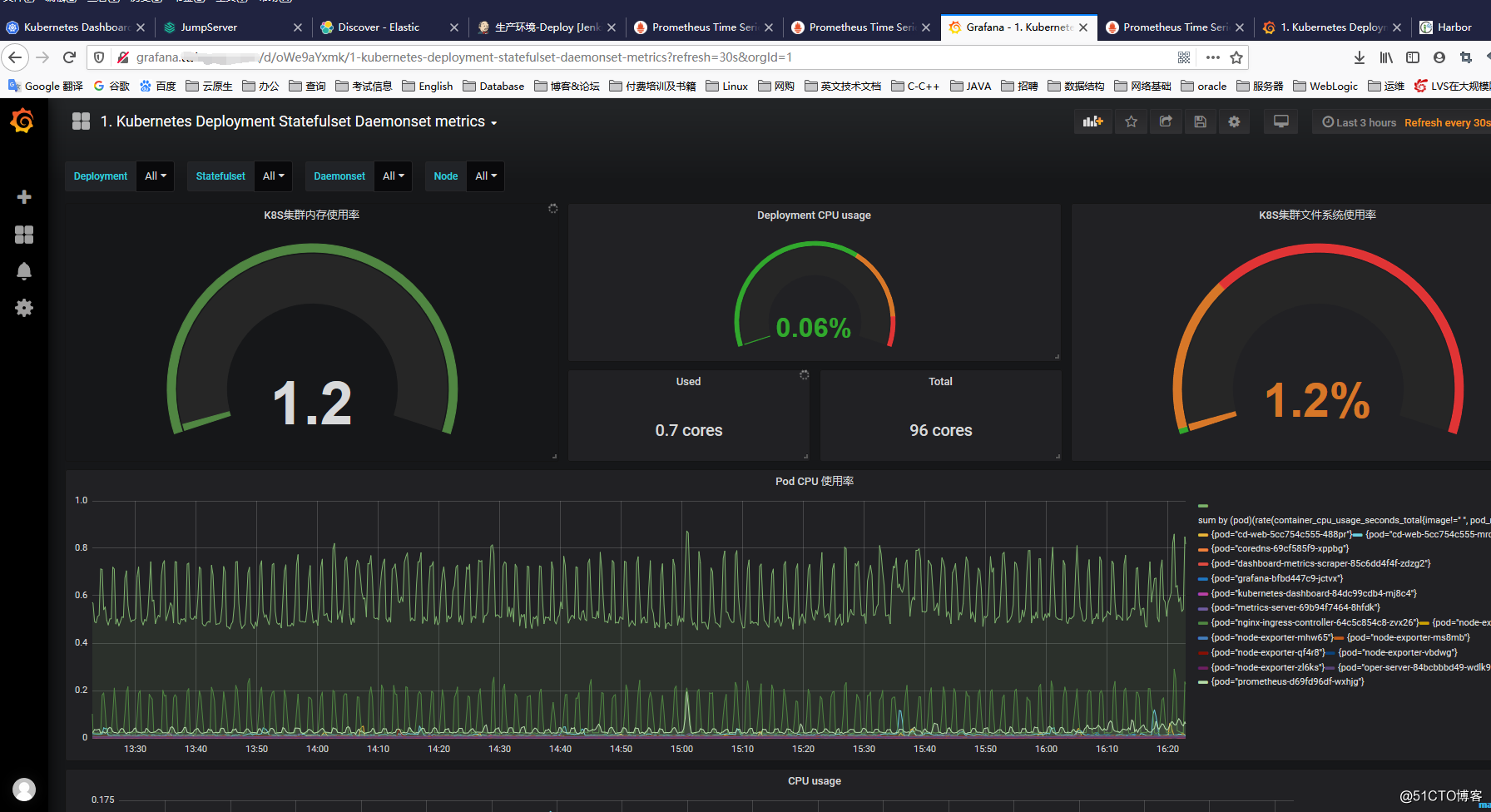

How do you monitor the Nodes and Pods in a K8S cluster? The solution most commonly used in the industry is Prometheus + Grafana.

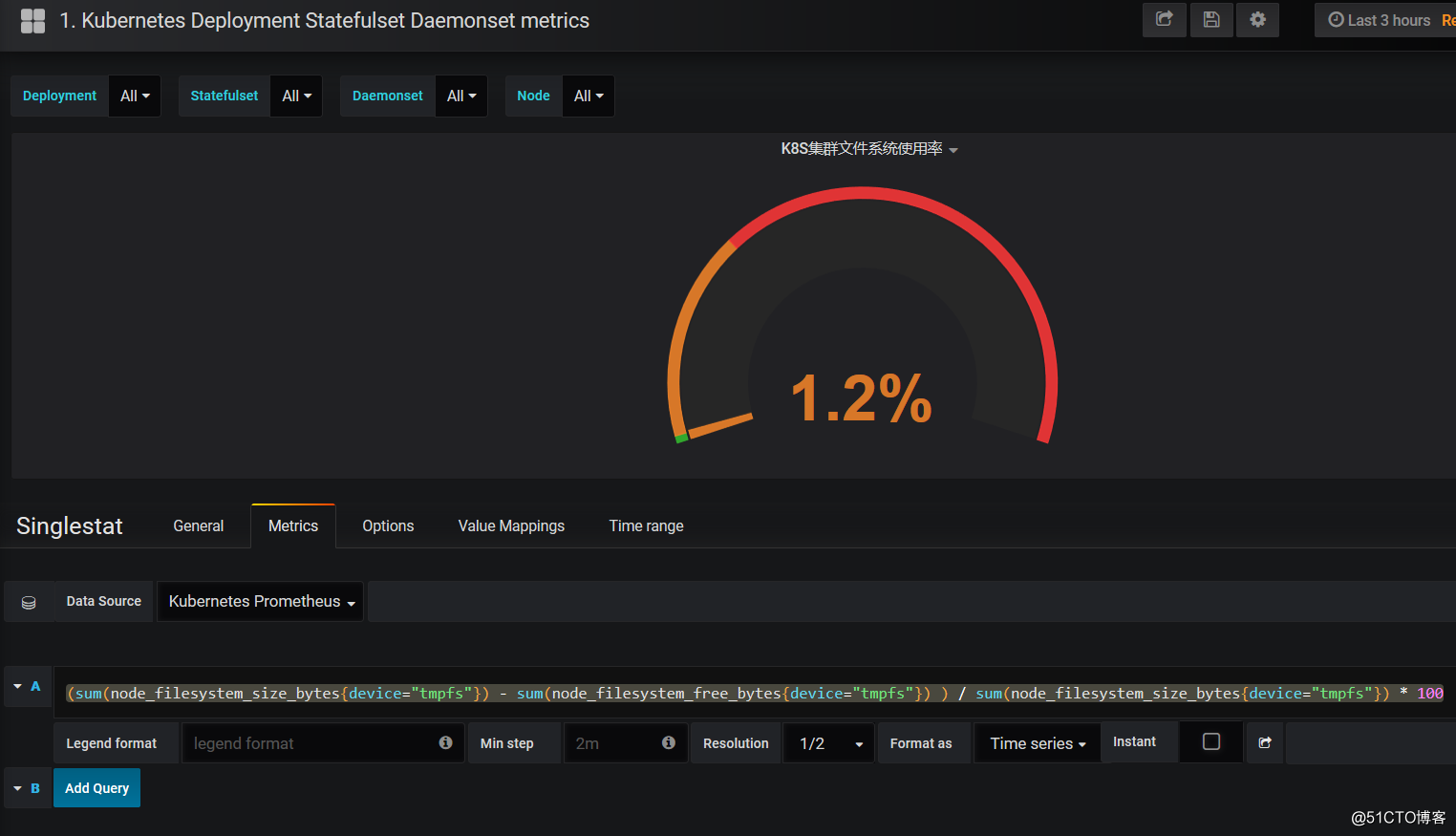

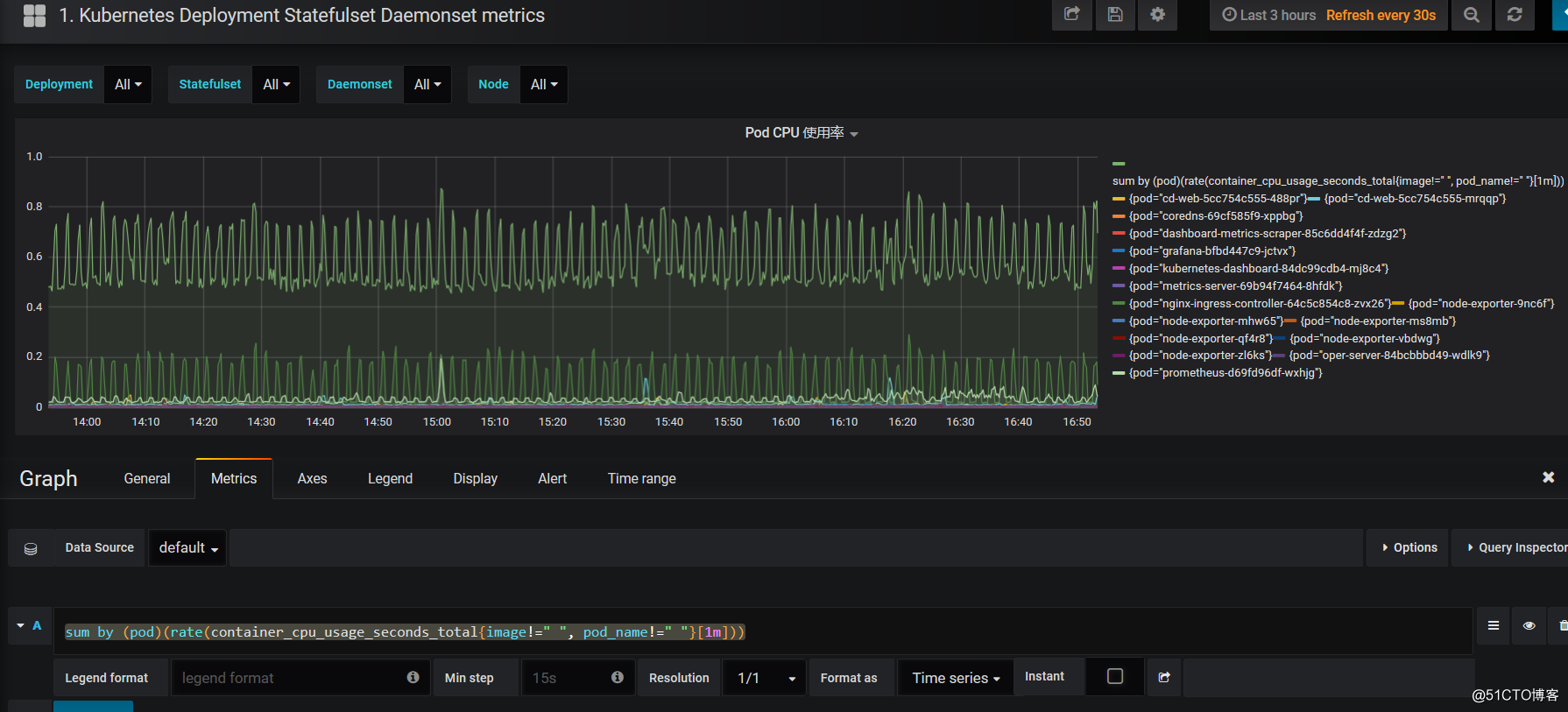

Let's first look at the overall result:

I. Prometheus Deployment

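#Create the ConfigMap. Prometheus uses port 9090 by default; because 9090 was already taken by another service in my environment, I expose it on a different port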

cat prometheus.configmap.yaml

apiVersion: v1
kind: ConfigMap
metadata:
  name: prometheus-config
  namespace: kube-system
data:
  prometheus.yml: |
    global:
      scrape_interval: 15s
      scrape_timeout: 15s
    scrape_configs:
    - job_name: 'prometheus'
      static_configs:
      - targets: ['localhost:9090']
    - job_name: 'kubernetes-node'
      kubernetes_sd_configs:
      - role: node
      relabel_configs:
      - source_labels: [__address__]
        regex: '(.*):10250'
        replacement: '${1}:9100'
        target_label: __address__
        action: replace
      - action: labelmap
        regex: __meta_kubernetes_node_label_(.+)
    - job_name: 'kubernetes-cadvisor'
      kubernetes_sd_configs:
      - role: node
      scheme: https
      tls_config:
        ca_file: /var/run/secrets/kubernetes.io/serviceaccount/ca.crt
      bearer_token_file: /var/run/secrets/kubernetes.io/serviceaccount/token
      relabel_configs:
      - action: labelmap
        regex: __meta_kubernetes_node_label_(.+)
      - target_label: __address__
        replacement: kubernetes.default.svc:443
      - source_labels: [__meta_kubernetes_node_name]
        regex: (.+)
        target_label: __metrics_path__
        replacement: /api/v1/nodes/${1}/proxy/metrics/cadvisor
    - job_name: kubernetes-apiservers
      kubernetes_sd_configs:
      - role: endpoints
      relabel_configs:
      - action: keep
        regex: default;kubernetes;https
        source_labels:
        - __meta_kubernetes_namespace
        - __meta_kubernetes_service_name
        - __meta_kubernetes_endpoint_port_name
      scheme: https
      tls_config:
        ca_file: /var/run/secrets/kubernetes.io/serviceaccount/ca.crt
        insecure_skip_verify: true
      bearer_token_file: /var/run/secrets/kubernetes.io/serviceaccount/token
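The relabel rules in this ConfigMap are easier to follow with concrete values. The sketch below imitates the `replace` and `keep` actions in plain Python; the function names and the sample address are hypothetical, and Python's `re` module only approximates the RE2 syntax Prometheus uses, so treat it as an illustration rather than a reimplementation.

```python
import re


def relabel_replace(value, regex, replacement):
    """Imitate the `replace` action for a single source value.

    Returns the new target value, or None when the regex does not match
    (Prometheus then leaves the target label unchanged).
    """
    match = re.fullmatch(regex, value)  # Prometheus anchors regexes at both ends
    if match is None:
        return None
    # Convert Prometheus-style ${1} references into Python's \g<1> syntax.
    template = re.sub(r"\$\{(\d+)\}", r"\\g<\1>", replacement)
    return match.expand(template)


def relabel_keep(values, regex, separator=";"):
    """Imitate the `keep` action: join the source label values, then match."""
    return re.fullmatch(regex, separator.join(values)) is not None


if __name__ == "__main__":
    # kubernetes-node job: kubelet address rewritten to the node-exporter port.
    print(relabel_replace("192.168.1.20:10250", r"(.*):10250", "${1}:9100"))
    # kubernetes-cadvisor job: node name turned into an API server proxy path.
    print(relabel_replace("node-1", r"(.+)", "/api/v1/nodes/${1}/proxy/metrics/cadvisor"))
    # kubernetes-apiservers job: only the default/kubernetes/https endpoint is kept.
    print(relabel_keep(["default", "kubernetes", "https"], "default;kubernetes;https"))
```

The first call shows why the `kubernetes-node` job ends up scraping port 9100: node discovery returns the kubelet address on 10250, and the rule swaps only the port.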

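#Every Node must mount the NFS share

1. Install nfs-utils on every Node.

2. On the NFS server, create the directory /data/k8s_data/prometheus/k8s-vloume and grant access to it in /etc/exports.

3. Mount the NFS share:

mount -t nfs 192.168.1.115:/data/k8s_data/prometheus/k8s-vloume /data/k8s_data/prometheus/

These steps can be rolled out to all nodes in one go with Ansible.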

#Declare the PV and create the PVC; Prometheus data is persisted to an NFS share on the internal network

cat prometheus-volume.yaml

apiVersion: v1
kind: PersistentVolume
metadata:
  name: prometheus
spec:
  capacity:
    storage: 10Gi
  accessModes:
  - ReadWriteOnce
  persistentVolumeReclaimPolicy: Recycle
  nfs:
    server: 192.168.1.115
    path: /data/k8s_data/prometheus/k8s-vloume
---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: prometheus
  namespace: kube-system
spec:
  accessModes:
  - ReadWriteOnce
  resources:
    requests:
      storage: 10Gi
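For the claim to bind to the volume, the PV has to offer at least the requested capacity and every requested access mode. The sketch below checks only those two conditions with hypothetical helper names; the real Kubernetes binder also considers things such as `storageClassName` and selectors, which are left out here.

```python
UNITS = {"Ki": 2**10, "Mi": 2**20, "Gi": 2**30, "Ti": 2**40}


def parse_quantity(quantity):
    """Convert a binary-suffixed quantity such as '10Gi' to bytes."""
    for suffix, factor in UNITS.items():
        if quantity.endswith(suffix):
            return int(quantity[: -len(suffix)]) * factor
    return int(quantity)  # no suffix: treat as a plain byte count


def can_bind(pv, pvc):
    """Return True when the PV satisfies the claim's size and access modes."""
    big_enough = parse_quantity(pv["capacity"]) >= parse_quantity(pvc["request"])
    modes_ok = set(pvc["accessModes"]) <= set(pv["accessModes"])
    return big_enough and modes_ok


if __name__ == "__main__":
    pv = {"capacity": "10Gi", "accessModes": ["ReadWriteOnce"]}
    pvc = {"request": "10Gi", "accessModes": ["ReadWriteOnce"]}
    print(can_bind(pv, pvc))
```

With the values from the manifests above (10Gi and ReadWriteOnce on both sides), both conditions hold.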

#Create the Deployment and the Service

cat prometheus.deploy.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: prometheus
  namespace: kube-system
  labels:
    app: prometheus
spec:
  selector:
    matchLabels:
      app: prometheus
  template:
    metadata:
      labels:
        app: prometheus
    spec:
      serviceAccountName: prometheus
      containers:
      - image: harbor.xxxxx.com/prom/prometheus:v2.4.3 # private registry address; replace it with your own registry or an image you can pull from Docker Hub
        name: prometheus
        command:
        - "/bin/prometheus"
        args:
        - "--config.file=/etc/prometheus/prometheus.yml"
        - "--storage.tsdb.path=/prometheus"
        - "--storage.tsdb.retention=30d"
        - "--web.enable-admin-api" # enables the admin HTTP API, which includes operations such as deleting time series
        - "--web.enable-lifecycle" # enables hot reload: an HTTP POST to localhost:9090/-/reload applies config changes immediately
        ports:
        - containerPort: 9090
          protocol: TCP
          name: http
        volumeMounts:
        - mountPath: "/prometheus"
          subPath: prometheus
          name: data
        - mountPath: "/etc/prometheus"
          name: config-volume
        resources:
          requests:
            cpu: 100m
            memory: 512Mi
          limits:
            cpu: 100m
            memory: 512Mi
      securityContext:
        runAsUser: 0
      volumes:
      - name: data
        persistentVolumeClaim:
          claimName: prometheus
      - configMap:
          name: prometheus-config
        name: config-volume
---
apiVersion: v1
kind: Service
metadata:
  namespace: kube-system
  name: prometheus
  labels:
    app: prometheus
spec:
  type: NodePort
  selector:
    app: prometheus
  ports:
  - port: 9091
    protocol: TCP
    targetPort: 9090
    nodePort: 39091 # outside the default NodePort range (30000-32767); requires a widened --service-node-port-range on the API server
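Because the Deployment passes `--web.enable-lifecycle`, the configuration can be reloaded over HTTP without restarting the Pod. The sketch below builds that request with the standard library; the helper names are hypothetical, and the base URL is an assumption that depends on where you reach Prometheus from. Note that the reload endpoint expects a POST (or PUT), not a GET.

```python
import urllib.request


def build_reload_request(base_url):
    """Build (but do not send) the POST request that triggers a config reload."""
    url = base_url.rstrip("/") + "/-/reload"
    return urllib.request.Request(url, data=b"", method="POST")


def reload_prometheus(base_url, timeout=5):
    """Send the reload request and return the HTTP status code."""
    request = build_reload_request(base_url)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return response.status


if __name__ == "__main__":
    # Only builds the request; call reload_prometheus() against a live server.
    request = build_reload_request("http://localhost:9090")
    print(request.get_method(), request.full_url)
```

Keep in mind that a ConfigMap change takes a little while to propagate into the mounted volume, so the reload should be triggered after the file inside the container has actually changed.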

#Create the RBAC authorization rules

cat prometheus-rbac.yaml

apiVersion: v1
kind: ServiceAccount
metadata:
  name: prometheus
  namespace: kube-system
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  name: prometheus
rules:
- apiGroups:
  - ""
  resources:
  - nodes
  - services
  - endpoints
  - pods
  - nodes/proxy
  verbs:
  - get
  - list
  - watch
- apiGroups:
  - ""
  resources:
  - configmaps
  - nodes/metrics
  verbs:
  - get
- nonResourceURLs:
  - /metrics
  verbs:
  - get
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: prometheus
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: prometheus
subjects:
- kind: ServiceAccount
  name: prometheus
  namespace: kube-system

#Apply the manifests

kubectl apply -f .

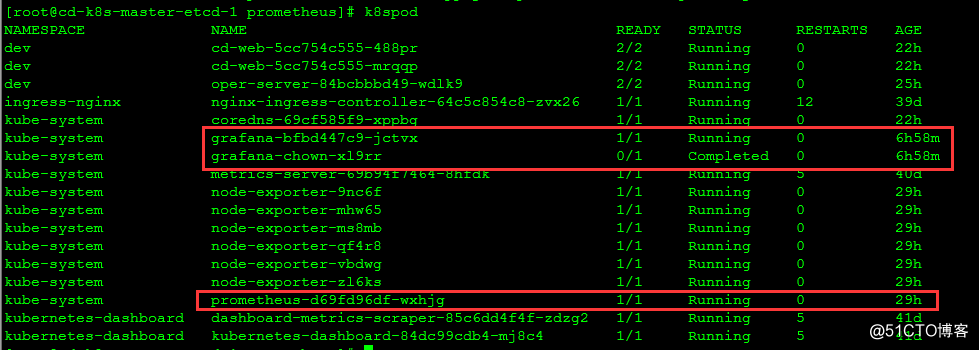

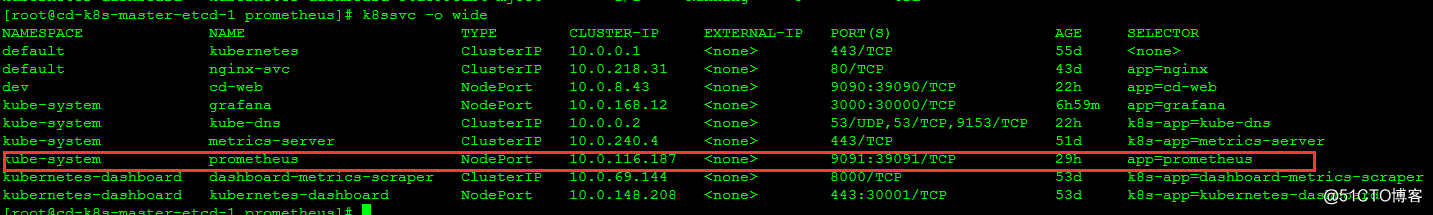

#Check the deployment status

#Check the Service status

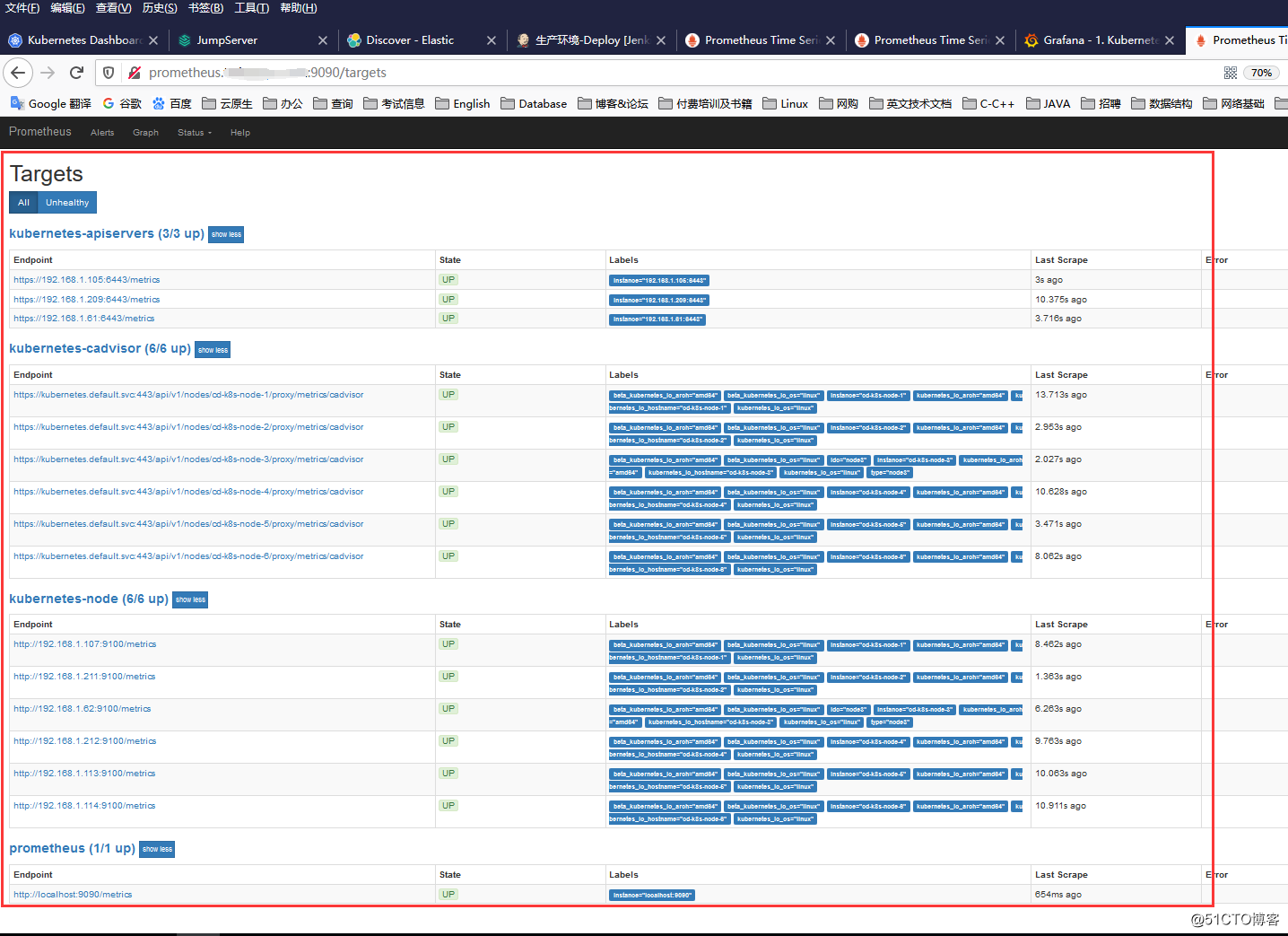

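#Verify access

#Here I bind an internal DNS name through nginx; you can also reach Prometheus directly via a Node IP plus the NodePort, or configure an Ingress

In my case I enter: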

http://prometheus.xxxx.com:9090/targets
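The same information shown on the targets page is available as JSON from the Prometheus HTTP API at `/api/v1/targets`. As a minimal sketch, assuming a response with the usual `data.activeTargets` list, the hypothetical helper below counts targets per job and health state; the sample payload is made up for illustration and is not output from the cluster in this post.

```python
from collections import Counter


def summarize_targets(payload):
    """Count active targets per (job, health) pair from an /api/v1/targets response."""
    counts = Counter()
    for target in payload["data"]["activeTargets"]:
        job = target["labels"].get("job", "unknown")
        counts[(job, target["health"])] += 1
    return dict(counts)


# Hand-written sample shaped like an /api/v1/targets response (illustrative only).
SAMPLE = {
    "status": "success",
    "data": {
        "activeTargets": [
            {"labels": {"job": "prometheus"}, "health": "up"},
            {"labels": {"job": "kubernetes-node"}, "health": "up"},
            {"labels": {"job": "kubernetes-node"}, "health": "down"},
        ]
    },
}

if __name__ == "__main__":
    for (job, health), count in sorted(summarize_targets(SAMPLE).items()):
        print(job, health, count)
```

A `down` entry for `kubernetes-node` before node-exporter is deployed is expected, since nothing is listening on port 9100 yet.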

#To monitor the K8S Nodes, deploy node-exporter; a DaemonSet is used here so that it runs on every Node

cat prometheus-node-exporter.yaml

apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: node-exporter
  namespace: kube-system
  labels:
    name: node-exporter
    k8s-app: node-exporter
spec:
  selector:
    matchLabels:
      name: node-exporter
  template:
    metadata:
      labels:
        name: node-exporter
        app: node-exporter
    spec:
      hostPID: true
      hostIPC: true
      hostNetwork: true
      containers:
      - name: node-exporter
        image: harbor.xxx.com/prom/node-exporter:v0.16.0
        ports:
        - containerPort: 9100
        resources:
          requests:
            cpu: 0.15
        securityContext:
          privileged: true
        args:
        - --path.procfs
        - /host/proc
        - --path.sysfs
        - /host/sys
        - --collector.filesystem.ignored-mount-points
        - '^/(sys|proc|dev|host|etc)($|/)'
        volumeMounts:
        - name: dev
          mountPath: /host/dev
        - name: proc
          mountPath: /host/proc
        - name: sys
          mountPath: /host/sys
        - name: rootfs
          mountPath: /rootfs
      tolerations:
      - key: "node-role.kubernetes.io/master"
        operator: "Exists"
        effect: "NoSchedule"
      volumes:
      - name: proc
        hostPath:
          path: /proc
      - name: dev
        hostPath:
          path: /dev
      - name: sys
        hostPath:
          path: /sys
      - name: rootfs
        hostPath:
          path: /

#Apply the manifest

kubectl apply -f prometheus-node-exporter.yaml

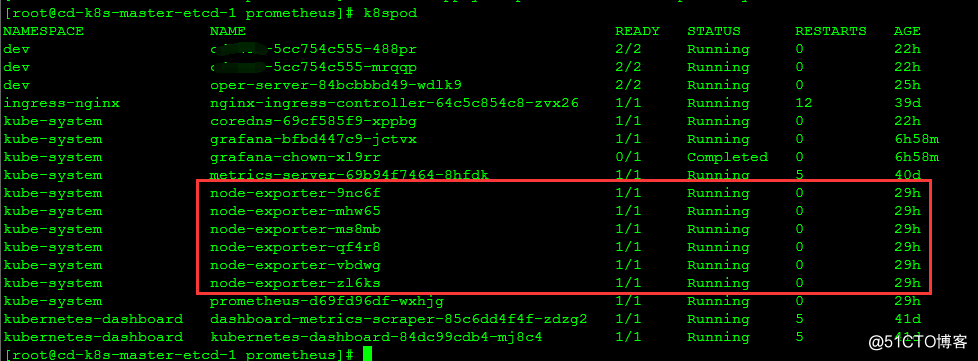

#Check the result

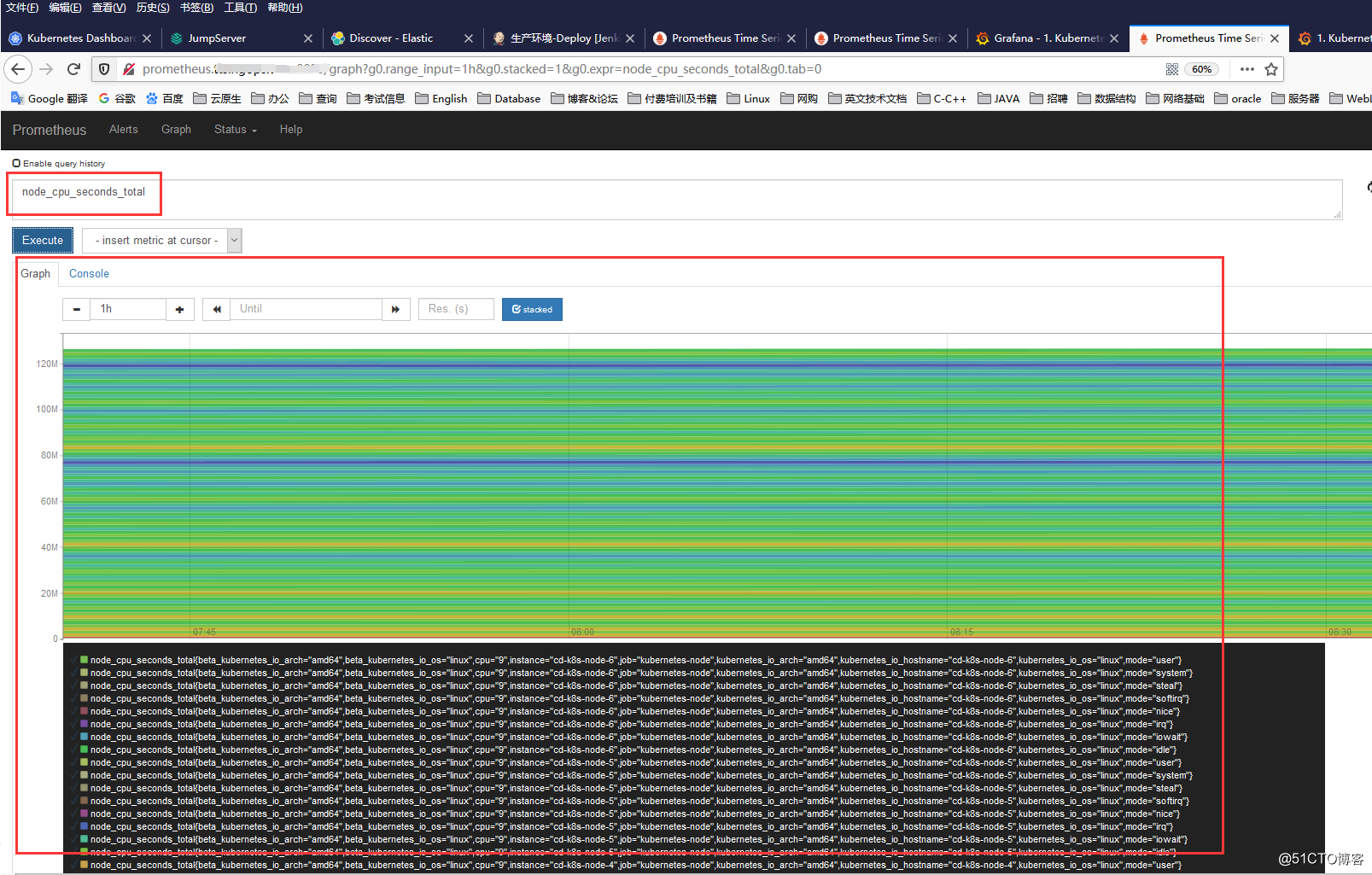

#Check the collected data
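node-exporter serves its data as plain text on port 9100 under `/metrics`, which is what Prometheus scrapes. The sketch below is a deliberately simplified parser for that text format, useful for eyeballing a few values; the function name and the sample text are hypothetical, and it assumes lines without trailing timestamps.

```python
def parse_metrics(text):
    """Parse exposition-format text into {series: value}, skipping comments."""
    samples = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):  # HELP/TYPE lines and blanks
            continue
        series, value = line.rsplit(" ", 1)  # the value is the last token
        samples[series] = float(value)
    return samples


# Hand-written sample in the shape node-exporter emits (values are made up).
SAMPLE = """\
# HELP node_load1 1m load average.
# TYPE node_load1 gauge
node_load1 0.25
# TYPE node_filesystem_size_bytes gauge
node_filesystem_size_bytes{device="tmpfs",mountpoint="/run"} 1048576
"""

if __name__ == "__main__":
    for series, value in parse_metrics(SAMPLE).items():
        print(series, value)
```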

II. Grafana Deployment

#Declare the PV and create the PVC

cat grafana_volume.yaml

apiVersion: v1
kind: PersistentVolume
metadata:
  name: grafana
spec:
  capacity:
    storage: 10Gi
  accessModes:
  - ReadWriteOnce
  persistentVolumeReclaimPolicy: Recycle
  nfs:
    server: 192.168.1.115
    path: /data/k8s_data/grafana
---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: grafana
  namespace: kube-system
spec:
  accessModes:
  - ReadWriteOnce
  resources:
    requests:
      storage: 10Gi

#Create the Deployment; it contains the Grafana admin username and password

cat grafana_deployment.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: grafana
  namespace: kube-system
  labels:
    app: grafana
    k8s-app: grafana
spec:
  selector:
    matchLabels:
      k8s-app: grafana
      app: grafana
  revisionHistoryLimit: 10
  template:
    metadata:
      labels:
        app: grafana
        k8s-app: grafana
    spec:
      containers:
      - name: grafana
        image: grafana/grafana:5.3.4
        imagePullPolicy: IfNotPresent
        ports:
        - containerPort: 3000
          name: grafana
        env:
        - name: GF_SECURITY_ADMIN_USER
          value: admin
        - name: GF_SECURITY_ADMIN_PASSWORD
          value: admin
        readinessProbe:
          failureThreshold: 10
          httpGet:
            path: /api/health
            port: 3000
            scheme: HTTP
          initialDelaySeconds: 60
          periodSeconds: 10
          successThreshold: 1
          timeoutSeconds: 30
        livenessProbe:
          failureThreshold: 3
          httpGet:
            path: /api/health
            port: 3000
            scheme: HTTP
          periodSeconds: 10
          successThreshold: 1
          timeoutSeconds: 1
        resources:
          limits:
            cpu: 300m
            memory: 1024Mi
          requests:
            cpu: 300m
            memory: 1024Mi
        volumeMounts:
        - mountPath: /var/lib/grafana
          subPath: grafana
          name: storage
      securityContext:
        fsGroup: 472
        runAsUser: 472
      volumes:
      - name: storage
        persistentVolumeClaim:
          claimName: grafana

#Create a one-off Job that fixes ownership of the Grafana data directory

cat grafana_job.yaml

apiVersion: batch/v1
kind: Job
metadata:
  name: grafana-chown
  namespace: kube-system
spec:
  template:
    spec:
      restartPolicy: Never
      containers:
      - name: grafana-chown
        command: ["chown", "-R", "472:472", "/var/lib/grafana"]
        image: harbor.xxxxx.com/busybox/busybox:1.28
        imagePullPolicy: IfNotPresent
        volumeMounts:
        - name: storage
          subPath: grafana
          mountPath: /var/lib/grafana
      volumes:
      - name: storage
        persistentVolumeClaim:
          claimName: grafana

#Create the Service

cat grafana_svc.yaml

apiVersion: v1
kind: Service
metadata:
  name: grafana
  namespace: kube-system
  labels:
    app: grafana
spec:
  type: NodePort
  selector:
    app: grafana
  ports:
  - port: 3000
    protocol: TCP
    targetPort: 3000
    nodePort: 30000
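When picking `nodePort` values by hand, it helps to check them against the range the API server accepts, which is 30000-32767 unless `--service-node-port-range` has been changed. The small sketch below assumes that default; the constant and function names are hypothetical.

```python
# Default NodePort range, assuming --service-node-port-range was not changed.
DEFAULT_NODE_PORT_RANGE = range(30000, 32768)


def in_default_range(node_port):
    """Return True when the port falls inside the default NodePort range."""
    return node_port in DEFAULT_NODE_PORT_RANGE


if __name__ == "__main__":
    # The two nodePort values used by the Services in this post.
    for port in (30000, 39091):
        print(port, in_default_range(port))
```

Grafana's 30000 sits inside the default range, while the 39091 chosen for Prometheus only works on a cluster whose range has been widened.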

#Apply the manifests

kubectl apply -f .

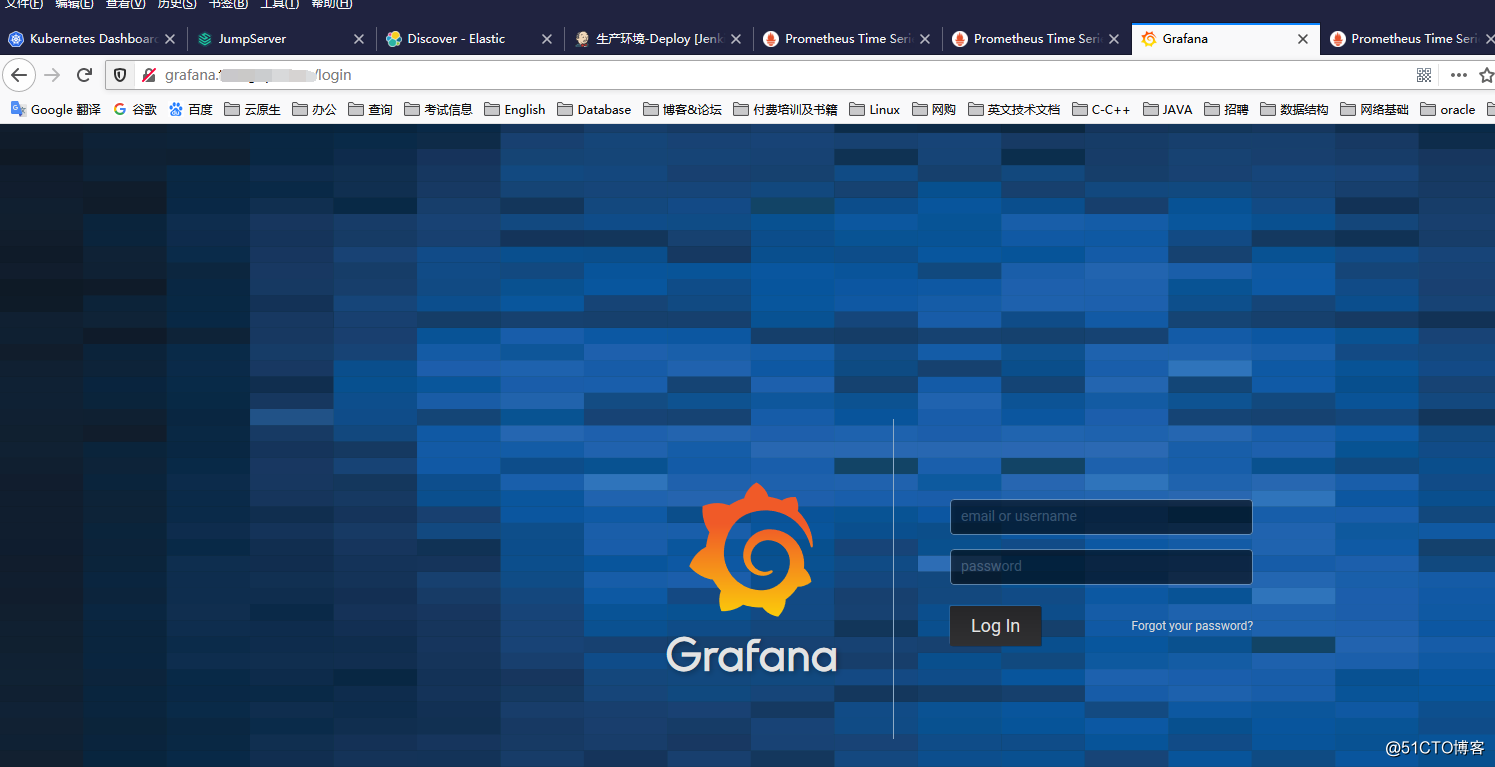

#Open Grafana; an internal DNS name and Nginx are used here as well

Log in with admin/admin, then set a new password

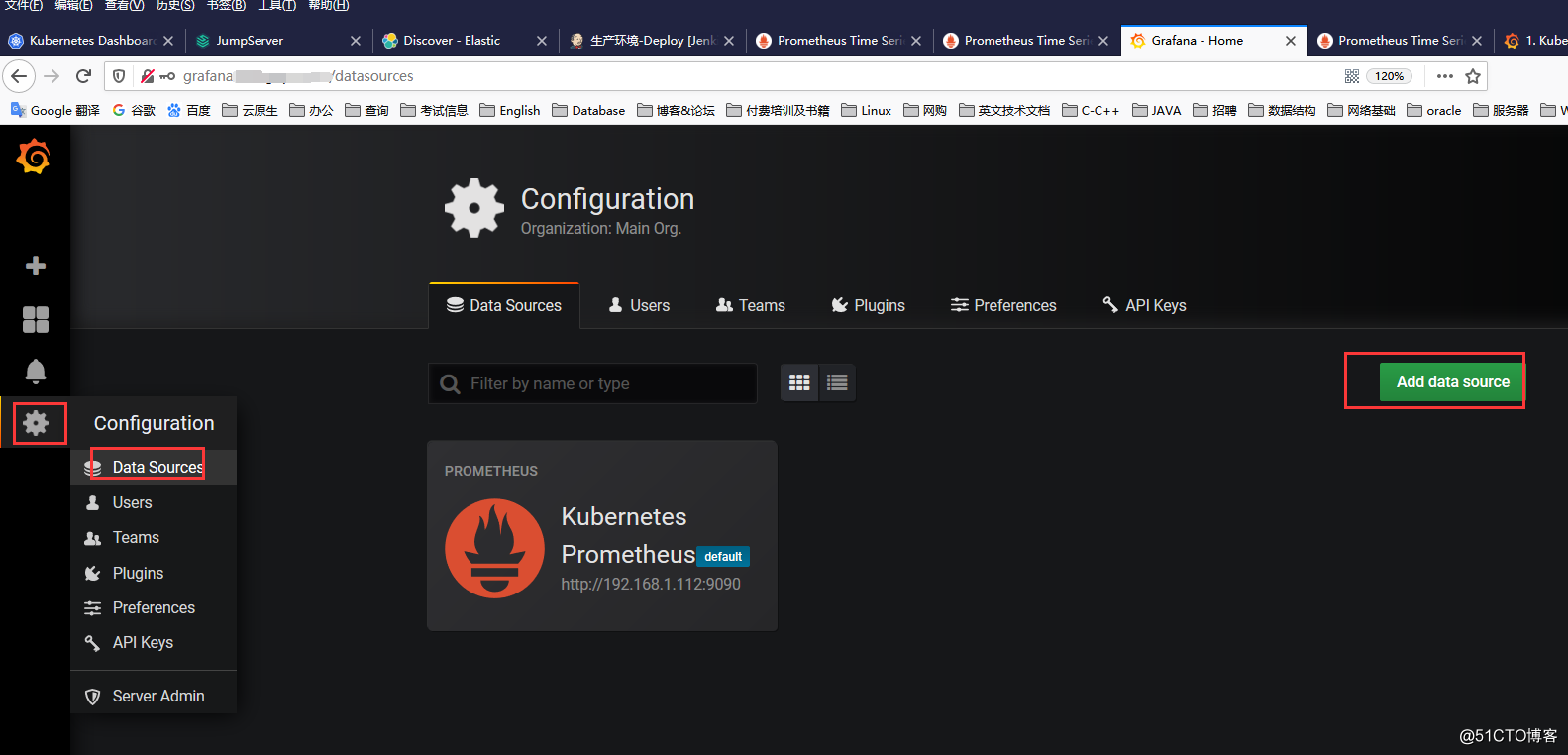

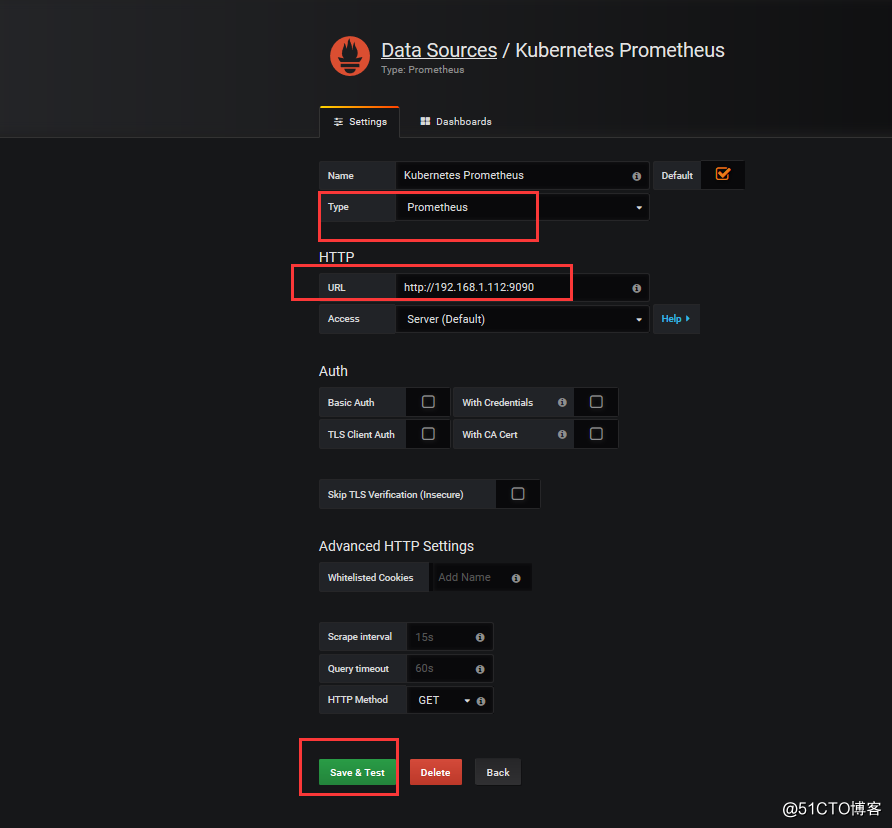

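#Add the Prometheus data source

Select Prometheus as the Type and fill in the URL (in my case the test kept failing when I entered the domain name, so in the end I entered the IP address instead)

Finally, click Save & Test to save it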
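Since Grafana and Prometheus run in the same cluster, one alternative to an IP address is the Service's in-cluster DNS name. The sketch below builds that URL and a data source payload of the shape Grafana's HTTP API accepts at `POST /api/datasources`; the helper names and the data source name are hypothetical, and the `cluster.local` domain is an assumption about the cluster's DNS setup.

```python
import json


def service_url(name, namespace, port, cluster_domain="cluster.local"):
    """Build the in-cluster DNS URL of a Service (cluster domain is assumed)."""
    return f"http://{name}.{namespace}.svc.{cluster_domain}:{port}"


def datasource_payload(name, url):
    """Build a Prometheus data source definition for Grafana's HTTP API."""
    return {
        "name": name,
        "type": "prometheus",
        "url": url,
        "access": "proxy",  # Grafana's backend, not the browser, contacts Prometheus
        "isDefault": True,
    }


if __name__ == "__main__":
    # Service name, namespace and port come from the Prometheus Service above.
    url = service_url("prometheus", "kube-system", 9091)
    print(json.dumps(datasource_payload("Prometheus", url), indent=2))
```

The same URL can be typed into the data source form by hand; with proxy access, name resolution happens inside the Grafana Pod, so a name that only resolves inside the cluster is fine.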

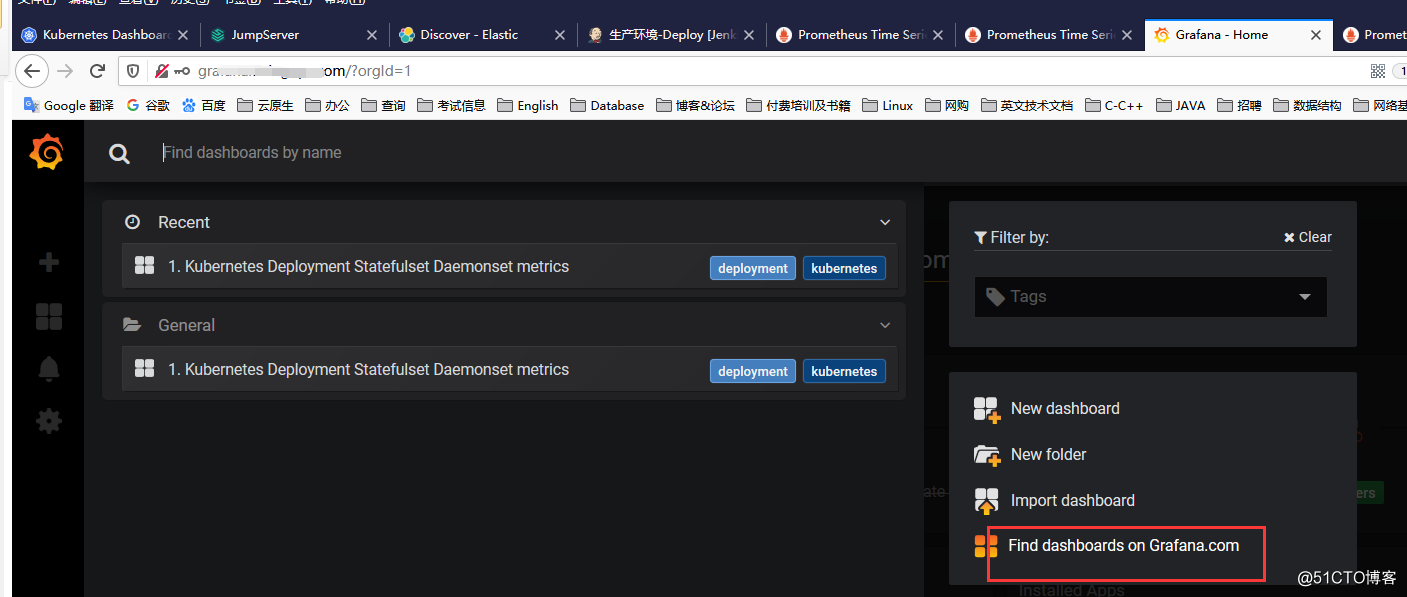

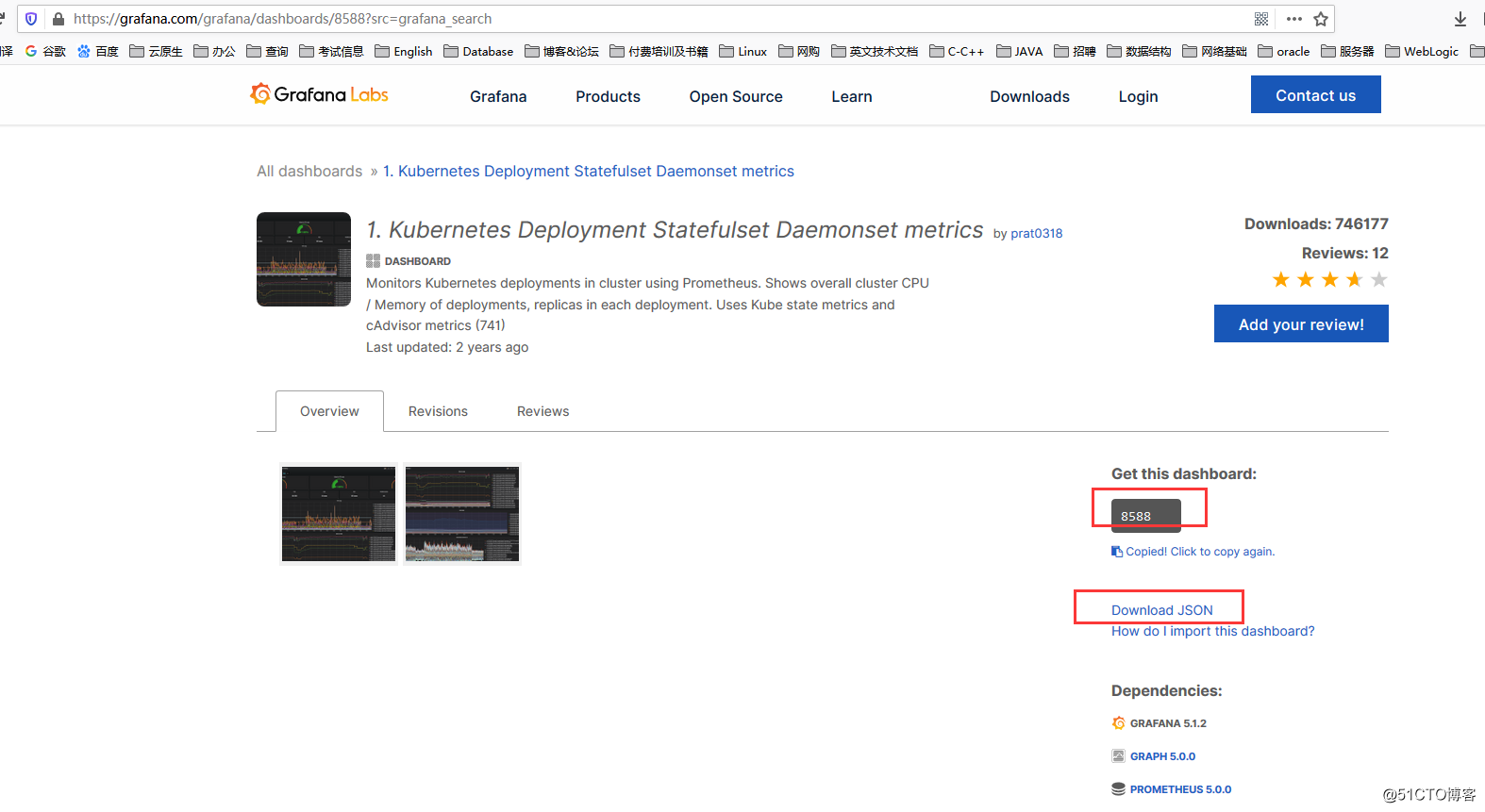

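III. Adding a Kubernetes Dashboard Template

Search for the "Kubernetes Deployment Statefulset Daemonset metrics" template and import it

Alternatively, download the template and import it from the file, or enter the template ID: 8858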

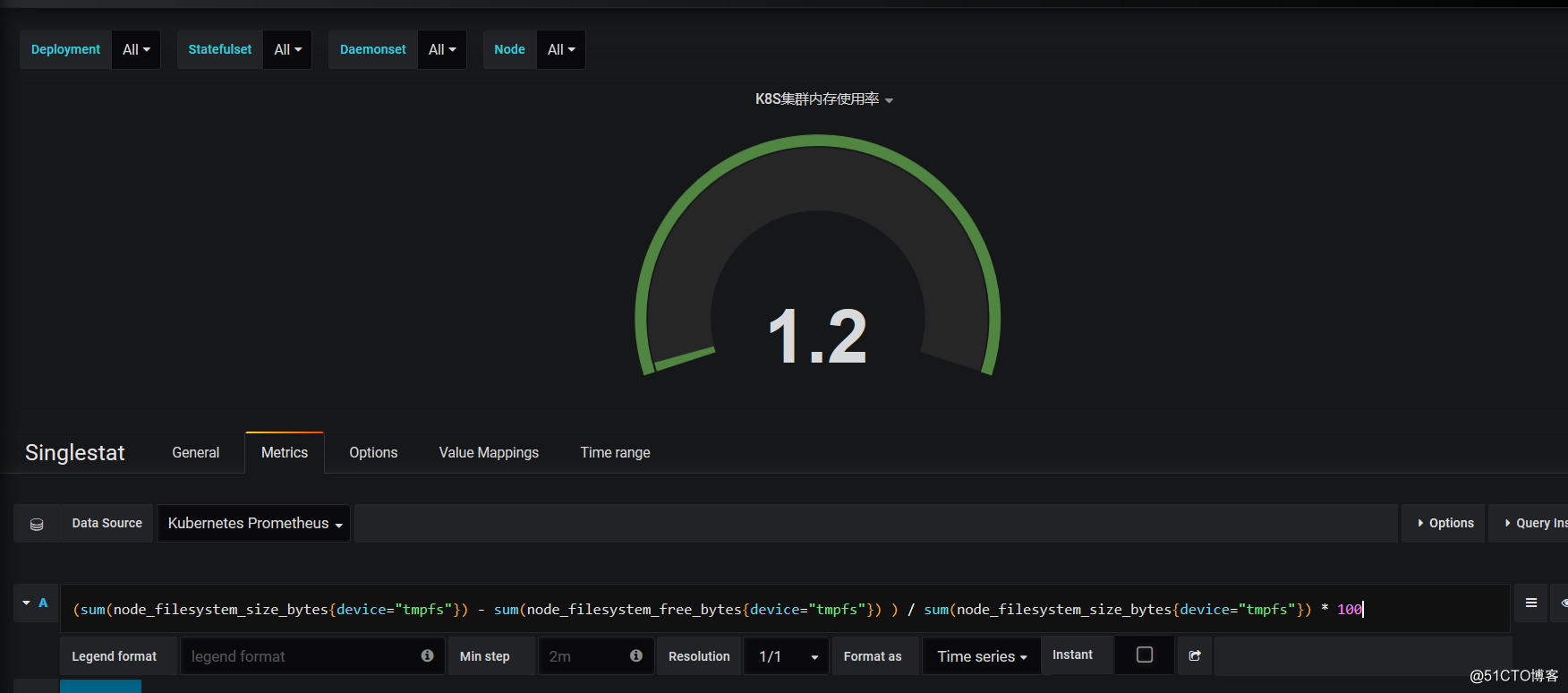

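#Configure the K8S cluster memory usage panel

(sum(node_filesystem_size_bytes{device="tmpfs"}) - sum(node_filesystem_free_bytes{device="tmpfs"}) ) / sum(node_filesystem_size_bytes{device="tmpfs"}) * 100

Note that this is the same tmpfs-based expression used for the file system panel below, so it only approximates memory use; node-exporter also exposes node_memory_MemTotal_bytes and node_memory_MemAvailable_bytes, which measure memory directly.

#Configure the K8S cluster file system usage panel

(sum(node_filesystem_size_bytes{device="tmpfs"}) - sum(node_filesystem_free_bytes{device="tmpfs"}) ) / sum(node_filesystem_size_bytes{device="tmpfs"}) * 100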

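#Configure the Pod CPU usage panel (on Kubernetes 1.16 and later the cAdvisor label is pod rather than pod_name)

sum by (pod_name) (rate(container_cpu_usage_seconds_total{image!="", pod_name!=""}[1m]))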
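To see what the CPU query computes, recall that `container_cpu_usage_seconds_total` is a counter of CPU seconds, so its per-second rate is the number of cores in use. The sketch below is a simplified version of that arithmetic with hypothetical function names and made-up samples; the real `rate()` also handles counter resets and extrapolates to the window edges, which is omitted here.

```python
from collections import defaultdict


def simple_rate(samples):
    """Per-second increase between the first and last (timestamp, value) sample."""
    if len(samples) < 2:
        return None  # a rate needs at least two points
    (t0, v0), (t1, v1) = samples[0], samples[-1]
    return (v1 - v0) / (t1 - t0)


def sum_by_pod(rates):
    """Add up per-container rates, keyed by (pod, container), into per-pod totals."""
    totals = defaultdict(float)
    for (pod, _container), value in rates.items():
        totals[pod] += value
    return dict(totals)


if __name__ == "__main__":
    # Counter samples scraped every 15s (made-up values, in CPU seconds).
    samples = [(0, 100.0), (15, 103.0), (30, 106.0), (45, 109.0)]
    print(simple_rate(samples))  # 9 CPU seconds over 45 s = 0.2 cores
    print(sum_by_pod({("web-1", "app"): 0.25, ("web-1", "sidecar"): 0.5}))
```

A result of 0.2 therefore means the container used about 20% of one core over the window, and summing by pod adds the containers of each pod together.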

The remaining panels are mostly a matter of writing PromQL. These queries pull metrics straight from Prometheus, so write them to fit your own needs
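Before putting a query into a panel, it can be tried directly against the Prometheus HTTP API at `/api/v1/query`. The sketch below only builds the request URL, taking care of the URL encoding that PromQL's braces and quotes need; the function name is hypothetical and the base URL is the placeholder address used earlier in this post.

```python
from urllib.parse import urlencode


def instant_query_url(base_url, promql):
    """Build the URL for an instant query against the Prometheus HTTP API."""
    return base_url.rstrip("/") + "/api/v1/query?" + urlencode({"query": promql})


if __name__ == "__main__":
    query = 'sum(node_filesystem_size_bytes{device="tmpfs"})'
    print(instant_query_url("http://prometheus.xxxx.com:9090", query))
```

Fetching that URL returns JSON with a `status` field and the result vector under `data`, which makes it easy to confirm a metric name or label exists before building a dashboard around it.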


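Follow-up ideas:

1. Add alerting (for example with Alertmanager) on top of the monitoring

2. Persist the data to a time-series database (such as OpenTSDB)

[References]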

https://grafana.com/docs/grafana/v5.3/features/datasources/prometheus/

https://prometheus.io/docs/concepts/data_model/#metric-names-and-labels