from keras.datasets import mnist

from keras.layers import Dense, Flatten, Conv2D, MaxPooling2D, Dropout

from keras.models import Sequential

from keras.optimizers import RMSprop

from keras.utils import to_categorical

img_rows, img_cols = 28, 28

(X_train, y_train), (X_test, y_test) = mnist.load_data()

# Add a trailing channel axis: (samples, 28, 28) -> (samples, 28, 28, 1)
X_train = X_train.reshape(X_train.shape[0], img_rows, img_cols, 1)

X_test = X_test.reshape(X_test.shape[0], img_rows, img_cols, 1)

input_shape = (img_rows, img_cols, 1)

# Scale pixel values from [0, 255] to [0, 1]
X_train = X_train.astype('float32') / 255.

X_test = X_test.astype('float32') / 255.

# One-hot encode the digit labels into 10 classes
Y_train = to_categorical(y_train, 10)

Y_test = to_categorical(y_test, 10)

model = Sequential()

model.add(Conv2D(32, kernel_size=(3, 3), activation='relu', input_shape=input_shape))

model.add(MaxPooling2D())

model.add(Dropout(0.2))

model.add(Conv2D(64, kernel_size=(3, 3), activation='relu'))

model.add(MaxPooling2D())

model.add(Dropout(0.2))

model.add(Conv2D(128, kernel_size=(3, 3), activation='relu'))

model.add(MaxPooling2D())

model.add(Flatten())

model.add(Dropout(0.2))

model.add(Dense(625, activation='relu'))

model.add(Dropout(0.5))

model.add(Dense(10, activation='softmax'))

opt = RMSprop()

model.compile(optimizer=opt, loss='categorical_crossentropy', metrics=['accuracy'])

model.summary()

history = model.fit(X_train, Y_train, epochs=10, batch_size=128, shuffle=True, verbose=2,
                    validation_data=(X_test, Y_test))

| Layer (type) | Output Shape | Param # |
| --- | --- | --- |
| conv2d_1 (Conv2D) | (None, 26, 26, 32) | 320 |
| max_pooling2d_1 (MaxPooling2D) | (None, 13, 13, 32) | 0 |
| dropout_1 (Dropout) | (None, 13, 13, 32) | 0 |
| conv2d_2 (Conv2D) | (None, 11, 11, 64) | 18496 |
| max_pooling2d_2 (MaxPooling2D) | (None, 5, 5, 64) | 0 |
| dropout_2 (Dropout) | (None, 5, 5, 64) | 0 |
| conv2d_3 (Conv2D) | (None, 3, 3, 128) | 73856 |
| max_pooling2d_3 (MaxPooling2D) | (None, 1, 1, 128) | 0 |
| flatten_1 (Flatten) | (None, 128) | 0 |
| dropout_3 (Dropout) | (None, 128) | 0 |
| dense_1 (Dense) | (None, 625) | 80625 |
| dropout_4 (Dropout) | (None, 625) | 0 |
| dense_2 (Dense) | (None, 10) | 6260 |

Total params: 179,557

Trainable params: 179,557

Non-trainable params: 0
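The parameter counts in the summary can be reproduced by hand. The sketch below assumes the usual counting rule (one weight per kernel element per input channel per filter, plus one bias per filter or unit); the helper names `conv2d_params` and `dense_params` are made up for illustration and are not part of Keras.

```python
def conv2d_params(kernel_h, kernel_w, in_channels, filters):
    """Kernel weights plus one bias per filter (hypothetical helper)."""
    return kernel_h * kernel_w * in_channels * filters + filters


def dense_params(in_units, out_units):
    """Weight matrix plus one bias per output unit (hypothetical helper)."""
    return in_units * out_units + out_units


counts = [
    conv2d_params(3, 3, 1, 32),    # conv2d_1
    conv2d_params(3, 3, 32, 64),   # conv2d_2
    conv2d_params(3, 3, 64, 128),  # conv2d_3
    dense_params(128, 625),        # dense_1
    dense_params(625, 10),         # dense_2
]

print(counts)       # [320, 18496, 73856, 80625, 6260]
print(sum(counts))  # 179557
```

Pooling, dropout and flatten layers have no trainable weights, which is why they show 0 in the summary.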

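A convolutional neural network adds convolutional layers and pooling layers on top of a plain deep neural network. The first layer of this network is a 2D convolutional layer: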

Conv2D(32, kernel_size=(3, 3), activation='relu', input_shape=input_shape)

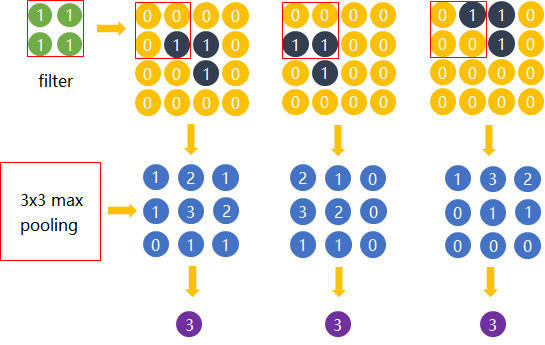

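This layer has 32 convolution kernels (filters), which is also the number of output channels. The kernel's spatial size is 3×3, meaning a 3×3 window slides over the input to compute the convolution, and the activation function is ReLU. Next comes MaxPooling2D, a 2D pooling layer used mainly to reduce the spatial dimensions while keeping the strongest features. With its default 2×2 pool size it halves the width and height, which is why the summary goes from (None, 26, 26, 32) to (None, 13, 13, 32).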
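The output shapes in the summary follow from simple arithmetic. This is a minimal sketch assuming the Keras defaults used by the model above: `padding='valid'` and stride 1 for Conv2D, and a 2×2 pool with stride 2 for MaxPooling2D. The function names are hypothetical.

```python
def conv_valid(size, kernel=3):
    """Spatial size after a stride-1 convolution with no padding."""
    return size - kernel + 1


def max_pool(size, pool=2):
    """Spatial size after non-overlapping pooling (leftover rows/columns are dropped)."""
    return size // pool


size = 28            # MNIST images are 28x28
trace = [size]
for _ in range(3):   # three Conv2D + MaxPooling2D blocks
    size = conv_valid(size)
    trace.append(size)
    size = max_pool(size)
    trace.append(size)

print(trace)  # [28, 26, 13, 11, 5, 3, 1]
```

The sequence matches the summary: 26 and 13 after the first block, 11 and 5 after the second, 3 and 1 after the third.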

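After three consecutive convolution and pooling blocks, the model uses a Flatten layer. This layer collapses multi-dimensional data into one dimension per sample without changing the total amount of data or the batch size. In the summary, it turns (None, 1, 1, 128) into (None, 128).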
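What Flatten does to the shapes can be imitated with a NumPy reshape. This is only an illustration, not how Keras implements the layer; the batch size of 4 is an arbitrary assumption standing in for `None`.

```python
import numpy as np

# Hypothetical batch of 4 feature maps shaped like the output of max_pooling2d_3.
batch = np.zeros((4, 1, 1, 128), dtype="float32")

# Keep the batch axis and merge every remaining axis into one.
flat = batch.reshape(batch.shape[0], -1)

print(batch.shape, "->", flat.shape)  # (4, 1, 1, 128) -> (4, 128)
```

The batch axis is untouched and the number of values per sample stays at 128.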

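Finally, the call to fit includes a new parameter, shuffle=True. It is enabled by default, so it can be omitted. When it is True, the training set is randomly shuffled before each epoch.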
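The effect of per-epoch shuffling can be sketched with NumPy. This is an assumption-laden illustration of the idea, not Keras's internal code: `epoch_batches` is a hypothetical helper, and the sample count, batch size and seed are arbitrary.

```python
import numpy as np


def epoch_batches(num_samples, batch_size, rng):
    """Yield index batches for one epoch, drawn from a fresh random order."""
    order = rng.permutation(num_samples)
    for start in range(0, num_samples, batch_size):
        yield order[start:start + batch_size]


rng = np.random.default_rng(0)  # fixed seed only to make runs repeatable
for epoch in range(2):
    batches = list(epoch_batches(10, 4, rng))
    print(epoch, [b.tolist() for b in batches])
```

Every sample is still visited exactly once per epoch; only the order, and therefore the composition of each batch, changes between epochs.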