Goal:

Use the requests library and XPath parsing to scrape every course on Shiyanlou (实验楼, shiyanlou.com) and store the results in a MySQL database.

Preparation:

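First install the requests, lxml and peewee libraries. peewee's MySQLDatabase also needs a MySQL driver such as PyMySQL. Run the following commands on the command line:

pip install requests

pip install lxml

pip install peewee

pip install pymysql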

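Next, make sure the MySQL server is running and that the shiyanlou database exists (peewee creates the table, but not the database itself). Then you can open your editor and start writing the code.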
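Before writing the crawler, you can try the two XPath idioms it relies on against a small inline snippet. The markup below is hypothetical: it only mimics the class names the crawler queries, and the live pages may look different. It also shows one caveat of `contains(@class, "course")`: on its own it matches any class that contains the substring, such as `course-name`. The crawler avoids this by also requiring `col-md-3` and `col-sm-6`. Padding the class string with spaces is another common fix.

```python
from lxml import etree

# Hypothetical, simplified markup that only mimics the class names the crawler uses
SAMPLE_HTML = """
<html><body>
  <div class="col-md-3 col-sm-6 course">
    <a href="/courses/1">open</a>
    <div class="course-name">Python Basics</div>
  </div>
  <div class="col-md-3 col-sm-6 course">
    <a href="/courses/2">open</a>
    <div class="course-name">Linux Basics</div>
  </div>
  <ul class="pagination">
    <li><a>1</a></li>
    <li><a>2</a></li>
    <li><a>3</a></li>
    <li><a>next</a></li>
  </ul>
</body></html>
"""

root = etree.HTML(SAMPLE_HTML)

# Substring match: also picks up the inner "course-name" divs
naive = root.xpath('//div[contains(@class, "course")]')

# Whole-word match: pad the normalized class string with spaces, then look for " course "
cards = root.xpath(
    '//div[contains(concat(" ", normalize-space(@class), " "), " course ")]')
names = [str(card.xpath('.//div[@class="course-name"]/text()')[0]) for card in cards]

# li[last()-1] selects the second-to-last li child of the pagination list
max_page = int(root.xpath('//ul[@class="pagination"]/li[last()-1]/a/text()')[0])

print(len(naive), len(cards))  # 4 2
print(names)
print(max_page)  # 3
```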

Code:

1 # 引入所需要的库

2 import time

3 import requests

4 from peewee import *

5 from lxml import etree

6 # 这个程序要倒着看

7

8 # 这个是连接数据库的,名字和密码根据自己情况修改

9 db = MySQLDatabase('shiyanlou', user='root', passwd='xxxxxx')

10

11

12 class Course(Model):

13 title = CharField()

14 teacher = CharField()

15 teacher_courses = IntegerField()

16 tag = CharField()

17 study_num = IntegerField()

18 content = CharField()

19

20 class Meta:

21 database = db

22

23

24 Course.create_table()

25

26

27 def parse_content(url, title, tag, study_num):

28 print('课程地址:' + url)

29 res = requests.get(url)

30 xml = etree.HTML(res.text)

31 # 获取页面里的简介

32 try:

33 content = xml.xpath('//meta[@name="description"]/@content')[0]

34 except Exception as e:

35 content = '无'

36 # 获取老师名字

37 try:

38 teacher = xml.xpath(

39 '//div[@class="sidebox mooc-teacher"]//div[@class="mooc-info"]/div[@class="name"]/strong/text()')[0]

40 except Exception as e:

41 teacher = '匿名'

42 # 获取老师发表课程数目

43 try:

44 teacher_courses = xml.xpath(

45 '//div[@class="sidebox mooc-teacher"]//div[@class="mooc-info"]/div[@class="courses"]/strong/text()')[0]

46 except Exception as e:

47 teacher_courses = '未知'

48 # 存入数据库

49 try:

50 course = Course(title=title, teacher=teacher,

51 teacher_courses=int(teacher_courses), tag=tag, study_num=int(study_num), content=content)

52 course.save()

53 except Exception as e:

54 print('一条数据存取失败')

55

56

57 def get_course_link(url):

58 # 获取每一页的信息,传给下一个函数

59 response = requests.get(url)

60 xml = etree.HTML(response.text)

61 # contains()是包含的意思

62 courses = xml.xpath(

63 '//div[contains(@class, "col-md-3") and contains(@class, "col-sm-6") and contains(@class, "course")]')

64 for course in courses:

65 try:

66 url = 'https://www.shiyanlou.com' + course.xpath('.//a/@href')[0]

67 except Exception as e:

68 print('一个课程页面未获得')

69 continue

70 title = course.xpath('.//div[@class="course-name"]/text()')[0]

71 study_people = course.xpath(

72 './/span[@class="course-per-num pull-left"]/text()')[1].strip()

73 # study_people = int(study_people)

74 try:

75 tag = course.xpath(

76 './/span[@class="course-money pull-right"]/text()')[0]

77 except Exception as e:

78 tag = "普通"

79 parse_content(url=url, title=title, tag=tag, study_num=study_people)

80 # time.sleep(0.5)

81

82

83 def main():

84 # 通过requests库的get获得目标地址的返回信息,类型为Response

85 response = requests.get('https://www.shiyanlou.com/courses/')

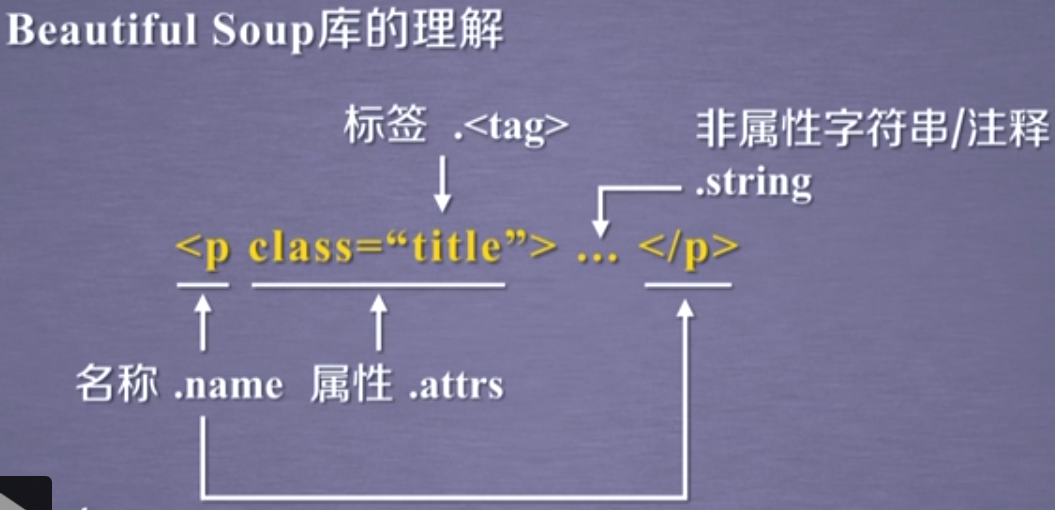

86 # 将返回信息的文本转化为xml树,可以通过xpath来进行查询

87 xml = etree.HTML(response.text)

88 # 由分析网页源代码可以总结,url分页模式,只有最后的数字不一样

89 course_link = 'https://www.shiyanlou.com/courses/?category=all&course_type=all&fee=all&tag=all&page={}'

90 # 这里获得最大页数就可以了,xpath()函数里的便是寻找路径了

91 # //会在全文来进行查找,//ul则是查找全文的ul标签,//ul[@class="pagination"]会仅查找有class属性,

92 # 且为"pagination"的标签,之后/li是查找当前的ul标签下的li标签(仅取一层),取查询到的列表倒数第二个标签

93 # 为li[last()-1],/a/text()查询a标签里的文本内容

94 page = xml.xpath('//ul[@class="pagination"]/li[last()-1]/a/text()')

95 if len(page) != 1:

96 print('爬取最大页数时发生错误!!')

97 return None

98 # page原是一个列表,这里取出它的元素,并转化为Int型

99 page = int(page[0])

100 # 将每一页的url传给get_course_link函数进行处理

101 for i in range(1, page + 1):

102 # 填入course_link,获取完整url

103 url = course_link.format(i)

104 print('页面地址:' + url)

105 # 调用另一个函数

106 get_course_link(url)

107

108

109 if __name__ == '__main__':

110 # 调用main函数

111 main()

112

113 # [Finished in 218.5s]