Let's start with the few dependencies we need.

Configuration file

    spring:
      application:
        name: redis-caching
      datasource:
        driver-class-name: com.mysql.cj.jdbc.Driver
        url: jdbc:mysql://127.0.0.1:3306/redis_caching?useSSL=FALSE&serverTimezone=GMT%2B8
        username: root
        password: ****
        type: com.alibaba.druid.pool.DruidDataSource
        filters: stat
        maxActive: 20
        initialSize: 1
        maxWait: 60000
        minIdle: 1
        timeBetweenEvictionRunsMillis: 60000
        minEvictableIdleTimeMillis: 300000
        validationQuery: select 'x'
        testWhileIdle: true
        testOnBorrow: false
        testOnReturn: false
        poolPreparedStatements: true
        maxOpenPreparedStatements: 20
      redis:
        host: 127.0.0.1
        port: 6379
        password: ****
        timeout: 10000
        lettuce:
          pool:
            min-idle: 0
            max-idle: 8
            max-active: 8
            max-wait: -1
    server:
      port: 8080
    # mybatis
    mybatis-plus:
      mapper-locations: classpath*:/mybatis-mappers/*
      # entity scanning; separate multiple packages with commas or semicolons
      typeAliasesPackage: com.guanjian.rediscaching.model
      global-config:
        # database-related settings
        db-config:
          # primary key type. AUTO: database auto-increment ID, INPUT: user-supplied ID,
          # ID_WORKER: globally unique numeric ID, UUID: globally unique UUID
          id-type: INPUT
          logic-delete-value: -1
          logic-not-delete-value: 0
        banner: false
      # native MyBatis settings
      configuration:
        map-underscore-to-camel-case: true
        cache-enabled: false
        call-setters-on-nulls: true
        jdbc-type-for-null: 'null'

Configuration classes

    @Configuration
    @EnableCaching
    public class RedisConfig extends CachingConfigurerSupport {

        @Value("${spring.redis.host}")
        private String host;

        @Value("${spring.redis.port}")
        private int port;

        @Value("${spring.redis.password}")
        private String password;

        @Bean
        public KeyGenerator wiselyKeyGenerator() {
            return new KeyGenerator() {
                @Override
                public Object generate(Object target, Method method, Object... params) {
                    StringBuilder sb = new StringBuilder();
                    sb.append(target.getClass().getName());
                    sb.append(method.getName());
                    for (Object obj : params) {
                        sb.append(obj.toString());
                    }
                    return sb.toString();
                }
            };
        }

        @Bean
        public JedisConnectionFactory redisConnectionFactory() {
            JedisConnectionFactory factory = new JedisConnectionFactory();
            factory.setHostName(host);
            factory.setPort(port);
            factory.setPassword(password);
            return factory;
        }

        @Bean
        public CacheManager cacheManager(RedisConnectionFactory factory) {
            RedisCacheManager cacheManager = RedisCacheManager.create(factory);
            // Number of seconds before expiration. Defaults to unlimited (0)
            // cacheManager.setDefaultExpiration(10); // sets the key-value expiration time
            return cacheManager;
        }

        @Bean
        public RedisTemplate<String, String> redisTemplate(RedisConnectionFactory factory) {
            StringRedisTemplate template = new StringRedisTemplate(factory);
            // configure the serializer so that ReportBean need not implement Serializable
            setSerializer(template);
            template.afterPropertiesSet();
            return template;
        }

        private void setSerializer(StringRedisTemplate template) {
            Jackson2JsonRedisSerializer jackson2JsonRedisSerializer = new Jackson2JsonRedisSerializer(Object.class);
            ObjectMapper om = new ObjectMapper();
            om.setVisibility(PropertyAccessor.ALL, JsonAutoDetect.Visibility.ANY);
            om.enableDefaultTyping(ObjectMapper.DefaultTyping.NON_FINAL);
            jackson2JsonRedisSerializer.setObjectMapper(om);
            template.setValueSerializer(jackson2JsonRedisSerializer);
        }
    }

    @Configuration
    public class DruidConfig {

        @ConfigurationProperties(prefix = "spring.datasource")
        @Bean
        @Primary
        public DataSource dataSource() {
            return new DruidDataSource();
        }
    }

Entity class

    @Data
    @TableName("city")
    public class City implements Serializable {

        @TableId
        private Integer id;

        private String name;
    }

dao

    @Mapper
    public interface CityDao extends BaseMapper

service

    public interface CityService extends IService

    @Service
    public class CityServiceImpl extends ServiceImpl<CityDao,City> implements CityService {
    }

controller

    @RestController
    public class CityController {

        @Autowired
        private CityService cityService;

        @GetMapping("/findbyid")
        @Cacheable(cacheNames = "city_info", key = "#id")
        public City findCityById(@RequestParam("id") int id) {
            return cityService.getById(id);
        }

        @PostMapping("/save")
        @CachePut(cacheNames = "city_info", key = "#city.id")
        public City saveCity(@RequestBody City city) {
            cityService.save(city);
            return city;
        }

        @GetMapping("/deletebyid")
        @CacheEvict(cacheNames = "city_info", key = "#id")
        public boolean deleteCityById(@RequestParam("id") int id) {
            return cityService.removeById(id);
        }

        @PostMapping("/update")
        @CachePut(cacheNames = "city_info", key = "#city.id")
        public City updateCity(@RequestBody City city) {
            City cityQuery = new City();
            cityQuery.setId(city.getId());
            QueryWrapper

Testing

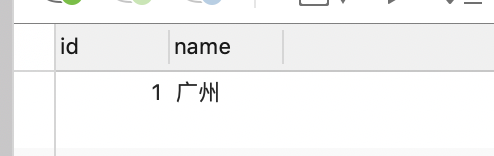

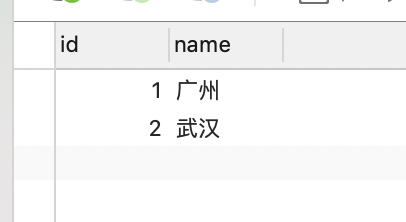

我们在数据库中有一个city的表,其中有一条数据

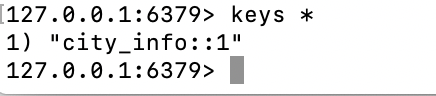

而redis中任何数据都没有

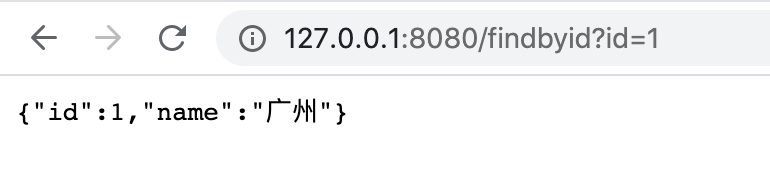

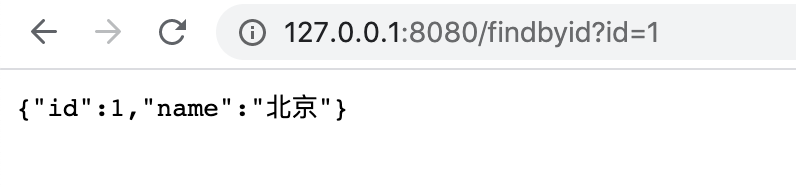

Now we call the first REST endpoint.

The backend log shows:

2020-09-30 06:06:12.919 DEBUG 1321 --- [nio-8080-exec-4] c.g.rediscaching.dao.CityDao.selectById : ==> Preparing: SELECT id,name FROM city WHERE id=?

2020-09-30 06:06:12.920 DEBUG 1321 --- [nio-8080-exec-4] c.g.rediscaching.dao.CityDao.selectById : ==> Parameters: 1(Integer)

2020-09-30 06:06:12.945 DEBUG 1321 --- [nio-8080-exec-4] c.g.rediscaching.dao.CityDao.selectById : <== Total: 1

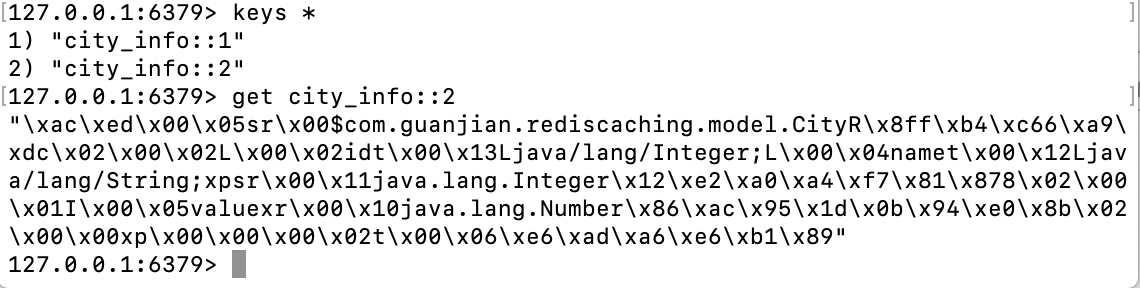

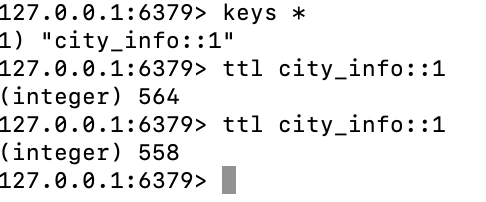

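Looking in Redis now, we see the following.

As you can see, the data was stored in Redis without our writing a single line of Redis code.

Now we run the same query again.

This time the backend log prints no SQL statement, which shows that the second query was served from Redis rather than from MySQL.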
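
To make the behaviour above concrete, here is a minimal in-memory sketch of the read path that `@Cacheable` provides. It is an illustration only, not Spring's implementation: the class `ReadThroughCacheSketch`, its methods, and the fake database loader are all hypothetical names, and a `ConcurrentHashMap` stands in for Redis. The `cacheName::id` key layout is the one this post uses for its `city_info` entries.

```java
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.atomic.AtomicInteger;
import java.util.function.IntFunction;

/** Hypothetical in-memory stand-in for the Redis-backed cache; not Spring's implementation. */
class ReadThroughCacheSketch {
    private final Map<String, Object> store = new ConcurrentHashMap<>();
    private final String cacheName;

    ReadThroughCacheSketch(String cacheName) {
        this.cacheName = cacheName;
    }

    /** Builds the key in the "cacheName::id" layout, e.g. city_info::1. */
    String keyFor(int id) {
        return cacheName + "::" + id;
    }

    /** Returns the cached value if present; otherwise calls the loader and stores its result. */
    @SuppressWarnings("unchecked")
    <T> T get(int id, IntFunction<T> loader) {
        String key = keyFor(id);
        Object cached = store.get(key);
        if (cached != null) {
            return (T) cached;          // cache hit: the loader (the SQL query) is skipped
        }
        T loaded = loader.apply(id);    // cache miss: fall through to the "database"
        if (loaded != null) {
            store.put(key, loaded);     // simplification: null results are not stored here
        }
        return loaded;
    }
}

class ReadThroughCacheDemo {
    public static void main(String[] args) {
        AtomicInteger databaseCalls = new AtomicInteger();
        IntFunction<String> fakeDatabase = id -> {
            databaseCalls.incrementAndGet();
            return "city-" + id;
        };
        ReadThroughCacheSketch cache = new ReadThroughCacheSketch("city_info");
        cache.get(1, fakeDatabase);     // first call runs the loader
        cache.get(1, fakeDatabase);     // second call is answered from the map
        System.out.println("database calls: " + databaseCalls.get()); // prints "database calls: 1"
    }
}
```

The second `get` never reaches the loader, which matches the missing SQL statement in the log on the second request.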

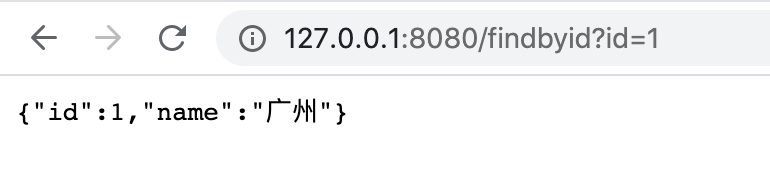

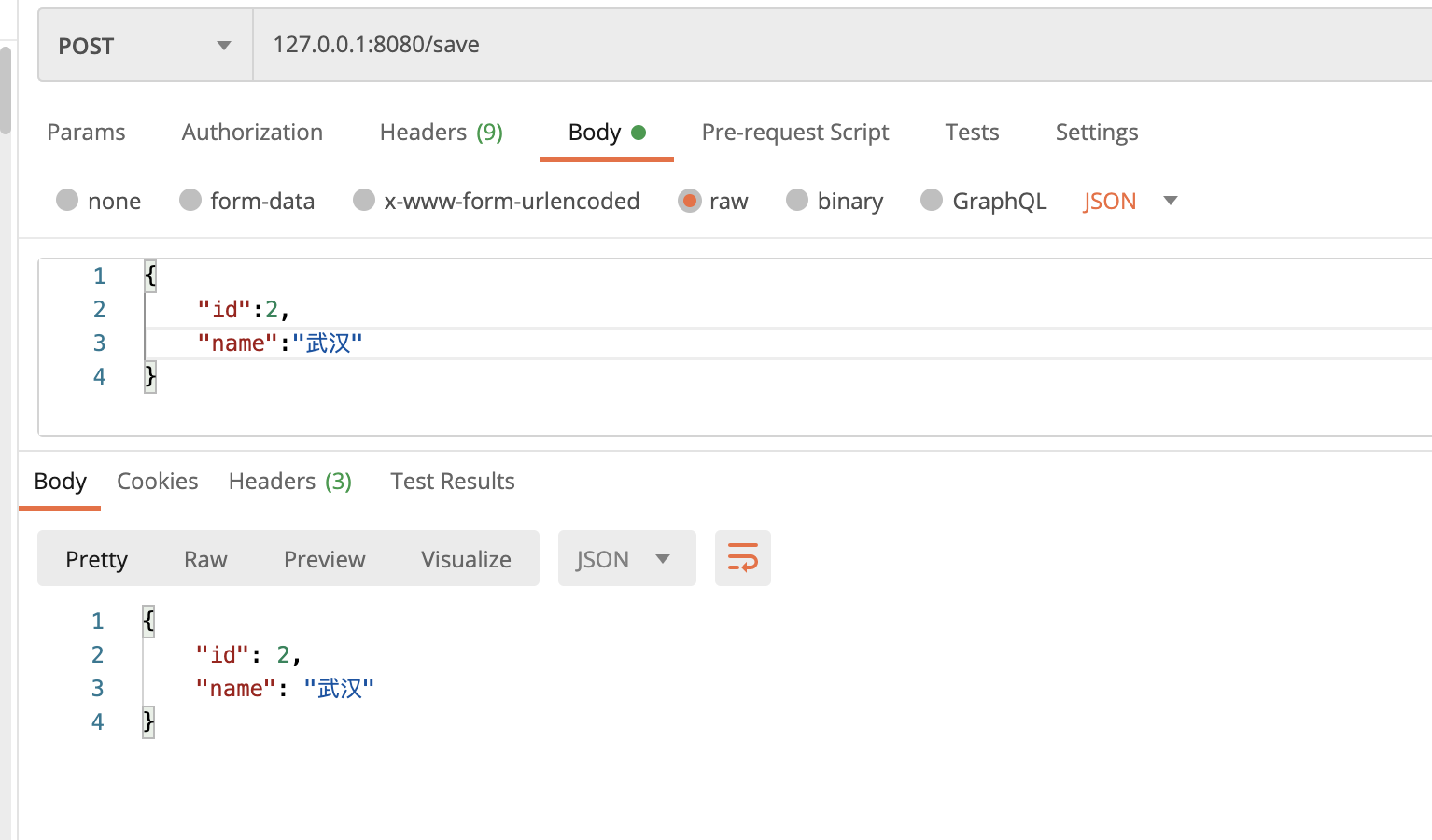

Now we test the second REST endpoint.

The database now has one more row.

Let's look at the data in Redis again.

Querying the second row returns:

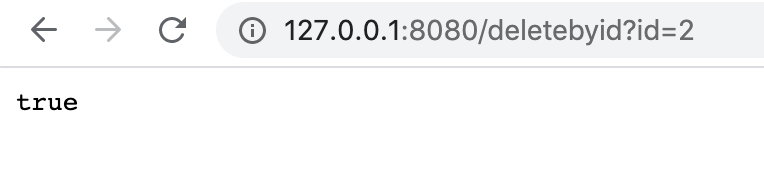

Now let's delete the second row.

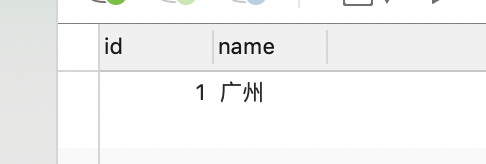

The second row has been deleted from the database.

We can also see that the second entry has been removed from Redis.

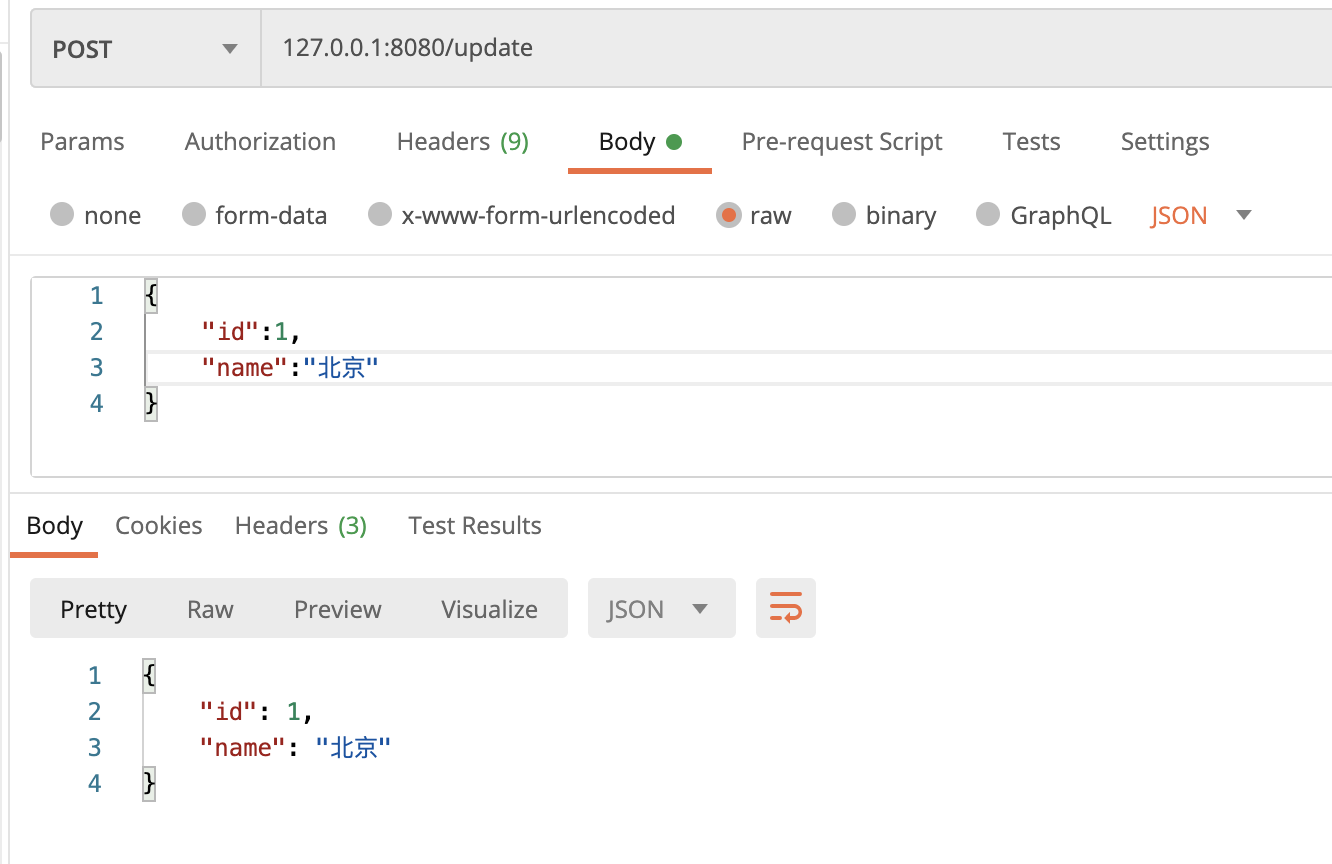

Now let's modify the first row.

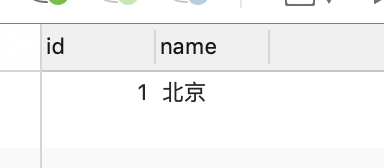

The database row is updated as well.

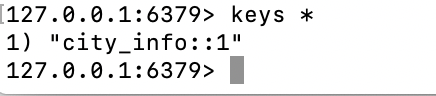

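The entry is still present in Redis.

Now we query the first row again.

The backend log again contains no SELECT statement for this query. The log up to this point is: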

2020-09-30 06:32:57.729 DEBUG 1349 --- [nio-8080-exec-3] c.g.rediscaching.dao.CityDao.insert : ==> Preparing: INSERT INTO city ( id, name ) VALUES ( ?, ? )

2020-09-30 06:32:57.730 DEBUG 1349 --- [nio-8080-exec-3] c.g.rediscaching.dao.CityDao.insert : ==> Parameters: 2(Integer), 武汉(String)

2020-09-30 06:32:57.735 DEBUG 1349 --- [nio-8080-exec-3] c.g.rediscaching.dao.CityDao.insert : <== Updates: 1

2020-09-30 06:38:04.042 DEBUG 1349 --- [io-8080-exec-10] c.g.rediscaching.dao.CityDao.deleteById : ==> Preparing: DELETE FROM city WHERE id=?

2020-09-30 06:38:04.043 DEBUG 1349 --- [io-8080-exec-10] c.g.rediscaching.dao.CityDao.deleteById : ==> Parameters: 2(Integer)

2020-09-30 06:38:04.047 DEBUG 1349 --- [io-8080-exec-10] c.g.rediscaching.dao.CityDao.deleteById : <== Updates: 1

2020-09-30 06:40:09.723 DEBUG 1349 --- [nio-8080-exec-3] c.g.rediscaching.dao.CityDao.update : ==> Preparing: UPDATE city SET name=? WHERE id=?

2020-09-30 06:40:09.728 DEBUG 1349 --- [nio-8080-exec-3] c.g.rediscaching.dao.CityDao.update : ==> Parameters: 北京(String), 1(Integer)

2020-09-30 06:40:09.733 DEBUG 1349 --- [nio-8080-exec-3] c.g.rediscaching.dao.CityDao.update : <== Updates: 1

Now let's give the cache an expiration time.

    @Configuration
    @EnableCaching
    public class RedisConfig extends CachingConfigurerSupport {

        @Value("${spring.redis.host}")
        private String host;

        @Value("${spring.redis.port}")
        private int port;

        @Value("${spring.redis.password}")
        private String password;

        @Bean
        public KeyGenerator wiselyKeyGenerator() {
            return new KeyGenerator() {
                @Override
                public Object generate(Object target, Method method, Object... params) {
                    StringBuilder sb = new StringBuilder();
                    sb.append(target.getClass().getName());
                    sb.append(method.getName());
                    for (Object obj : params) {
                        sb.append(obj.toString());
                    }
                    return sb.toString();
                }
            };
        }

        @Bean
        public JedisConnectionFactory redisConnectionFactory() {
            JedisConnectionFactory factory = new JedisConnectionFactory();
            factory.setHostName(host);
            factory.setPort(port);
            factory.setPassword(password);
            return factory;
        }

        @Bean
        public CacheManager cacheManager(RedisConnectionFactory factory) {
            Random random = new Random();
            return new RedisCacheManager(
                    RedisCacheWriter.nonLockingRedisCacheWriter(factory),
                    // caches without their own policy expire within 20 minutes
                    getRedisCacheConfigurationWithTtl(1140 + random.nextInt(60)),
                    // per-cache policies, keyed by cache name
                    getRedisCacheConfigurationMap()
            );
        }

        @Bean
        public RedisTemplate<String, String> redisTemplate(RedisConnectionFactory factory) {
            StringRedisTemplate template = new StringRedisTemplate(factory);
            // configure the serializer so that ReportBean need not implement Serializable
            setSerializer(template);
            template.afterPropertiesSet();
            return template;
        }

        private void setSerializer(StringRedisTemplate template) {
            Jackson2JsonRedisSerializer jackson2JsonRedisSerializer = new Jackson2JsonRedisSerializer(Object.class);
            ObjectMapper om = new ObjectMapper();
            om.setVisibility(PropertyAccessor.ALL, JsonAutoDetect.Visibility.ANY);
            om.enableDefaultTyping(ObjectMapper.DefaultTyping.NON_FINAL);
            jackson2JsonRedisSerializer.setObjectMapper(om);
            template.setValueSerializer(jackson2JsonRedisSerializer);
        }

        private Map<String, RedisCacheConfiguration> getRedisCacheConfigurationMap() {
            Map<String, RedisCacheConfiguration> redisCacheConfigurationMap = new ConcurrentHashMap<>();
            Random random = new Random();
            redisCacheConfigurationMap.put("city_info", getRedisCacheConfigurationWithTtl(540 + random.nextInt(60)));
            return redisCacheConfigurationMap;
        }

        private RedisCacheConfiguration getRedisCacheConfigurationWithTtl(Integer seconds) {
            Jackson2JsonRedisSerializer<Object> jackson2JsonRedisSerializer = new Jackson2JsonRedisSerializer<>(Object.class);
            ObjectMapper om = new ObjectMapper();
            om.setVisibility(PropertyAccessor.ALL, JsonAutoDetect.Visibility.ANY);
            om.enableDefaultTyping(ObjectMapper.DefaultTyping.NON_FINAL);
            jackson2JsonRedisSerializer.setObjectMapper(om);
            RedisCacheConfiguration redisCacheConfiguration = RedisCacheConfiguration.defaultCacheConfig();
            redisCacheConfiguration = redisCacheConfiguration.serializeValuesWith(
                    RedisSerializationContext
                            .SerializationPair
                            .fromSerializer(jackson2JsonRedisSerializer)
            ).entryTtl(Duration.ofSeconds(seconds));
            return redisCacheConfiguration;
        }
    }

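Checking the remaining TTL of the key in Redis, we can see that it uses the expiration time configured specifically for the city_info cache (540 to 599 seconds).

Now we adjust RedisConfig and change the configured cache name from city_info to something else.

    private Map<String, RedisCacheConfiguration> getRedisCacheConfigurationMap() {
        Map<String, RedisCacheConfiguration> redisCacheConfigurationMap = new ConcurrentHashMap<>();
        Random random = new Random();
        redisCacheConfigurationMap.put("abcd", getRedisCacheConfigurationWithTtl(540 + random.nextInt(60)));
        return redisCacheConfigurationMap;
    }

With this change city_info no longer has its own policy, so the default expiration of roughly 20 minutes applies.

As we can see, the key now uses the default expiration of roughly 20 minutes (1140 to 1199 seconds) that is shared by every cache without its own entry.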
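
The lookup rule behind this behaviour (a per-cache TTL when the cache name is registered, the default TTL otherwise) can be sketched in plain Java. This is only an illustration under assumptions: `TtlPolicySketch` and `ttlFor` are hypothetical names, not part of Spring Data Redis, and only the second counts are taken from the configuration above.

```java
import java.time.Duration;
import java.util.Map;
import java.util.Random;
import java.util.concurrent.ConcurrentHashMap;

/** Hypothetical helper mirroring how a TTL is chosen per cache name, with a default fallback. */
class TtlPolicySketch {
    private final Duration defaultTtl;
    private final Map<String, Duration> perCacheTtl = new ConcurrentHashMap<>();

    /** Registers one cache name with the short TTL; every other name gets the default. */
    TtlPolicySketch(String configuredCacheName, Random random) {
        // Same numbers as the configuration: 1140-1199 s by default, 540-599 s when registered.
        this.defaultTtl = Duration.ofSeconds(1140 + random.nextInt(60));
        perCacheTtl.put(configuredCacheName, Duration.ofSeconds(540 + random.nextInt(60)));
    }

    Duration ttlFor(String cacheName) {
        return perCacheTtl.getOrDefault(cacheName, defaultTtl);
    }
}

class TtlPolicyDemo {
    public static void main(String[] args) {
        TtlPolicySketch registered = new TtlPolicySketch("city_info", new Random());
        TtlPolicySketch renamed = new TtlPolicySketch("abcd", new Random());
        // city_info is registered: a value between 540 and 599 seconds
        System.out.println("registered: " + registered.ttlFor("city_info").getSeconds() + " s");
        // only "abcd" is registered: city_info falls back to 1140-1199 seconds
        System.out.println("renamed:    " + renamed.ttlFor("city_info").getSeconds() + " s");
    }
}
```

One detail worth noticing: as in the configuration above, the random offset is drawn once when the object is created, so every entry in a given cache shares the same TTL. It does not vary from key to key.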

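Now let's add protection against a burst of concurrent requests hitting the cache.

For the general principles of handling high concurrency on a cache, see the article "Building a cache: demo code for preventing high concurrency".

Here we want to do this with an annotation, so we add a new one:

    @Target({ ElementType.METHOD })
    @Retention(RetentionPolicy.RUNTIME)
    public @interface Lock {
    }

Next, add a Redis utility class that contains the distributed lock implementation: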

    @Component
    public class RedisUtils {

        @Autowired
        private RedisTemplate redisTemplate;

        private static final Long RELEASE_SUCCESS = 1L;

        private static final String UNLOCK_LUA = "if redis.call('get', KEYS[1]) == ARGV[1] then return redis.call('del', KEYS[1]) else return 0 end";

        /**
         * Write a value to the cache
         */
        public boolean set(final String key, Object value) {
            boolean result = false;
            try {
                ValueOperations<Serializable, Object> operations = redisTemplate.opsForValue();
                operations.set(key, value);
                result = true;
            } catch (Exception e) {
                e.printStackTrace();
            }
            return result;
        }

        /**
         * Write a value to the cache with an expiration time
         */
        public boolean set(final String key, Object value, Long expireTime, TimeUnit timeUnit) {
            boolean result = false;
            try {
                ValueOperations<Serializable, Object> operations = redisTemplate.opsForValue();
                operations.set(key, value);
                redisTemplate.expire(key, expireTime, timeUnit);
                result = true;
            } catch (Exception e) {
                e.printStackTrace();
            }
            return result;
        }

        /**
         * Write a value to the cache with an expiration time; only the first write takes effect
         * @param key
         * @param value
         * @param expireTime
         * @param timeUnit
         * @return
         */
        public boolean setIfAbsent(final String key, Object value, Long expireTime, TimeUnit timeUnit) {
            boolean result = false;
            try {
                ValueOperations<Serializable, Object> operations = redisTemplate.opsForValue();
                // report whether the key was actually set; returning true unconditionally
                // would let lock() succeed even when another caller already holds the key
                result = Boolean.TRUE.equals(operations.setIfAbsent(key, value, expireTime, timeUnit));
            } catch (Exception e) {
                e.printStackTrace();
            }
            return result;
        }

        /**
         * Delete the values for several keys
         */
        public void remove(final String... keys) {
            for (String key : keys) {
                remove(key);
            }
        }

        /**
         * Delete keys in bulk by pattern
         */
        public void removePattern(final String pattern) {
            Set

        /**
         * Try to acquire the lock; returns immediately
         *
         * @param key
         * @param value
         * @param timeout
         * @return
         */
        public boolean lock(String key, String value, long timeout) {
            return setIfAbsent(key, value, timeout, TimeUnit.MILLISECONDS);
        }

        /**
         * Acquire the lock in a blocking fashion
         *
         * @param key
         * @param value
         * @param timeout
         * @return
         */
        public boolean lockBlock(String key, String value, long timeout) {
            long start = System.currentTimeMillis();
            while (true) {
                // check whether we have timed out
                if (System.currentTimeMillis() - start > timeout) {
                    return false;
                }
                // run the SET command
                Boolean absent = setIfAbsent(key, value, timeout, TimeUnit.MILLISECONDS);
                // the null check is not strictly necessary; it is here for rigor
                if (absent == null) {
                    return false;
                }
                // did we acquire the lock?
                if (absent) {
                    return true;
                }
            }
        }

        /**
         * Release the lock
         * @param key
         * @param value
         * @return
         */
        public boolean unlock(String key, String value) {
            RedisScript
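
The listing above is cut off in the source, so as an illustration of the locking contract it relies on, here is a minimal in-process sketch. It makes two assumptions: acquiring behaves like a set-if-absent with an expiry, and releasing deletes the key only if the stored value still matches the caller, which is what the `UNLOCK_LUA` script expresses. `LocalLockSketch` and `Holder` are hypothetical names, and a `ConcurrentHashMap` stands in for Redis.

```java
import java.util.concurrent.ConcurrentHashMap;

/** In-process stand-in for the Redis lock: set-if-absent with expiry, owner-checked release. */
class LocalLockSketch {
    private static final class Holder {
        final String owner;
        final long expiresAtMillis;

        Holder(String owner, long expiresAtMillis) {
            this.owner = owner;
            this.expiresAtMillis = expiresAtMillis;
        }
    }

    private final ConcurrentHashMap<String, Holder> locks = new ConcurrentHashMap<>();

    /** Succeeds only if the key is free or its previous holder has expired. */
    boolean lock(String key, String owner, long timeoutMillis) {
        long now = System.currentTimeMillis();
        Holder candidate = new Holder(owner, now + timeoutMillis);
        // compute() runs atomically per key, so only one caller can install its candidate
        Holder winner = locks.compute(key, (k, current) ->
                (current == null || current.expiresAtMillis <= now) ? candidate : current);
        return winner == candidate;
    }

    /** Removes the key only when the caller still owns it (compare, then delete). */
    boolean unlock(String key, String owner) {
        Holder current = locks.get(key);
        return current != null
                && current.owner.equals(owner)
                && locks.remove(key, current);   // atomic: removes only this exact holder
    }
}

class LocalLockDemo {
    public static void main(String[] args) {
        LocalLockSketch locks = new LocalLockSketch();
        System.out.println(locks.lock("city_info::1lock", "id1", 3000));   // true: key was free
        System.out.println(locks.lock("city_info::1lock", "other", 3000)); // false: already held
        System.out.println(locks.unlock("city_info::1lock", "other"));     // false: not the owner
        System.out.println(locks.unlock("city_info::1lock", "id1"));       // true: owner releases
    }
}
```

The owner check on release is the reason the lock stores a value at all: without it, a caller whose lock had already expired could delete a lock that now belongs to someone else.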

Implement an AOP aspect that intercepts the burst of concurrent requests when a cache entry expires:

    /**
     * AOP aspect that intercepts high concurrency when a cache entry expires
     *
     * @author 关键
     */
    @Aspect
    @Component
    public class LockAop {

        @Autowired
        private RedisUtils redisUtils;

        @Around(value = "@annotation(com.guanjian.rediscaching.annotation.Lock)")
        public Object lock(ProceedingJoinPoint joinPoint) throws Throwable {
            MethodSignature methodSignature = (MethodSignature) joinPoint.getSignature();
            Cacheable cacheableAnnotion = methodSignature.getMethod().getDeclaredAnnotation(Cacheable.class);
            String[] cacheNames = cacheableAnnotion.cacheNames();
            String idKey = cacheableAnnotion.key();
            String[] paramNames = methodSignature.getParameterNames();
            if (paramNames != null && paramNames.length > 0) {
                Object[] args = joinPoint.getArgs();
                Map<String,Object> params = new HashMap<>();
                for (int i = 0; i < paramNames.length; i++) {
                    params.put(paramNames[i], args[i]);
                }
                // strip the leading '#' from the SpEL key, e.g. "#id" becomes "id"
                idKey = idKey.substring(1);
                String key = cacheNames[0] + "::" + params.get(idKey).toString();
                if (!redisUtils.exists(key)) {
                    if (redisUtils.lock(key + "lock", "id" + params.get(idKey).toString(), 3000)) {
                        try {
                            // proceed inside the try so the lock is released even if the call throws
                            return joinPoint.proceed();
                        } finally {
                            redisUtils.unlock(key + "lock", "id" + params.get(idKey).toString());
                        }
                    } else {
                        LocalDateTime now = LocalDateTime.now();
                        Future
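
The branch for callers that fail to get the lock is cut off in the source, so what follows is not the author's code. It is a minimal sketch of one common way to complete the idea, under the assumption that losers simply poll the cache until the winner has filled it or a deadline passes. `SingleFlightSketch`, `SingleFlightDemo`, and `loadsFor` are hypothetical names, and in-memory maps stand in for Redis.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.concurrent.Callable;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Future;
import java.util.concurrent.atomic.AtomicInteger;
import java.util.function.Supplier;

/** Sketch: on a cache miss only the lock winner loads; everyone else waits for the cache. */
class SingleFlightSketch {
    private final Map<String, Object> cache = new ConcurrentHashMap<>();
    private final Map<String, Boolean> locks = new ConcurrentHashMap<>();

    Object get(String key, Supplier<Object> loader, long maxWaitMillis) {
        Object value = cache.get(key);
        if (value != null) {
            return value;
        }
        if (locks.putIfAbsent(key, Boolean.TRUE) == null) {   // we won the lock
            try {
                value = cache.get(key);                       // re-check: a previous winner may have filled it
                if (value == null) {
                    value = loader.get();
                    if (value != null) {
                        cache.put(key, value);
                    }
                }
                return value;
            } finally {
                locks.remove(key);                            // always release, even if the loader throws
            }
        }
        long deadline = System.currentTimeMillis() + maxWaitMillis;
        while (System.currentTimeMillis() < deadline) {       // we lost: poll until the cache is filled
            value = cache.get(key);
            if (value != null) {
                return value;
            }
            try {
                Thread.sleep(10);
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
                return null;
            }
        }
        return null;                                          // gave up waiting; the caller decides what to do
    }
}

class SingleFlightDemo {
    /** Fires concurrent reads for one key and returns how many times the loader ran. */
    static int loadsFor(int threads) {
        SingleFlightSketch sketch = new SingleFlightSketch();
        AtomicInteger loads = new AtomicInteger();
        Supplier<Object> slowLoader = () -> {
            loads.incrementAndGet();
            try {
                Thread.sleep(50);                             // pretend the database is slow
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
            return "city-1";
        };
        Callable<Object> task = () -> sketch.get("city_info::1", slowLoader, 2000);
        ExecutorService pool = Executors.newFixedThreadPool(threads);
        try {
            List<Future<Object>> results = new ArrayList<>();
            for (int i = 0; i < threads; i++) {
                results.add(pool.submit(task));
            }
            for (Future<Object> result : results) {
                result.get();                                 // wait for every caller to finish
            }
        } catch (Exception e) {
            throw new IllegalStateException(e);
        } finally {
            pool.shutdown();
        }
        return loads.get();
    }

    public static void main(String[] args) {
        System.out.println("loader runs: " + loadsFor(8));    // prints "loader runs: 1"
    }
}
```

The re-check after winning the lock matters: a caller that missed the cache just before the first winner finished can still win the lock afterwards, and without the second look it would load from the database again.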

Finally, add the annotation to the query method:

    @RestController
    public class CityController {

        @Autowired
        private CityService cityService;

        @GetMapping("/findbyid")
        @Cacheable(cacheNames = "city_info", key = "#id")
        @Lock
        public City findCityById(@RequestParam("id") int id) {
            return cityService.getById(id);
        }

        @PostMapping("/save")
        @CachePut(cacheNames = "city_info", key = "#city.id")
        public City saveCity(@RequestBody City city) {
            cityService.save(city);
            return city;
        }

        @GetMapping("/deletebyid")
        @CacheEvict(cacheNames = "city_info", key = "#id")
        public boolean deleteCityById(@RequestParam("id") int id) {
            return cityService.removeById(id);
        }

        @PostMapping("/update")
        @CachePut(cacheNames = "city_info", key = "#city.id")
        public City updateCity(@RequestBody City city) {
            City cityQuery = new City();
            cityQuery.setId(city.getId());
            QueryWrapper

现在我们来增加布隆过滤器来防治恶意无效访问

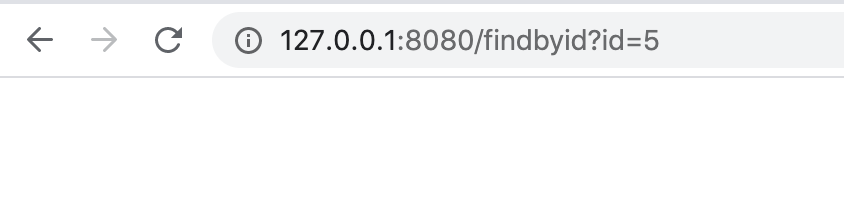

在该缓存系统中存在一个问题,那就是当用户查询了数据库中不存在的id的时候,缓存系统依然会将空值添加到redis中。如果有恶意用户通过工具不断使用不存在的id进行访问的时候,一方面会对数据库造成巨大的访问压力,另一方面可能会把redis内存撑破。

比方说我们访问一个不存在的id=5的时候

Redis依然会被写入,查出来是NullValue

代码实现(请注意,该实现依然存在漏洞,但是可以杜绝大部分的恶意访问)

先写一个标签

@Target({ ElementType.METHOD }) @Retention(RetentionPolicy.RUNTIME) public @interface Bloom { }

在RedisConfig中添加一个布隆过滤器的Bean

@Bean public BloomFilter
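
The bean definition is cut off in the source, so the concrete filter type and its sizing are not known here. To show what such a filter does, the following is a small hand-rolled stand-in, not the class used in this post: `TinyBloomFilter`, its constructor parameters, and the FNV-style hashing are assumptions chosen for brevity.

```java
import java.nio.charset.StandardCharsets;
import java.util.BitSet;

/** Minimal Bloom filter sketch: may report false positives, never false negatives. */
class TinyBloomFilter {
    private final BitSet bits;
    private final int size;
    private final int hashCount;

    TinyBloomFilter(int size, int hashCount) {
        this.bits = new BitSet(size);
        this.size = size;
        this.hashCount = hashCount;
    }

    /** Sets one bit per hash function for the given value. */
    void put(String value) {
        for (int i = 0; i < hashCount; i++) {
            bits.set(index(value, i));
        }
    }

    /** False means "definitely never added"; true means "possibly added". */
    boolean mightContain(String value) {
        for (int i = 0; i < hashCount; i++) {
            if (!bits.get(index(value, i))) {
                return false;
            }
        }
        return true;
    }

    /** Derives the i-th bit position from two base hashes (double hashing). */
    private int index(String value, int i) {
        byte[] data = value.getBytes(StandardCharsets.UTF_8);
        int h1 = fnv1a(data, 0x811C9DC5);
        int h2 = fnv1a(data, 0x5BD1E995) | 1;   // force odd so successive steps differ
        return Math.floorMod(h1 + i * h2, size);
    }

    private static int fnv1a(byte[] data, int seed) {
        int hash = seed;
        for (byte b : data) {
            hash ^= (b & 0xFF);
            hash *= 16777619;
        }
        return hash;
    }
}

class TinyBloomFilterDemo {
    public static void main(String[] args) {
        TinyBloomFilter filter = new TinyBloomFilter(1 << 16, 3);
        filter.put("city_info::1");
        System.out.println(filter.mightContain("city_info::1")); // true: added values are always found
        System.out.println(filter.mightContain("city_info::5")); // false unless a rare collision occurs
    }
}
```

Because a Bloom filter can answer "possibly present" for a key that was never added, it cannot reject every invalid id. That is consistent with the caveat above that this approach blocks most, but not all, malicious requests.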

Create a task scheduler that reads the id values from the database once a minute and writes them into the Bloom filter:

    @Component
    public class BloomFilterScheduler {

        private ScheduledExecutorService scheduledExecutorService = Executors.newSingleThreadScheduledExecutor();

        @Autowired
        private BloomFilter

        private ScheduledFuture scheduleTask(Runnable task) {
            return scheduledExecutorService.scheduleAtFixedRate(task, 0, 1, TimeUnit.MINUTES);
        }

        @PostConstruct
        public ScheduledFuture scheduleChange() {
            return scheduleTask(this::getAllCityForBloomFilter);
        }
    }

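Next, write an AOP interceptor for the Bloom filter. If the key is not in the Bloom filter, the request is not allowed to reach the database, and no cache entry is created.

    @Aspect
    @Component
    public class BloomFilterAop {

        @Autowired
        private BloomFilter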
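
The aspect body is cut off in the source. The sketch below shows the guard logic described in this section under stated assumptions: a request whose key the filter does not recognise is rejected with an exception before the database is touched, and a null query result also raises an exception so that nothing gets cached. `BloomGuardSketch` and `query` are hypothetical names, the exception type is a guess, and a plain `Predicate<String>` (backed by a `HashSet` in the demo) stands in for the filter's membership test.

```java
import java.util.HashSet;
import java.util.Set;
import java.util.concurrent.atomic.AtomicInteger;
import java.util.function.IntFunction;
import java.util.function.Predicate;

/** Sketch of the guard: consult the filter first, and never hand back a null to be cached. */
class BloomGuardSketch {
    private final Predicate<String> mightContain;

    BloomGuardSketch(Predicate<String> mightContain) {
        this.mightContain = mightContain;
    }

    <T> T query(String cacheName, int id, IntFunction<T> databaseQuery) {
        String key = cacheName + "::" + id;
        if (!mightContain.test(key)) {
            // rejected before the database is touched; nothing reaches the cache either
            throw new IllegalArgumentException("rejected by filter: " + key);
        }
        T result = databaseQuery.apply(id);
        if (result == null) {
            // filter false positive or deleted row: throw so that no null entry gets cached
            throw new IllegalArgumentException("no row for: " + key);
        }
        return result;
    }
}

class BloomGuardDemo {
    public static void main(String[] args) {
        Set<String> knownKeys = new HashSet<>();
        knownKeys.add("city_info::1");
        AtomicInteger databaseCalls = new AtomicInteger();
        IntFunction<String> fakeDatabase = id -> {
            databaseCalls.incrementAndGet();
            return "city-" + id;
        };
        BloomGuardSketch guard = new BloomGuardSketch(knownKeys::contains);

        System.out.println(guard.query("city_info", 1, fakeDatabase)); // prints "city-1"
        try {
            guard.query("city_info", 5, fakeDatabase);
        } catch (IllegalArgumentException e) {
            System.out.println(e.getMessage());                        // prints "rejected by filter: city_info::5"
        }
        System.out.println("database calls: " + databaseCalls.get());  // prints "database calls: 1"
    }
}
```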

Finally, the controller, with the annotation applied. Note that when the queried object is null we throw an exception, which prevents a cache entry from being created in Redis. When an object is saved, its id is written to the Bloom filter. Because there may be several cluster nodes, the Bloom filters on different nodes can become inconsistent. However, the database is rescanned every minute, so after each minute the filters on all nodes are back in sync. We will of course look at a better way to keep the data consistent.

    @RestController
    public class CityController {

        @Autowired
        private CityService cityService;

        @Autowired
        private BloomFilter

        @PostMapping("/save")
        @CachePut(cacheNames = "city_info", key = "#city.id")
        public City saveCity(@RequestBody City city) {
            if (cityService.save(city)) {
                bloomFilter.put("city_info::" + city.getId());
                return city;
            }
            throw new IllegalArgumentException("保存失败");
        }

        @GetMapping("/deletebyid")
        @CacheEvict(cacheNames = "city_info", key = "#id")
        public boolean deleteCityById(@RequestParam("id") int id) {
            return cityService.removeById(id);
        }

        @PostMapping("/update")
        @CachePut(cacheNames = "city_info", key = "#city.id")
        public City updateCity(@RequestBody City city) {
            City cityQuery = new City();
            cityQuery.setId(city.getId());
            QueryWrapper

Synchronizing the Bloom filter across distributed nodes

Add the Hazelcast configuration. For background on Hazelcast, see the article "Hazelcast, a JVM in-memory distributed cache".

    @Configuration
    public class HazelcastConfiguration {

        @Bean
        public Config hazelCastConfig() {
            Config config = new Config();
            config.setInstanceName("hazelcast-instance").addMapConfig(
                    new MapConfig().setName("configuration")
                            .setMaxSizeConfig(new MaxSizeConfig(200, MaxSizeConfig.MaxSizePolicy.FREE_HEAP_SIZE))
                            .setEvictionPolicy(EvictionPolicy.LFU)
                            .setTimeToLiveSeconds(-1));
            return config;
        }

        @Bean
        public HazelcastInstance instance() {
            return Hazelcast.newHazelcastInstance();
        }

        @Bean
        public Map<Integer,BloomFilter

Modify the Bloom filter AOP aspect:

    @Aspect
    @Component
    public class BloomFilterAop {

        @Autowired
        private Map<Integer,BloomFilter

Change the scheduler so that it runs only once:

    @Component
    public class BloomFilterScheduler {

        @Autowired
        private BloomFilter

Finally, the controller:

    @RestController
    public class CityController {

        @Autowired
        private CityService cityService;

        @Autowired
        private BloomFilter

        @PostMapping("/save")
        @CachePut(cacheNames = "city_info", key = "#city.id")
        public City saveCity(@RequestBody City city) {
            if (cityService.save(city)) {
                CompletableFuture.runAsync(() -> {
                    bloomFilter.put("city_info::" + city.getId());
                    bloomFilters.put(1, bloomFilter);
                });
                return city;
            }
            throw new IllegalArgumentException("保存失败");
        }

        @GetMapping("/deletebyid")
        @CacheEvict(cacheNames = "city_info", key = "#id")
        public boolean deleteCityById(@RequestParam("id") int id) {
            return cityService.removeById(id);
        }

        @PostMapping("/update")
        @CachePut(cacheNames = "city_info", key = "#city.id")
        public City updateCity(@RequestBody City city) {
            City cityQuery = new City();
            cityQuery.setId(city.getId());
            QueryWrapper