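| Collection | Use case | Underlying structure | Growth | Thread-safe | Fail-fast |
| --- | --- | --- | --- | --- | --- |
| ArrayList | Random access | Array | 1.5x | No; Collections.synchronizedList() returns a thread-safe List | Yes |
| Vector | Thread-safe counterpart of ArrayList | Array | 2x | Yes (synchronized) | Yes |
| CopyOnWriteArrayList | Read-mostly workloads; writes take a lock and operate on a copy of the array, so readers may see stale data | Array | Every add copies the array into a new array of length len+1 | Yes (ReentrantLock in JDK 8; a built-in monitor in the source quoted below) | No |
| LinkedList | Faster insertion and removal | Doubly linked list | / | No | Yes |
| HashMap | Key-value mapping | Array + linked list / red-black tree (when a chain grows longer than 8) | 2x (rehash) | No | Yes |
| ConcurrentHashMap | Thread-safe HashMap | Array + linked list / red-black tree | 2x | Yes (segmented table before JDK 1.8; CAS + synchronized since JDK 1.8) | No |
| LinkedHashMap | HashMap that preserves insertion order or LRU order | Array + linked list / red-black tree, plus a separately maintained doubly linked list | | No | Yes |
| LinkedBlockingQueue | Blocking queue backed by a linked list | Singly linked list | | Yes (ReentrantLock) | |
| PriorityQueue | Priority queue, ordering | Heap | | No | |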

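1. Fail-fast behavior is backed by protected transient int modCount = 0;, which counts the structural modifications made to the container. An operation records modCount before it starts and compares it afterwards; if the value has changed, the container was structurally modified during serialization or iteration, and a ConcurrentModificationException is thrown.

2. Techniques used in HashMap:

- Computing the hash: XOR the high 16 bits of the key's hashCode into the low 16 bits to reduce collisions.

- Modulo: y%x => y&(x-1), valid when x is a power of two.

- Bucket index after a resize: if the bit of the hash that corresponds to the old capacity is 0, the entry keeps its index; if it is 1, the entry moves to index + oldCap.

- When the caller passes in a capacity, it is rounded up to the nearest power of two greater than or equal to it.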
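The resize rule in the third bullet can be checked with a few lines of arithmetic. The sketch below is not HashMap's code; the class and method names are made up for illustration, and it only assumes that the table length is a power of two.

```java
class ResizeSplitDemo {
    // Bucket index in a table whose length n is a power of two.
    static int indexFor(int hash, int n) {
        return hash & (n - 1);
    }

    // Index after the table doubles, derived from the old index alone:
    // only the hash bit equal to oldCap decides whether the entry moves.
    static int indexAfterDoubling(int hash, int oldCap) {
        int oldIndex = indexFor(hash, oldCap);
        return (hash & oldCap) == 0 ? oldIndex : oldIndex + oldCap;
    }

    public static void main(String[] args) {
        int oldCap = 16;
        // All four hashes share bucket 5 in a table of 16.
        for (int hash : new int[] {5, 21, 37, 53}) {
            System.out.println(hash + ": " + indexFor(hash, oldCap)
                    + " -> " + indexAfterDoubling(hash, oldCap));
        }
    }
}
```

Hashes 5 and 37 stay in bucket 5, while 21 and 53 move to bucket 21 (5 + 16), which matches what hash & 31 would give directly.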

I. ArrayList

public class ArrayList<E> extends AbstractList<E>

implements List<E>, RandomAccess, Cloneable, java.io.Serializable

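ArrayList mainly implements the List and RandomAccess interfaces.

ArrayList is a resizable-array implementation of the List interface. It implements all optional list operations and permits all elements, including null. In addition to implementing List, the class provides methods to manipulate the size of the array used internally to store the list. (The class is roughly equivalent to Vector, except that it is unsynchronized.)

The size(), isEmpty(), get(), set(), iterator(), and listIterator() operations run in constant time. The add() operation runs in amortized constant time, that is, adding n elements requires O(n) time. All other operations run in linear time (roughly speaking). The constant factor is low compared to that of LinkedList.

Each ArrayList instance has a capacity: the size of the array used to store the elements of the list. It is always at least as large as the list size. As elements are added to an ArrayList, its capacity grows automatically. The details of the growth policy are not specified beyond the fact that adding an element has constant amortized time cost.

An application can increase the capacity of an instance with ensureCapacity() before adding a large number of elements. This may reduce the amount of incremental reallocation.

Note that ArrayList is not synchronized. If multiple threads access an ArrayList instance concurrently and at least one of them modifies the list structurally, it must be synchronized externally. (A structural modification is any operation that adds or deletes one or more elements, or explicitly resizes the backing array; merely setting the value of an element is not a structural modification.) This is typically accomplished by synchronizing on some object that naturally encapsulates the list.

If no such object exists, the list should be wrapped using Collections.synchronizedList(). This is best done at creation time, to prevent accidental unsynchronized access to the list: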

List list = Collections.synchronizedList(new ArrayList(...));

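The iterators returned by this class's iterator() and listIterator(int) methods are fail-fast: if the list is structurally modified at any time after the iterator is created, in any way except through the iterator's own remove() or add() methods, the iterator throws a ConcurrentModificationException. (The iterator's own add() and remove() reset expectedModCount to modCount, so they do not trigger the exception; see the method analysis later in this section.) Thus, in the face of concurrent modification, the iterator fails quickly and cleanly rather than risking arbitrary, non-deterministic behavior at an undetermined time in the future.

Note that fail-fast behavior cannot be guaranteed: generally speaking, no hard guarantees are possible in the presence of unsynchronized concurrent modification. Fail-fast iterators throw ConcurrentModificationException on a best-effort basis. It would therefore be wrong to write a program that depends on this exception for its correctness; the fail-fast behavior of iterators should be used only to detect bugs.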
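The difference between modifying the list directly and modifying it through the iterator can be seen in a small single-threaded sketch. The class and method names are illustrative only. In line with the caveat above, the exception in the first method is a diagnostic, not something to build logic on.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.ConcurrentModificationException;
import java.util.Iterator;
import java.util.List;

class FailFastDemo {
    // Removes even numbers through the list itself while a for-each loop
    // is iterating over it. Returns true if the iterator noticed.
    static boolean removeThroughList(List<Integer> list) {
        try {
            for (Integer n : list) {
                if (n % 2 == 0) {
                    list.remove(n); // remove(Object): changes modCount behind the iterator
                }
            }
            return false;
        } catch (ConcurrentModificationException e) {
            return true;
        }
    }

    // Removes even numbers through the iterator, which keeps
    // expectedModCount in step with modCount.
    static void removeThroughIterator(List<Integer> list) {
        Iterator<Integer> it = list.iterator();
        while (it.hasNext()) {
            if (it.next() % 2 == 0) {
                it.remove();
            }
        }
    }

    public static void main(String[] args) {
        List<Integer> a = new ArrayList<>(Arrays.asList(1, 2, 3, 4));
        System.out.println("exception seen: " + removeThroughList(a));

        List<Integer> b = new ArrayList<>(Arrays.asList(1, 2, 3, 4));
        removeThroughIterator(b);
        System.out.println(b);
    }
}
```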

1. Default capacity

/**

* Default initial capacity. */

private static final int DEFAULT_CAPACITY = 10;

2. elementData, the array that stores the ArrayList's elements

/**

* The array buffer into which the elements of the ArrayList are stored.

* The capacity of the ArrayList is the length of this array buffer. Any

* empty ArrayList with elementData == DEFAULTCAPACITY_EMPTY_ELEMENTDATA

* will be expanded to DEFAULT_CAPACITY when the first element is added.

*/

transient Object[] elementData; // non-private to simplify nested class access

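The capacity of an ArrayList is the length of this array.

Any empty ArrayList whose elementData is DEFAULTCAPACITY_EMPTY_ELEMENTDATA has elementData expanded to length DEFAULT_CAPACITY when its first element is added.

3. size, the number of elements the ArrayList contains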

/**

* The size of the ArrayList (the number of elements it contains).

*

* @serial */

private int size;

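4. Constructors

ArrayList has three constructors:

1. ArrayList(): initializes elementData to the empty array DEFAULTCAPACITY_EMPTY_ELEMENTDATA.

2. ArrayList(int initialCapacity): initializes elementData to an array of length initialCapacity; when initialCapacity is 0, elementData is set to EMPTY_ELEMENTDATA.

3. ArrayList(Collection<? extends E> c): initializes elementData from the collection; if the collection is empty, elementData is set to EMPTY_ELEMENTDATA.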

/**

* Constructs an empty list with the specified initial capacity.

*

* @param initialCapacity the initial capacity of the list

* @throws IllegalArgumentException if the specified initial capacity

* is negative

*/

public ArrayList(int initialCapacity) {

if (initialCapacity > 0) {

this.elementData = new Object[initialCapacity];

} else if (initialCapacity == 0) {

this.elementData = EMPTY_ELEMENTDATA;

} else {

throw new IllegalArgumentException("Illegal Capacity: "+

initialCapacity);

}

}

/**

* Constructs an empty list with an initial capacity of ten.

*/

public ArrayList() {

this.elementData = DEFAULTCAPACITY_EMPTY_ELEMENTDATA;

}

/**

* Constructs a list containing the elements of the specified

* collection, in the order they are returned by the collection's

* iterator.

*

* @param c the collection whose elements are to be placed into this list

* @throws NullPointerException if the specified collection is null

*/

public ArrayList(Collection<? extends E> c) {

elementData = c.toArray();

if ((size = elementData.length) != 0) {

// defend against c.toArray (incorrectly) not returning Object[]

// (see e.g. https://bugs.openjdk.java.net/browse/JDK-6260652)

if (elementData.getClass() != Object[].class)

elementData = Arrays.copyOf(elementData, size, Object[].class);

} else {

// replace with empty array.

this.elementData = EMPTY_ELEMENTDATA;

}

}

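5. Fail-fast and structural modification

ArrayList extends AbstractList, whose modCount field counts the number of structural modifications.

A structural modification is one that changes the size of the list, or otherwise perturbs it in such a way that iterations in progress may yield incorrect results.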

/**

* The number of times this list has been <i>structurally modified</i>.

* Structural modifications are those that change the size of the

* list, or otherwise perturb it in such a fashion that iterations in

* progress may yield incorrect results.

*

* <p>This field is used by the iterator and list iterator implementation

* returned by the {@code iterator} and {@code listIterator} methods.

* If the value of this field changes unexpectedly, the iterator (or list

* iterator) will throw a {@code ConcurrentModificationException} in

* response to the {@code next}, {@code remove}, {@code previous},

* {@code set} or {@code add} operations. This provides

* <i>fail-fast</i> behavior, rather than non-deterministic behavior in

* the face of concurrent modification during iteration.

*

* <p><b>Use of this field by subclasses is optional.</b> If a subclass

* wishes to provide fail-fast iterators (and list iterators), then it

* merely has to increment this field in its {@code add(int, E)} and

* {@code remove(int)} methods (and any other methods that it overrides

* that result in structural modifications to the list). A single call to

* {@code add(int, E)} or {@code remove(int)} must add no more than

* one to this field, or the iterators (and list iterators) will throw

* bogus {@code ConcurrentModificationExceptions}. If an implementation

* does not wish to provide fail-fast iterators, this field may be

* ignored.

*/

protected transient int modCount = 0;

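Operations such as serialization and iteration (forEach) record modCount before they start and compare it afterwards; if the two values differ, they throw ConcurrentModificationException.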

/**

* Saves the state of the {@code ArrayList} instance to a stream

* (that is, serializes it).

*

* @param s the stream

* @throws java.io.IOException if an I/O error occurs

* @serialData The length of the array backing the {@code ArrayList}

* instance is emitted (int), followed by all of its elements

* (each an {@code Object}) in the proper order.

*/

private void writeObject(java.io.ObjectOutputStream s)

throws java.io.IOException {

// Write out element count, and any hidden stuff

int expectedModCount = modCount;

s.defaultWriteObject();

// Write out size as capacity for behavioral compatibility with clone()

s.writeInt(size);

// Write out all elements in the proper order.

for (int i=0; i<size; i++) {

s.writeObject(elementData[i]);

}

if (modCount != expectedModCount) {

throw new ConcurrentModificationException();

}

}

/**

* @throws NullPointerException {@inheritDoc}

*/

@Override

public void forEach(Consumer<? super E> action) {

Objects.requireNonNull(action);

final int expectedModCount = modCount;

final Object[] es = elementData;

final int size = this.size;

for (int i = 0; modCount == expectedModCount && i < size; i++)

action.accept(elementAt(es, i));

if (modCount != expectedModCount)

throw new ConcurrentModificationException();

}

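6. Important methods

- public void ensureCapacity(int minCapacity)

The minCapacity argument is the minimum capacity required. The array grows only if both of the following hold:

1. minCapacity is greater than the current length of elementData.

2. It is not the case that elementData is the default empty array and minCapacity is at most DEFAULT_CAPACITY.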

/**

* Increases the capacity of this {@code ArrayList} instance, if

* necessary, to ensure that it can hold at least the number of elements

* specified by the minimum capacity argument.

*

* @param minCapacity the desired minimum capacity

*/

public void ensureCapacity(int minCapacity) {

if (minCapacity > elementData.length

&& !(elementData == DEFAULTCAPACITY_EMPTY_ELEMENTDATA

&& minCapacity <= DEFAULT_CAPACITY)) {

modCount++;

grow(minCapacity);

}

}

- private int newCapacity(int minCapacity)

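Returns a capacity at least as large as the given minCapacity.

If that suffices, it returns 1.5 times the current length of elementData.

The result never exceeds MAX_ARRAY_SIZE unless the given minCapacity is itself greater than MAX_ARRAY_SIZE.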

/**

* Returns a capacity at least as large as the given minimum capacity.

* Returns the current capacity increased by 50% if that suffices.

* Will not return a capacity greater than MAX_ARRAY_SIZE unless

* the given minimum capacity is greater than MAX_ARRAY_SIZE.

*

* @param minCapacity the desired minimum capacity

* @throws OutOfMemoryError if minCapacity is less than zero

*/

private int newCapacity(int minCapacity) {

// overflow-conscious code

int oldCapacity = elementData.length;

int newCapacity = oldCapacity + (oldCapacity >> 1);

if (newCapacity - minCapacity <= 0) {

// As noted for elementData above: an empty ArrayList backed by
// DEFAULTCAPACITY_EMPTY_ELEMENTDATA grows to DEFAULT_CAPACITY on the first add.
if (elementData == DEFAULTCAPACITY_EMPTY_ELEMENTDATA)

return Math.max(DEFAULT_CAPACITY, minCapacity);

if (minCapacity < 0) // overflow

throw new OutOfMemoryError();

return minCapacity;

}

return (newCapacity - MAX_ARRAY_SIZE <= 0)

? newCapacity

: hugeCapacity(minCapacity);

}
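To see what "1.5 times" means with integer arithmetic, the growth expression oldCapacity + (oldCapacity >> 1) can be applied repeatedly. This is only a sketch of that one expression; it ignores the minCapacity and MAX_ARRAY_SIZE branches of newCapacity(), and the class name is made up.

```java
import java.util.ArrayList;
import java.util.List;

class GrowthSequenceDemo {
    // Capacities reached by repeatedly applying oldCapacity + (oldCapacity >> 1).
    static List<Integer> capacities(int initial, int steps) {
        List<Integer> result = new ArrayList<>();
        int capacity = initial;
        for (int i = 0; i < steps; i++) {
            result.add(capacity);
            capacity = capacity + (capacity >> 1); // the shift rounds down
        }
        return result;
    }

    public static void main(String[] args) {
        System.out.println(capacities(10, 6)); // prints [10, 15, 22, 33, 49, 73]
    }
}
```

Because the shift rounds down, the factor is slightly below 1.5 for odd capacities (15 grows to 22, not 23).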

- private Object[] grow(int minCapacity)

- private Object[] grow()

/**
 * Increases the capacity to ensure that it can hold at least the
 * number of elements specified by the minimum capacity argument.
 *
 * @param minCapacity the desired minimum capacity
 * @throws OutOfMemoryError if minCapacity is less than zero
 */
private Object[] grow(int minCapacity) {
    return elementData = Arrays.copyOf(elementData,
                                       newCapacity(minCapacity));
}

private Object[] grow() {
    return grow(size + 1);
}

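- private void add(E e, Object[] elementData, int s)

- public boolean add(E e)

- public void add(int index, E element)

When add(E e) needs to grow the array, the call chain ends in newCapacity(), and the capacity usually becomes the larger of elementData.length * 1.5 and minCapacity (see the code of newCapacity() for the exact rules).

add(int index, E element) first grows the array if necessary, then shifts the elements from index onward one position to the right with System.arraycopy, and finally stores the element at the given position.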

/**

* This helper method split out from add(E) to keep method

* bytecode size under 35 (the -XX:MaxInlineSize default value),

* which helps when add(E) is called in a C1-compiled loop.

*/

private void add(E e, Object[] elementData, int s) {

if (s == elementData.length)

elementData = grow();

elementData[s] = e;

size = s + 1;

}

/**

* Appends the specified element to the end of this list.

*

* @param e element to be appended to this list

* @return {@code true} (as specified by {@link Collection#add})

*/

public boolean add(E e) {

modCount++;

add(e, elementData, size);

return true;

}

/**

* Inserts the specified element at the specified position in this

* list. Shifts the element currently at that position (if any) and

* any subsequent elements to the right (adds one to their indices).

*

* @param index index at which the specified element is to be inserted

* @param element element to be inserted

* @throws IndexOutOfBoundsException {@inheritDoc}

*/

public void add(int index, E element) {

rangeCheckForAdd(index);

modCount++;

final int s;

Object[] elementData;

if ((s = size) == (elementData = this.elementData).length)

elementData = grow();

System.arraycopy(elementData, index,

elementData, index + 1,

s - index);

elementData[index] = element;

size = s + 1;

}

- public E remove(int index)

- public boolean remove(Object o)

- private void fastRemove(Object[] es, int i)

remove(int index) delegates the actual removal to fastRemove(), which also uses System.arraycopy().

remove(Object o) first finds the index of the object and then calls fastRemove().

/**

* Removes the element at the specified position in this list.

* Shifts any subsequent elements to the left (subtracts one from their

* indices).

*

* @param index the index of the element to be removed

* @return the element that was removed from the list

* @throws IndexOutOfBoundsException {@inheritDoc}

*/

public E remove(int index) {

Objects.checkIndex(index, size);

final Object[] es = elementData;

@SuppressWarnings("unchecked") E oldValue = (E) es[index];

fastRemove(es, index);

return oldValue;

}

/**

* Removes the first occurrence of the specified element from this list,

* if it is present. If the list does not contain the element, it is

* unchanged. More formally, removes the element with the lowest index

* {@code i} such that

* {@code Objects.equals(o, get(i))}

* (if such an element exists). Returns {@code true} if this list

* contained the specified element (or equivalently, if this list

* changed as a result of the call).

*

* @param o element to be removed from this list, if present

* @return {@code true} if this list contained the specified element

*/

public boolean remove(Object o) {

final Object[] es = elementData;

final int size = this.size;

int i = 0;

found: {

if (o == null) {

for (; i < size; i++)

if (es[i] == null)

break found;

} else {

for (; i < size; i++)

if (o.equals(es[i]))

break found;

}

return false;

}

fastRemove(es, i);

return true;

}

/**

* Private remove method that skips bounds checking and does not

* return the value removed.

*/

private void fastRemove(Object[] es, int i) {

modCount++;

final int newSize;

if ((newSize = size - 1) > i)

System.arraycopy(es, i + 1, es, i, newSize - i);

es[size = newSize] = null;

}

The iterator's add() and remove() resynchronize expectedModCount with modCount, so modifications made to the list through the iterator itself do not trigger the exception.

private class Itr implements Iterator<E> {
    ...
    public void remove() {
        if (lastRet < 0)
            throw new IllegalStateException();
        checkForComodification();

        try {
            ArrayList.this.remove(lastRet);
            cursor = lastRet;
            lastRet = -1;
            expectedModCount = modCount;
        } catch (IndexOutOfBoundsException ex) {
            throw new ConcurrentModificationException();
        }
    }
    ...
}

private class ListItr extends Itr implements ListIterator<E> {
    ...
    public void add(E e) {
        checkForComodification();

        try {
            int i = cursor;
            ArrayList.this.add(i, e);
            cursor = i + 1;
            lastRet = -1;
            expectedModCount = modCount;
        } catch (IndexOutOfBoundsException ex) {
            throw new ConcurrentModificationException();
        }
    }
    ...
}

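7. Serialization

ArrayList is backed by an array that grows dynamically, so not every slot of the array is necessarily in use, and there is no need to serialize all of them.

For that reason elementData is declared transient and is skipped by default serialization; writeObject() and readObject() write and read only the first size elements.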

/**

* Saves the state of the {@code ArrayList} instance to a stream

* (that is, serializes it).

*

* @param s the stream

* @throws java.io.IOException if an I/O error occurs

* @serialData The length of the array backing the {@code ArrayList}

* instance is emitted (int), followed by all of its elements

* (each an {@code Object}) in the proper order.

*/

private void writeObject(java.io.ObjectOutputStream s)

throws java.io.IOException {

// Write out element count, and any hidden stuff

int expectedModCount = modCount;

s.defaultWriteObject();

// Write out size as capacity for behavioral compatibility with clone()

s.writeInt(size);

// Write out all elements in the proper order.

for (int i=0; i<size; i++) {

s.writeObject(elementData[i]);

}

if (modCount != expectedModCount) {

throw new ConcurrentModificationException();

}

}

/**

* Reconstitutes the {@code ArrayList} instance from a stream (that is,

* deserializes it).

* @param s the stream

* @throws ClassNotFoundException if the class of a serialized object

* could not be found

* @throws java.io.IOException if an I/O error occurs

*/

private void readObject(java.io.ObjectInputStream s)

throws java.io.IOException, ClassNotFoundException {

// Read in size, and any hidden stuff

s.defaultReadObject();

// Read in capacity

s.readInt(); // ignored

if (size > 0) {

// like clone(), allocate array based upon size not capacity

SharedSecrets.getJavaObjectInputStreamAccess().checkArray(s, Object[].class, size);

Object[] elements = new Object[size];

// Read in all elements in the proper order.

for (int i = 0; i < size; i++) {

elements[i] = s.readObject();

}

elementData = elements;

} else if (size == 0) {

elementData = EMPTY_ELEMENTDATA;

} else {

throw new java.io.InvalidObjectException("Invalid size: " + size);

}

}
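From the caller's side none of this is visible: a list round-trips through the standard object streams regardless of how much spare capacity it had. A minimal sketch, with a made-up class name and an in-memory byte array standing in for a file or socket:

```java
import java.io.ByteArrayInputStream;
import java.io.ByteArrayOutputStream;
import java.io.IOException;
import java.io.ObjectInputStream;
import java.io.ObjectOutputStream;
import java.util.ArrayList;

class SerializationRoundTrip {
    // Serializes the list to a byte array and reads it back.
    @SuppressWarnings("unchecked")
    static ArrayList<String> roundTrip(ArrayList<String> list) {
        try {
            ByteArrayOutputStream bytes = new ByteArrayOutputStream();
            try (ObjectOutputStream out = new ObjectOutputStream(bytes)) {
                out.writeObject(list); // ends up in ArrayList.writeObject()
            }
            try (ObjectInputStream in = new ObjectInputStream(
                    new ByteArrayInputStream(bytes.toByteArray()))) {
                return (ArrayList<String>) in.readObject(); // ArrayList.readObject()
            }
        } catch (IOException | ClassNotFoundException e) {
            throw new IllegalStateException(e);
        }
    }

    public static void main(String[] args) {
        ArrayList<String> list = new ArrayList<>(1000); // large capacity, two elements
        list.add("a");
        list.add("b");
        System.out.println(roundTrip(list)); // prints [a, b]
    }
}
```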

II. Vector

public class Vector<E>

extends AbstractList<E>

implements List<E>, RandomAccess, Cloneable, java.io.Serializable

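Vector mainly implements the List and RandomAccess interfaces.

The Vector class implements a growable array of objects. Like an array, it contains components that can be accessed using an integer index. However, the size of a Vector can grow or shrink as needed to accommodate adding and removing items after the Vector has been created.

Each Vector tries to optimize storage management by maintaining a capacity and a capacityIncrement. The capacity is always at least as large as the Vector's size; it is usually larger, because as components are added the Vector's storage grows in chunks of size capacityIncrement. An application can increase the capacity of a Vector before inserting a large number of components; this reduces the amount of incremental reallocation.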

1. Important fields

elementData: the array that stores the Vector's components (called elements below); array slots after the last element are null.

/**
 * The array buffer into which the components of the vector are
 * stored. The capacity of the vector is the length of this array buffer,
 * and is at least large enough to contain all the vector's elements.
 *
 * Any array elements following the last element in the Vector are null.
 *
 * @serial
 */
protected Object[] elementData;

elementCount: the number of valid elements in the Vector object

/**
 * The number of valid components in this {@code Vector} object.
 * Components {@code elementData[0]} through
 * {@code elementData[elementCount-1]} are the actual items.
 *
 * @serial
 */
protected int elementCount;

capacityIncrement: when the number of elements exceeds the capacity, the capacity automatically grows by capacityIncrement. If capacityIncrement is less than or equal to zero, the capacity doubles each time it needs to grow.

/**
 * The amount by which the capacity of the vector is automatically
 * incremented when its size becomes greater than its capacity. If
 * the capacity increment is less than or equal to zero, the capacity
 * of the vector is doubled each time it needs to grow.
 *
 * @serial
 */
protected int capacityIncrement;

3. Important methods

/**

* Returns a capacity at least as large as the given minimum capacity.

* Will not return a capacity greater than MAX_ARRAY_SIZE unless

* the given minimum capacity is greater than MAX_ARRAY_SIZE.

*

* @param minCapacity the desired minimum capacity

* @throws OutOfMemoryError if minCapacity is less than zero

*/

private int newCapacity(int minCapacity) {

// overflow-conscious code

int oldCapacity = elementData.length;

int newCapacity = oldCapacity + ((capacityIncrement > 0) ?

capacityIncrement : oldCapacity);

if (newCapacity - minCapacity <= 0) {

if (minCapacity < 0) // overflow

throw new OutOfMemoryError();

return minCapacity;

}

return (newCapacity - MAX_ARRAY_SIZE <= 0)

? newCapacity

: hugeCapacity(minCapacity);

}

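4. Vector compared with ArrayList

- Vector is similar to ArrayList, but its methods are synchronized.

- Because Vector is synchronized, it has more overhead than ArrayList and is slower to access. Prefer ArrayList to Vector, since synchronization can be handled by the programmer where it is actually needed.

- The amount by which Vector grows is determined by capacityIncrement: the new capacity is either oldCapacity + capacityIncrement or twice the old capacity, whereas ArrayList grows to 1.5 times its old capacity.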
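Unlike ArrayList, Vector exposes its capacity through the public capacity() method, so the two growth modes can be observed directly. The helper below is an illustrative sketch; only Vector's constructors, add() and capacity() are real API.

```java
import java.util.Vector;

class VectorGrowthDemo {
    // Adds count elements and reports the resulting capacity.
    static int capacityAfter(Vector<Integer> v, int count) {
        for (int i = 0; i < count; i++) {
            v.add(i);
        }
        return v.capacity();
    }

    public static void main(String[] args) {
        // capacityIncrement defaults to 0, so the capacity doubles: 4 -> 8
        System.out.println(capacityAfter(new Vector<>(4), 5));
        // capacityIncrement is 3, so the capacity grows by 3: 4 -> 7
        System.out.println(capacityAfter(new Vector<>(4, 3), 5));
    }
}
```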

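5. Alternatives

- As mentioned in the ArrayList section, Collections.synchronizedList() returns a thread-safe wrapper around an ArrayList.

- The CopyOnWriteArrayList class from the java.util.concurrent package can be used.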

III. CopyOnWriteArrayList

public class CopyOnWriteArrayList<E>

implements List<E>, RandomAccess, Cloneable, java.io.Serializable

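CopyOnWriteArrayList mainly implements the List and RandomAccess interfaces.

It is a thread-safe variant of ArrayList in which all mutative operations are implemented by making a fresh copy of the underlying array.

This is ordinarily costly, but may be more efficient than the alternatives when traversal operations vastly outnumber mutations. It is also useful when you cannot or do not want to synchronize traversals, yet need to preclude interference among concurrent threads. The "snapshot"-style iterator uses a reference to the state of the array at the point the iterator was created. That array never changes during the lifetime of the iterator, so interference is impossible and the iterator is guaranteed not to throw ConcurrentModificationException. The iterator does not reflect additions, removals, or changes made to the list after it was created. Element-changing operations on the iterator itself (remove(), set(), and add()) are not supported; these methods throw UnsupportedOperationException.

All elements are permitted, including null.

Memory consistency effects: as with other concurrent collections, actions in a thread prior to placing an object into a CopyOnWriteArrayList happen-before actions subsequent to the access or removal of that element from the CopyOnWriteArrayList in another thread.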
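The snapshot behavior described above shows up even in a single thread. In the sketch below (class and method names are illustrative), the iterator is created before an element is added and therefore never sees it, and its remove() is rejected.

```java
import java.util.ArrayList;
import java.util.Iterator;
import java.util.List;
import java.util.concurrent.CopyOnWriteArrayList;

class SnapshotIteratorDemo {
    // Elements seen by an iterator that was created before a later add().
    static List<String> seenBySnapshot() {
        CopyOnWriteArrayList<String> list = new CopyOnWriteArrayList<>();
        list.add("a");
        list.add("b");
        Iterator<String> it = list.iterator(); // holds the current array
        list.add("c");                          // installs a new array
        List<String> seen = new ArrayList<>();
        while (it.hasNext()) {
            seen.add(it.next());                // no ConcurrentModificationException
        }
        return seen;
    }

    // The snapshot iterator cannot modify the list.
    static boolean iteratorRemoveIsUnsupported() {
        CopyOnWriteArrayList<String> list = new CopyOnWriteArrayList<>();
        list.add("a");
        Iterator<String> it = list.iterator();
        it.next();
        try {
            it.remove();
            return false;
        } catch (UnsupportedOperationException e) {
            return true;
        }
    }

    public static void main(String[] args) {
        System.out.println(seenBySnapshot());              // prints [a, b]
        System.out.println(iteratorRemoveIsUnsupported()); // prints true
    }
}
```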

1. Important fields

lock: the lock held by all mutating operations

/**
 * The lock protecting all mutators.  (We have a mild preference
 * for builtin monitors over ReentrantLock when either will do.)
 */
final transient Object lock = new Object();

array

/** The array, accessed only via getArray/setArray. */
private transient volatile Object[] array;

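2. Important methods

Every mutating operation holds the lock, which prevents lost updates and similar problems.

add() first obtains the current array with getArray(), copies it into a new array, modifies the copy, and finally installs the copy as the underlying array.

Likewise, remove(int index) does not modify the original array; it builds a new one.

Writes operate on the new copy while reads continue to read the old array, so the two do not interfere with each other.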

public E set(int index, E element) {

synchronized (lock) {

Object[] es = getArray();

E oldValue = elementAt(es, index);

if (oldValue != element) {

es = es.clone();

es[index] = element;

setArray(es);

}

return oldValue;

}

}

public boolean add(E e) {

synchronized (lock) {

Object[] es = getArray();

int len = es.length;

es = Arrays.copyOf(es, len + 1);

es[len] = e;

setArray(es);

return true;

}

}

public void add(int index, E element) {

synchronized (lock) {

Object[] es = getArray();

int len = es.length;

if (index > len || index < 0)

throw new IndexOutOfBoundsException(outOfBounds(index, len));

Object[] newElements;

int numMoved = len - index;

if (numMoved == 0)

newElements = Arrays.copyOf(es, len + 1);

else {

newElements = new Object[len + 1];

System.arraycopy(es, 0, newElements, 0, index);

System.arraycopy(es, index, newElements, index + 1,

numMoved);

}

newElements[index] = element;

setArray(newElements);

}

}

public E remove(int index) {

synchronized (lock) {

Object[] es = getArray();

int len = es.length;

E oldValue = elementAt(es, index);

int numMoved = len - index - 1;

Object[] newElements;

if (numMoved == 0)

newElements = Arrays.copyOf(es, len - 1);

else {

newElements = new Object[len - 1];

System.arraycopy(es, 0, newElements, 0, index);

System.arraycopy(es, index + 1, newElements, index,

numMoved);

}

setArray(newElements);

return oldValue;

}

}

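3. Use cases

CopyOnWriteArrayList allows reads to proceed while a write is in progress, which greatly improves read performance, so it suits read-mostly workloads.

However, CopyOnWriteArrayList has drawbacks:

- Memory usage: every write copies the array, so memory usage is roughly doubled while the write is in progress.

- Stale data: a read may not see the latest data, because a write that is still in progress has not yet been published to the array.

CopyOnWriteArrayList is therefore a poor fit for memory-sensitive scenarios and for scenarios with strict real-time requirements.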

IV. LinkedList

public class LinkedList<E>

extends AbstractSequentialList<E>

implements List<E>, Deque<E>, Cloneable, java.io.Serializable

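LinkedList mainly implements the List and Deque (double-ended queue) interfaces.

It is a doubly linked list implementation of the List and Deque interfaces. It implements all optional list operations and permits all elements, including null.

All operations perform as could be expected for a doubly linked list. Operations that index into the list traverse it from the beginning or the end, whichever is closer to the specified index.

Note that LinkedList is not synchronized. If multiple threads access it concurrently and at least one of them modifies the list structurally, it must be synchronized externally. Structural modification has the same meaning as described for ArrayList.

A LinkedList can also be wrapped with Collections.synchronizedList(new LinkedList(...)); to make it safe for concurrent access.

LinkedList's iterators are also fail-fast.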
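Because LinkedList implements both interfaces, the same object can be used as a queue, a stack, or an indexed list. A short sketch of the two views follows; the class name is made up, and all calls are standard Deque and List methods.

```java
import java.util.LinkedList;

class LinkedListDequeDemo {
    static String demo() {
        LinkedList<String> list = new LinkedList<>();
        list.addLast("b");
        list.addFirst("a");              // Deque view: insert at the head
        list.addLast("c");               // list is now [a, b, c]

        String head = list.pollFirst();  // "a", constant time at the ends
        String tail = list.pollLast();   // "c"
        String middle = list.get(0);     // List view: index access walks the nodes
        return head + middle + tail;
    }

    public static void main(String[] args) {
        System.out.println(demo()); // prints abc
    }
}
```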

1. Important fields

/**
 * Pointer to first node.
 */
transient Node<E> first;

/**
 * Pointer to last node.
 */
transient Node<E> last;

2. Important methods

LinkedList's methods are fairly simple; they mostly manipulate the doubly linked list.

/**

* Links e as first element.

*/

private void linkFirst(E e) {

final Node<E> f = first;

final Node<E> newNode = new Node<>(null, e, f);

first = newNode;

if (f == null)

last = newNode;

else

f.prev = newNode;

size++;

modCount++;

}

/**

* Links e as last element.

*/

void linkLast(E e) {

final Node<E> l = last;

final Node<E> newNode = new Node<>(l, e, null);

last = newNode;

if (l == null)

first = newNode;

else

l.next = newNode;

size++;

modCount++;

}

/**

* Inserts element e before non-null Node succ.

*/

void linkBefore(E e, Node<E> succ) {

// assert succ != null;

final Node<E> pred = succ.prev;

final Node<E> newNode = new Node<>(pred, e, succ);

succ.prev = newNode;

if (pred == null)

first = newNode;

else

pred.next = newNode;

size++;

modCount++;

}

/**

* Unlinks non-null first node f.

*/

private E unlinkFirst(Node<E> f) {

// assert f == first && f != null;

final E element = f.item;

final Node<E> next = f.next;

f.item = null;

f.next = null; // help GC

first = next;

if (next == null)

last = null;

else

next.prev = null;

size--;

modCount++;

return element;

}

/**

* Unlinks non-null last node l.

*/

private E unlinkLast(Node<E> l) {

// assert l == last && l != null;

final E element = l.item;

final Node<E> prev = l.prev;

l.item = null;

l.prev = null; // help GC

last = prev;

if (prev == null)

first = null;

else

prev.next = null;

size--;

modCount++;

return element;

}

/**

* Unlinks non-null node x.

*/

E unlink(Node<E> x) {

// assert x != null;

final E element = x.item;

final Node<E> next = x.next;

final Node<E> prev = x.prev;

if (prev == null) {

first = next;

} else {

prev.next = next;

x.prev = null;

}

if (next == null) {

last = prev;

} else {

next.prev = prev;

x.next = null;

}

x.item = null;

size--;

modCount++;

return element;

}

/**

* Returns the (non-null) Node at the specified element index.

*/

Node<E> node(int index) {

// assert isElementIndex(index);

if (index < (size >> 1)) {

Node<E> x = first;

for (int i = 0; i < index; i++)

x = x.next;

return x;

} else {

Node<E> x = last;

for (int i = size - 1; i > index; i--)

x = x.prev;

return x;

}

}

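3. LinkedList compared with ArrayList

- ArrayList is backed by a dynamically resized array; LinkedList is backed by a doubly linked list.

- ArrayList supports efficient random access; LinkedList has to traverse nodes to reach an index.

- LinkedList inserts and removes elements faster once the position has been reached, because no elements need to be shifted.

These differences follow from the characteristics of arrays and linked lists.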

V. HashMap

public class HashMap<K,V> extends AbstractMap<K,V>

implements Map<K,V>, Cloneable, Serializable

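HashMap mainly implements the Map interface.

It is a hash table based implementation of the Map interface. HashMap provides all of the optional map operations and permits null values and the null key. The HashMap class is roughly equivalent to Hashtable, except that it is unsynchronized and permits nulls. The class makes no guarantees as to the order of the map; in particular, it does not guarantee that the order will remain constant over time.

Assuming the hash function disperses the elements properly among the buckets, HashMap provides constant-time performance for get() and put(). Iteration over collection views requires time proportional to the capacity of the HashMap (the number of buckets) plus its size (the number of key-value mappings). It is therefore very important not to set the initial capacity too high (or the load factor too low) if iteration performance matters.

Two parameters of a HashMap instance affect its performance:

- initial capacity

- load factor

The capacity is the number of buckets in the hash table, and the initial capacity is simply the capacity at the time the hash table is created.

The load factor is a measure of how full the hash table is allowed to get before its capacity is automatically increased.

When the number of entries in the hash table exceeds the product of the load factor and the current capacity, the hash table is rehashed (that is, its internal data structures are rebuilt) so that it has approximately twice the number of buckets.

As a general rule, the default load factor (0.75) offers a good tradeoff between time and space costs. Higher values decrease the space overhead but increase the lookup cost (reflected in most operations of the HashMap class, including get() and put()). The expected number of entries and the load factor should be taken into account when setting the initial capacity, so as to minimize the number of rehash operations. If the initial capacity is greater than the maximum number of entries divided by the load factor, no rehash operations will ever occur.

If many mappings are to be stored in a HashMap instance, creating it with a sufficiently large capacity allows the mappings to be stored more efficiently than letting it rehash automatically as it grows. Note that using many keys with the same hashCode() is a sure way to slow down any hash table. To ameliorate the impact, when keys are Comparable, this class may use the comparison order among keys to help break ties.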
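The sizing rule above (expected entries divided by load factor) is easy to wrap in a helper. The following is a sketch with hypothetical names, not a JDK API; it assumes the default load factor of 0.75 when it creates the map.

```java
import java.util.HashMap;
import java.util.Map;

class PresizedMapDemo {
    // Smallest initial capacity for which expectedEntries does not
    // exceed capacity * loadFactor, so no rehash is needed while filling.
    static int initialCapacityFor(int expectedEntries, float loadFactor) {
        return (int) Math.ceil(expectedEntries / (double) loadFactor);
    }

    // Creates a HashMap sized for the expected number of entries,
    // relying on the default load factor (0.75).
    static <K, V> Map<K, V> newPresizedMap(int expectedEntries) {
        return new HashMap<>(initialCapacityFor(expectedEntries, 0.75f));
    }

    public static void main(String[] args) {
        System.out.println(initialCapacityFor(100, 0.75f)); // prints 134
        Map<String, Integer> map = newPresizedMap(100);
        for (int i = 0; i < 100; i++) {
            map.put("key" + i, i);
        }
        System.out.println(map.size()); // prints 100
    }
}
```

HashMap itself rounds the requested capacity up to a power of two, so a request for 134 yields a table of 256 buckets.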

Note that HashMap is not synchronized either. Collections.synchronizedMap(new HashMap(...)) returns a wrapper that synchronizes access to it.

1. Constructors

/**

* Constructs an empty {@code HashMap} with the specified initial

* capacity and load factor.

*

* @param initialCapacity the initial capacity

* @param loadFactor the load factor

* @throws IllegalArgumentException if the initial capacity is negative

* or the load factor is nonpositive

*/

public HashMap(int initialCapacity, float loadFactor) {

if (initialCapacity < 0)

throw new IllegalArgumentException("Illegal initial capacity: " +

initialCapacity);

if (initialCapacity > MAXIMUM_CAPACITY)

initialCapacity = MAXIMUM_CAPACITY;

if (loadFactor <= 0 || Float.isNaN(loadFactor))

throw new IllegalArgumentException("Illegal load factor: " +

loadFactor);

this.loadFactor = loadFactor;

this.threshold = tableSizeFor(initialCapacity);

}

/**

* Constructs an empty {@code HashMap} with the specified initial

* capacity and the default load factor (0.75).

*

* @param initialCapacity the initial capacity.

* @throws IllegalArgumentException if the initial capacity is negative.

*/

public HashMap(int initialCapacity) {

this(initialCapacity, DEFAULT_LOAD_FACTOR);

}

/**

* Constructs an empty {@code HashMap} with the default initial capacity

* (16) and the default load factor (0.75).

*/

public HashMap() {

this.loadFactor = DEFAULT_LOAD_FACTOR; // all other fields defaulted

}

/**

* Constructs a new {@code HashMap} with the same mappings as the

* specified {@code Map}. The {@code HashMap} is created with

* default load factor (0.75) and an initial capacity sufficient to

* hold the mappings in the specified {@code Map}.

*

* @param m the map whose mappings are to be placed in this map

* @throws NullPointerException if the specified map is null

*/

public HashMap(Map<? extends K, ? extends V> m) {

this.loadFactor = DEFAULT_LOAD_FACTOR;

putMapEntries(m, false);

}
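The two-argument constructor passes the requested capacity through tableSizeFor(), which rounds it up to a power of two. One way to do that rounding is sketched below; the class and method names are hypothetical, and the bit trick shown is an assumption about how such a helper can be written, not a quotation of the JDK method.

```java
class PowerOfTwoDemo {
    static final int MAXIMUM_CAPACITY = 1 << 30;

    // Smallest power of two that is >= cap (1 for cap <= 1),
    // capped at MAXIMUM_CAPACITY.
    static int roundUpToPowerOfTwo(int cap) {
        // Sets every bit below the highest set bit of (cap - 1).
        int n = -1 >>> Integer.numberOfLeadingZeros(cap - 1);
        return (n < 0) ? 1 : (n >= MAXIMUM_CAPACITY) ? MAXIMUM_CAPACITY : n + 1;
    }

    public static void main(String[] args) {
        for (int cap : new int[] {0, 1, 10, 16, 17}) {
            System.out.println(cap + " -> " + roundUpToPowerOfTwo(cap));
        }
    }
}
```

Subtracting 1 first keeps exact powers of two unchanged: 16 stays 16 instead of becoming 32.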

2. The Node class

/**

* Basic hash bin node, used for most entries. (See below for

* TreeNode subclass, and in LinkedHashMap for its Entry subclass.)

*/

static class Node<K,V> implements Map.Entry<K,V> {

final int hash;

final K key;

V value;

Node<K,V> next;

Node(int hash, K key, V value, Node<K,V> next) {

this.hash = hash;

this.key = key;

this.value = value;

this.next = next;

}

public final K getKey() { return key; }

public final V getValue() { return value; }

public final String toString() { return key + "=" + value; }

public final int hashCode() {

return Objects.hashCode(key) ^ Objects.hashCode(value);

}

public final V setValue(V newValue) {

V oldValue = value;

value = newValue;

return oldValue;

}

public final boolean equals(Object o) {

if (o == this)

return true;

if (o instanceof Map.Entry) {

Map.Entry<?,?> e = (Map.Entry<?,?>)o;

if (Objects.equals(key, e.getKey()) &&

Objects.equals(value, e.getValue()))

return true;

}

return false;

}

}

3. The put operation

Note that since JDK 1.8 a new node is appended to the tail of the bucket's list; earlier versions inserted it at the head.

putVal(...)

/**
 * Implements Map.put and related methods.
 *
 * @param hash hash for key
 * @param key the key
 * @param value the value to put
 * @param onlyIfAbsent if true, don't change existing value
 * @param evict if false, the table is in creation mode.
 * @return previous value, or null if none
 */
final V putVal(int hash, K key, V value, boolean onlyIfAbsent,
               boolean evict) {
    HashMap.Node<K,V>[] tab; HashMap.Node<K,V> p; int n, i;
    // If the table is null or empty, initialize it
    if ((tab = table) == null || (n = tab.length) == 0)
        n = (tab = resize()).length;
    // If the target bucket is empty, place the new node there
    if ((p = tab[i = (n - 1) & hash]) == null)
        tab[i] = newNode(hash, key, value, null);
    // Otherwise the bucket is already occupied
    else {
        HashMap.Node<K,V> e; K k;
        // The first node has the same key: remember it
        if (p.hash == hash &&
            ((k = p.key) == key || (key != null && key.equals(k))))
            e = p;
        // The bucket holds a tree
        else if (p instanceof HashMap.TreeNode)
            e = ((HashMap.TreeNode<K,V>)p).putTreeVal(this, tab, hash, key, value);
        // Otherwise walk the linked list
        else {
            for (int binCount = 0; ; ++binCount) {
                // Reached the end without finding the key:
                // append the new node at the tail of the list
                if ((e = p.next) == null) {
                    p.next = newNode(hash, key, value, null);
                    if (binCount >= TREEIFY_THRESHOLD - 1) // -1 for 1st
                        treeifyBin(tab, hash);
                    break;
                }
                if (e.hash == hash &&
                    ((k = e.key) == key || (key != null && key.equals(k))))
                    break;
                p = e;
            }
        }
        // e is the existing node whose value may be overwritten;
        // it is null if a new node was appended at the tail
        if (e != null) { // existing mapping for key
            V oldValue = e.value;
            if (!onlyIfAbsent || oldValue == null)
                e.value = value;
            afterNodeAccess(e);
            return oldValue;
        }
    }
    ++modCount;
    if (++size > threshold)
        resize();
    afterNodeInsertion(evict);
    return null;
}

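4. Determining the bucket index

Many HashMap operations first need to determine the index of the bucket for a key.

- Computing the hash of the key

The code XORs h with h >>> 16 instead of using key.hashCode() directly because the hash is ultimately ANDed with capacity - 1. When the capacity is small, only a few low bits take part in the AND, so most of the bits from key.hashCode() go unused and collisions in the table become more likely. (h = key.hashCode()) ^ (h >>> 16) replaces the low 16 bits with the XOR of the low and high 16 bits and leaves the high 16 bits unchanged, which reduces collisions.

As for the operators, >> is a signed (arithmetic) right shift and >>> is an unsigned (logical) right shift.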

static final int hash(Object key) {

int h;

return (key == null) ? 0 : (h = key.hashCode()) ^ (h >>> 16);

}
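The effect of the XOR is easiest to see with two hash codes that differ only in their high 16 bits. The sketch below reuses the expression from hash() under a made-up method name, spread(), and compares bucket indexes in a table of 16.

```java
class HashSpreadDemo {
    // Same mixing step as in hash(): fold the high 16 bits into the low 16.
    static int spread(int h) {
        return h ^ (h >>> 16);
    }

    // Bucket index in a table whose length n is a power of two.
    static int index(int hash, int n) {
        return hash & (n - 1);
    }

    public static void main(String[] args) {
        int h1 = 0x10000; // differs from h2 only above bit 15
        int h2 = 0x20000;
        // Without mixing, both land in bucket 0.
        System.out.println(index(h1, 16) + " " + index(h2, 16)); // prints 0 0
        // With mixing, the high bits influence the index.
        System.out.println(index(spread(h1), 16) + " " + index(spread(h2), 16)); // prints 1 2
    }
}
```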

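- Modulo

If x is a power of two, (y % x) equals (y & (x - 1)) for non-negative y.

Let x = 1 << 4, that is, x is 2 to the 4th power:

x       : 00010000

x - 1   : 00001111

ANDing a number y with x - 1 clears every bit of y from bit 4 upward:

y       : 10110010

x - 1   : 00001111

y&(x-1) : 00000010

The result is the same as y modulo x.

A bitwise AND is much cheaper than a modulo operation, so replacing the modulo with an AND improves performance.

The last step in determining the bucket index is to take the key's hash modulo the number of buckets: hash % capacity. If capacity is guaranteed to be a power of two, the modulo can be replaced by a bitwise AND.

The putVal() method in the previous section also computes the bucket index with a bitwise AND.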
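The equivalence can be checked directly, including for the worked example y = 10110010 (178). One detail worth noting in the sketch below: for negative hashes the mask still produces a valid index, whereas Java's % operator returns a negative remainder. The class and method names are illustrative.

```java
class MaskVersusModuloDemo {
    // Index by masking; x must be a power of two.
    static int byMask(int y, int x) {
        return y & (x - 1);
    }

    public static void main(String[] args) {
        System.out.println(178 % 16);               // prints 2
        System.out.println(byMask(178, 16));        // prints 2

        // Negative values: % keeps the sign, the mask does not.
        System.out.println(-7 % 16);                // prints -7
        System.out.println(byMask(-7, 16));         // prints 9
        System.out.println(Math.floorMod(-7, 16));  // prints 9
    }
}
```

Since hashCode() may be negative, the mask is not only faster than % but also avoids an out-of-range index.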

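5. Dynamic resizing

Let the length of HashMap's table be M and the number of key-value pairs to store be N. If the hash function is uniform, each chain holds about N/M entries, so the average lookup cost is O(N/M).

To keep lookups cheap, N/M should be as small as possible, which means M, the table size, should be as large as possible. An overly large M wastes space, however, so time and space costs have to be balanced. HashMap resizes dynamically, adjusting M according to the current N, so that both space efficiency and time efficiency are preserved.

The parameters involved in resizing are capacity, size, threshold, and loadFactor.

| Parameter | Meaning |
| --- | --- |
| capacity | Capacity of the table, 16 by default. The capacity must always be a power of two. |
| size | Number of key-value pairs. |
| threshold | Upper bound for size; a resize is triggered once size exceeds threshold. |
| loadFactor | Load factor, the fraction of the table that may be used; threshold = capacity * loadFactor. |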

static final int DEFAULT_INITIAL_CAPACITY = 1 << 4;

static final int MAXIMUM_CAPACITY = 1 << 30;

static final float DEFAULT_LOAD_FACTOR = 0.75f;

transient Node<K,V>[] table;

transient int size;

int threshold;

final float loadFactor;

Resizing is implemented by the resize() method:

/**

* Initializes or doubles table size. If null, allocates in

* accord with initial capacity target held in field threshold.

* Otherwise, because we are using power-of-two expansion, the

* elements from each bin must either stay at same index, or move

* with a power of two offset in the new table.

*

* @return the table

*/

final HashMap.Node<K,V>[] resize() {

HashMap.Node<K,V>[] oldTab = table;

int oldCap = (oldTab == null) ? 0 : oldTab.length;

int oldThr = threshold;

int newCap, newThr = 0;

// If the old capacity is greater than 0, double it

if (oldCap > 0) {

if (oldCap >= MAXIMUM_CAPACITY) {

threshold = Integer.MAX_VALUE;

return oldTab;

}

else if ((newCap = oldCap << 1) < MAXIMUM_CAPACITY &&

oldCap >= DEFAULT_INITIAL_CAPACITY)

newThr = oldThr << 1; // double threshold

}

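// Old capacity is 0 but the old threshold is not: this is the first initialization, and the new capacity is the old threshold

// Constructors that take an initial capacity end up in this branch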

else if (oldThr > 0) // initial capacity was placed in threshold

newCap = oldThr;

// Old capacity and old threshold are both 0: initialize capacity and threshold with the defaults

// The no-argument HashMap constructor ends up in this branch

else { // zero initial threshold signifies using defaults

newCap = DEFAULT_INITIAL_CAPACITY;

newThr = (int)(DEFAULT_LOAD_FACTOR * DEFAULT_INITIAL_CAPACITY);

}

// newThr is still 0 if the second branch ran (or the doubling branch skipped it): compute it from the new capacity

if (newThr == 0) {

float ft = (float)newCap * loadFactor;

newThr = (newCap < MAXIMUM_CAPACITY && ft < (float)MAXIMUM_CAPACITY ?

(int)ft : Integer.MAX_VALUE);

}

threshold = newThr;

// Allocate the new table with the new capacity

@SuppressWarnings({"rawtypes","unchecked"})

HashMap.Node<K,V>[] newTab = (HashMap.Node<K,V>[])new HashMap.Node[newCap];

table = newTab;

// Walk the old table and move its entries into the new table

if (oldTab != null) {

for (int j = 0; j < oldCap; ++j) {

HashMap.Node<K,V> e;

if ((e = oldTab[j]) != null) {

oldTab[j] = null;

// A bucket with a single node is rehashed directly

if (e.next == null)

newTab[e.hash & (newCap - 1)] = e;

else if (e instanceof HashMap.TreeNode)

((HashMap.TreeNode<K,V>)e).split(this, newTab, j, oldCap);

else { // preserve order

HashMap.Node<K,V> loHead = null, loTail = null;

HashMap.Node<K,V> hiHead = null, hiTail = null;

HashMap.Node<K,V> next;

do {

next = e.next;

// If hash & oldCap is 0, the node keeps its index in the new table

if ((e.hash & oldCap) == 0) {

if (loTail == null)

loHead = e;

else

loTail.next = e;

loTail = e;

}

// Otherwise its index in the new table is the old index + oldCap

else {

if (hiTail == null)

hiHead = e;

else

hiTail.next = e;

hiTail = e;

}

} while ((e = next) != null);

if (loTail != null) {

loTail.next = null;

newTab[j] = loHead;

}

if (hiTail != null) {

hiTail.next = null;

newTab[j + oldCap] = hiHead;

}

}

}

}

}

return newTab;

}

5.1 Resizing: recomputing the bucket index

The logic that recomputes the bucket index is also part of resize():

// If hash & oldCap is 0, the node keeps its index in the new table

if ((e.hash & oldCap) == 0) {

if (loTail == null)

loHead = e;

else

loTail.next = e;

loTail = e;

}

// Otherwise its index in the new table is the old index + oldCap

else {

if (hiTail == null)

hiHead = e;

else

hiTail.next = e;

hiTail = e;

}

if (loTail != null) {
    loTail.next = null;
    newTab[j] = loHead;
}
if (hiTail != null) {
    hiTail.next = null;
    newTab[j + oldCap] = hiHead;
}

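During a resize every key-value pair has to be placed in its bucket in the new table. Because the capacity is always a power of two, HashMap can avoid recomputing each index from scratch: a single bit test decides where a node goes.

Suppose the old table length (capacity) is 16 and the new capacity after resizing is 32: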

capacity : 00010000

new capacity : 00100000

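For a given key:

- if the fifth bit from the right of its hash (the bit with value 16) is 0, the index in the new table is the same as before;

- if that bit is 1, the new index is the old index + 16.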
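The rule can be checked against a direct computation. This is a small sketch under the same assumptions as the example above (old capacity 16, new capacity 32); ResizeSplitDemo and newIndex are illustrative names, not part of HashMap.

```java
final class ResizeSplitDemo {
    // Index after doubling, derived from the old index and one bit test,
    // mirroring the (e.hash & oldCap) == 0 check in resize().
    static int newIndex(int hash, int oldCap) {
        int oldIndex = hash & (oldCap - 1);
        return (hash & oldCap) == 0 ? oldIndex : oldIndex + oldCap;
    }

    public static void main(String[] args) {
        int oldCap = 16;
        int newCap = oldCap << 1;
        // All four hashes share old index 5.
        for (int hash : new int[] {5, 21, 37, 53}) {
            int direct = hash & (newCap - 1); // index computed from scratch
            System.out.println(hash + ": old=" + (hash & (oldCap - 1))
                    + " split=" + newIndex(hash, oldCap)
                    + " direct=" + direct);
        }
        // 5:  old=5 split=5  direct=5
        // 21: old=5 split=21 direct=21
        // 37: old=5 split=5  direct=5
        // 53: old=5 split=21 direct=21
    }
}
```

Every node of an old bucket therefore lands in one of exactly two new buckets, which is why resize() only needs the lo and hi lists.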

6. Computing the table capacity

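HashMap requires the capacity to be a power of two. The constructor still accepts an initial capacity that is not a power of two, because it rounds the requested value up to the next power of two automatically.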

/**

* Returns a power of two size for the given target capacity.

*/

static final int tableSizeFor(int cap) {

int n = cap - 1;

n |= n >>> 1;

n |= n >>> 2;

n |= n >>> 4;

n |= n >>> 8;

n |= n >>> 16;

return (n < 0) ? 1 : (n >= MAXIMUM_CAPACITY) ? MAXIMUM_CAPACITY : n + 1;

}
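To see what the bit smearing does, the method can be run on a few inputs. The sketch below copies the JDK 8 method quoted above into a stand-alone class (TableSizeDemo is an illustrative name) and prints the rounded capacities.

```java
final class TableSizeDemo {
    static final int MAXIMUM_CAPACITY = 1 << 30;

    // Copy of the method quoted above. The shifts propagate the highest
    // set bit of (cap - 1) into every lower position, so n + 1 is a power of two.
    static int tableSizeFor(int cap) {
        int n = cap - 1; // so that an exact power of two maps to itself
        n |= n >>> 1;
        n |= n >>> 2;
        n |= n >>> 4;
        n |= n >>> 8;
        n |= n >>> 16;
        return (n < 0) ? 1 : (n >= MAXIMUM_CAPACITY) ? MAXIMUM_CAPACITY : n + 1;
    }

    public static void main(String[] args) {
        for (int cap : new int[] {0, 1, 10, 16, 17, 1000}) {
            System.out.println(cap + " -> " + tableSizeFor(cap));
        }
        // 0 -> 1, 1 -> 1, 10 -> 16, 16 -> 16, 17 -> 32, 1000 -> 1024
    }
}
```

Without the initial cap - 1, an input of 16 would be rounded up to 32 instead of staying at 16.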


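7. Converting a list to a red-black tree

Since JDK 1.8, when the linked list in a bucket grows beyond 8 nodes (TREEIFY_THRESHOLD), the list is converted to a red-black tree. If the table is still shorter than 64 (MIN_TREEIFY_CAPACITY), treeifyBin() resizes the table instead of building a tree.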

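8. HashMap compared with Hashtable

- Hashtable uses synchronized for thread safety; HashMap is not synchronized.

- HashMap accepts an entry whose key is null; Hashtable does not.

- HashMap's iterators are fail-fast. (The Enumerations returned by Hashtable's keys() and elements() are not.)

- HashMap does not guarantee that the order of its entries stays constant over time.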

VI. ConcurrentHashMap

public class ConcurrentHashMap<K,V> extends AbstractMap<K,V>

implements ConcurrentMap<K,V>, Serializable

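A hash table that supports full concurrency of retrievals and high expected concurrency for updates. ConcurrentHashMap obeys the same functional specification as Hashtable and includes versions of methods corresponding to each method of Hashtable. However, even though all operations are thread-safe, retrieval operations do not entail locking, and there is no support for locking the entire table in a way that prevents all access. ConcurrentHashMap is fully interoperable with Hashtable in programs that rely on its thread safety but not on its synchronization details.

Retrieval operations (including get) generally do not block, so they may overlap with update operations (including put and remove). Retrievals reflect the results of the most recently completed update operations holding at their onset (more formally, an update for a given key happens-before any non-null retrieval for that key that reports the updated value). For aggregate operations such as putAll and clear, concurrent retrievals may reflect the insertion or removal of only some entries. Similarly, iterators, spliterators and enumerations return elements reflecting the state of the hash table at some point at or since their creation. They do not throw ConcurrentModificationException. However, iterators are designed to be used by only one thread at a time. Bear in mind that the results of aggregate status methods (size, isEmpty, containsValue) are typically useful only when the map is not being updated concurrently by other threads. Otherwise they reflect transient states that may be adequate for monitoring or estimation, but not for program control.

The table is expanded dynamically when there are too many collisions (keys that have distinct hash codes but fall into the same slot).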
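The guarantees described above can be observed with a short experiment. This is a sketch only: ConcurrentCountDemo and its methods are hypothetical names, and the thread and iteration counts are arbitrary. It uses merge(), which ConcurrentHashMap performs atomically, and removes entries while iterating to show that no ConcurrentModificationException is thrown.

```java
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;

final class ConcurrentCountDemo {
    // Several threads increment the same counter through merge().
    static int countConcurrently(int threads, int perThread)
            throws InterruptedException {
        Map<String, Integer> counts = new ConcurrentHashMap<>();
        Thread[] workers = new Thread[threads];
        for (int t = 0; t < threads; t++) {
            workers[t] = new Thread(() -> {
                for (int i = 0; i < perThread; i++) {
                    // Atomic read-modify-write on a single key.
                    counts.merge("hits", 1, Integer::sum);
                }
            });
            workers[t].start();
        }
        for (Thread w : workers) {
            w.join();
        }
        return counts.get("hits");
    }

    // Removing entries during iteration is allowed: the iterator is
    // weakly consistent and never throws ConcurrentModificationException.
    static int removeWhileIterating() {
        Map<Integer, String> map = new ConcurrentHashMap<>();
        for (int i = 0; i < 10; i++) {
            map.put(i, "v" + i);
        }
        int seen = 0;
        for (Integer key : map.keySet()) {
            map.remove(key);
            seen++;
        }
        return seen;
    }

    public static void main(String[] args) throws InterruptedException {
        System.out.println(countConcurrently(4, 10_000)); // 40000
        System.out.println(removeWhileIterating());       // 10
    }
}
```

The same loop over a plain HashMap's keySet() would fail with ConcurrentModificationException on the iteration step after the first removal.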

1. put operation

/**

* Maps the specified key to the specified value in this table.

* Neither the key nor the value can be null.

*

* <p>The value can be retrieved by calling the {@code get} method

* with a key that is equal to the original key.

*

* @param key key with which the specified value is to be associated

* @param value value to be associated with the specified key

* @return the previous value associated with {@code key}, or

* {@code null} if there was no mapping for {@code key}

* @throws NullPointerException if the specified key or value is null

*/

public V put(K key, V value) {

return putVal(key, value, false);

}

/** Implementation for put and putIfAbsent */

final V putVal(K key, V value, boolean onlyIfAbsent) {

if (key == null || value == null) throw new NullPointerException();

// Compute the spread hash of the key

int hash = spread(key.hashCode());

// Number of nodes seen in the bin (drives the treeify decision)

int binCount = 0;

for (ConcurrentHashMap.Node<K,V>[] tab = table;;) {

ConcurrentHashMap.Node<K,V> f; int n, i, fh;

// The table has not been initialized yet

if (tab == null || (n = tab.length) == 0)

tab = initTable();

// The target bin is empty

else if ((f = tabAt(tab, i = (n - 1) & hash)) == null) {

// Insert the node with a CAS; if the CAS fails, loop and retry

if (casTabAt(tab, i, null,

new ConcurrentHashMap.Node<K,V>(hash, key, value, null)))

break; // no lock when adding to empty bin

}

// A resize is in progress

else if ((fh = f.hash) == MOVED)

// Help migrate the data

tab = helpTransfer(tab, f);

// The table is initialized, the bin is not empty and no resize is in progress; f is the head node of the bin

else {

V oldVal = null;

// Lock the head node

synchronized (f) {

if (tabAt(tab, i) == f) {

// A non-negative head hash means the bin is a linked list

if (fh >= 0) {

binCount = 1;

for (ConcurrentHashMap.Node<K,V> e = f;; ++binCount) {

K ek;

// An equal key was found: overwrite its value (unless onlyIfAbsent)

if (e.hash == hash &&

((ek = e.key) == key ||

(ek != null && key.equals(ek)))) {

oldVal = e.val;

if (!onlyIfAbsent)

e.val = value;

break;

}

ConcurrentHashMap.Node<K,V> pred = e;

// Reached the end of the list: append the new node at the tail

if ((e = e.next) == null) {

pred.next = new ConcurrentHashMap.Node<K,V>(hash, key,

value, null);

break;

}

}

}

// The bin is a red-black tree

else if (f instanceof ConcurrentHashMap.TreeBin) {

ConcurrentHashMap.Node<K,V> p;

binCount = 2;

if ((p = ((ConcurrentHashMap.TreeBin<K,V>)f).putTreeVal(hash, key,

value)) != null) {

oldVal = p.val;

if (!onlyIfAbsent)

p.val = value;

}

}

}

}

if (binCount != 0) {

// The bin has reached the treeify threshold

if (binCount >= TREEIFY_THRESHOLD)

// treeifyBin does not always build a tree:

// if the table length is below 64 (MIN_TREEIFY_CAPACITY) it resizes the table instead

treeifyBin(tab, i);

if (oldVal != null)

return oldVal;

break;

}

}

}

addCount(1L, binCount);

return null;

}

2.initTable

/**

* Initializes table, using the size recorded in sizeCtl.

*/

private final Node<K,V>[] initTable() {

Node<K,V>[] tab; int sc;

while ((tab = table) == null || tab.length == 0) {

// Another thread is already initializing the table

if ((sc = sizeCtl) < 0)

Thread.yield(); // lost initialization race; just spin

// CAS sizeCtl to -1 to claim the right to initialize

else if (U.compareAndSwapInt(this, SIZECTL, sc, -1)) {

try {

if ((tab = table) == null || tab.length == 0) {

// Allocate the array: length 16 by default, or the initial capacity recorded in sizeCtl

int n = (sc > 0) ? sc : DEFAULT_CAPACITY;

@SuppressWarnings("unchecked")

Node<K,V>[] nt = (Node<K,V>[])new Node<?,?>[n];

// Publish the table

table = tab = nt;

//0.75 * n

sc = n - (n >>> 2);

}

} finally {

// Store sc in sizeCtl as the next resize threshold (12 with the default capacity)

sizeCtl = sc;

}

break;

}

}

return tab;

}
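The pattern in initTable() (spin while another thread holds the -1 marker, otherwise claim it with a CAS and re-check before allocating) can be reproduced with public APIs. The sketch below is an analogy, not the JDK implementation: it substitutes AtomicInteger for the Unsafe-based CAS, and LazyTable, its fields and threshold() are hypothetical names.

```java
import java.util.concurrent.atomic.AtomicInteger;

final class LazyTable {
    private static final int DEFAULT_CAPACITY = 16;

    // 0 or a positive initial capacity before initialization,
    // -1 while one thread initializes, the resize threshold afterwards.
    private final AtomicInteger sizeCtl = new AtomicInteger(0);
    private volatile Object[] table;

    Object[] initTable() {
        Object[] tab;
        while ((tab = table) == null) {
            int sc = sizeCtl.get();
            if (sc < 0) {
                Thread.yield(); // lost the race; wait for the winner
            } else if (sizeCtl.compareAndSet(sc, -1)) {
                try {
                    // Re-check: the table may have been published
                    // between the loop test and the CAS.
                    if ((tab = table) == null) {
                        int n = (sc > 0) ? sc : DEFAULT_CAPACITY;
                        table = tab = new Object[n];
                        sc = n - (n >>> 2); // 0.75 * n
                    }
                } finally {
                    sizeCtl.set(sc); // release the marker
                }
                break;
            }
        }
        return tab;
    }

    int threshold() {
        return sizeCtl.get();
    }

    public static void main(String[] args) throws InterruptedException {
        LazyTable lazy = new LazyTable();
        Thread[] workers = new Thread[4];
        for (int t = 0; t < workers.length; t++) {
            workers[t] = new Thread(lazy::initTable);
            workers[t].start();
        }
        for (Thread w : workers) {
            w.join();
        }
        System.out.println(lazy.initTable().length + " " + lazy.threshold());
    }
}
```

However many threads race, only the one whose CAS succeeds while the table is still null allocates the array; the others either spin or find the table already published.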

3.treeifyBin

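When a list grows past the threshold it is converted to a red-black tree. If the table length has not yet reached MIN_TREEIFY_CAPACITY, the method tries to resize the table instead of treeifying.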

/**

* Replaces all linked nodes in bin at given index unless table is

* too small, in which case resizes instead.

*/

private final void treeifyBin(Node<K,V>[] tab, int index) {

Node<K,V> b; int n, sc;

if (tab != null) {

// The table is shorter than MIN_TREEIFY_CAPACITY: try to resize

if ((n = tab.length) < MIN_TREEIFY_CAPACITY)

tryPresize(n << 1);

// Otherwise convert the list to a red-black tree

else if ((b = tabAt(tab, index)) != null && b.hash >= 0) {

synchronized (b) {

if (tabAt(tab, index) == b) {

TreeNode<K,V> hd = null, tl = null;

for (Node<K,V> e = b; e != null; e = e.next) {

TreeNode<K,V> p =

new TreeNode<K,V>(e.hash, e.key, e.val,

null, null);

if ((p.prev = tl) == null)

hd = p;

else

tl.next = p;

tl = p;

}

setTabAt(tab, index, new TreeBin<K,V>(hd));

}

}

}

}

}

4.tryPresize

Tries to resize the table so that it can hold the given number of elements.

/**

* Tries to presize table to accommodate the given number of elements.

*

* @param size number of elements (doesn't need to be perfectly accurate)

*/

private final void tryPresize(int size) {

int c = (size >= (MAXIMUM_CAPACITY >>> 1)) ? MAXIMUM_CAPACITY :

tableSizeFor(size + (size >>> 1) + 1);

int sc;

// A non-negative sizeCtl holds the initial capacity (before initialization) or the next resize threshold

while ((sc = sizeCtl) >= 0) {

Node<K,V>[] tab = table; int n;

// The table has not been initialized yet: allocate it here, as in initTable()

if (tab == null || (n = tab.length) == 0) {

n = (sc > c) ? sc : c;

if (U.compareAndSwapInt(this, SIZECTL, sc, -1)) {

try {

if (table == tab) {

@SuppressWarnings("unchecked")

Node<K,V>[] nt = (Node<K,V>[])new Node<?,?>[n];

table = nt;

sc = n - (n >>> 2);

}

} finally {

sizeCtl = sc;

}

}

}

// Stop if the requested size is within the current threshold or the table is already at MAXIMUM_CAPACITY

else if (c <= sc || n >= MAXIMUM_CAPACITY)

break;

// Resize and migrate the data

else if (tab == table) {

int rs = resizeStamp(n);

if (sc < 0) {

Node<K,V>[] nt;

if ((sc >>> RESIZE_STAMP_SHIFT) != rs || sc == rs + 1 ||

sc == rs + MAX_RESIZERS || (nt = nextTable) == null ||

transferIndex <= 0)

break;

if (U.compareAndSwapInt(this, SIZECTL, sc, sc + 1))

transfer(tab, nt);

}

else if (U.compareAndSwapInt(this, SIZECTL, sc,

(rs << RESIZE_STAMP_SHIFT) + 2))

// Pass null so that transfer() allocates the new table at twice the current size

transfer(tab, null);

}

}

}

5.transfer

/**

* Moves and/or copies the nodes in each bin to new table. See

* above for explanation.

*/

private final void transfer(Node<K,V>[] tab, Node<K,V>[] nextTab) {

int n = tab.length, stride;

if ((stride = (NCPU > 1) ? (n >>> 3) / NCPU : n) < MIN_TRANSFER_STRIDE)

stride = MIN_TRANSFER_STRIDE; // subdivide range

if (nextTab == null) { // initiating

try {

@SuppressWarnings("unchecked")

Node<K,V>[] nt = (Node<K,V>[])new Node<?,?>[n << 1];

nextTab = nt;

} catch (Throwable ex) { // try to cope with OOME

sizeCtl = Integer.MAX_VALUE;

return;

}

nextTable = nextTab;

transferIndex = n;

}

int nextn = nextTab.length;

ForwardingNode<K,V> fwd = new ForwardingNode<K,V>(nextTab);

boolean advance = true;

boolean finishing = false; // to ensure sweep before committing nextTab

for (int i = 0, bound = 0;;) {

Node<K,V> f; int fh;

while (advance) {

int nextIndex, nextBound;

if (--i >= bound || finishing)

advance = false;

else if ((nextIndex = transferIndex) <= 0) {

i = -1;

advance = false;

}

else if (U.compareAndSwapInt

(this, TRANSFERINDEX, nextIndex,

nextBound = (nextIndex > stride ?

nextIndex - stride : 0))) {

bound = nextBound;

i = nextIndex - 1;

advance = false;

}

}

if (i < 0 || i >= n || i + n >= nextn) {

int sc;

if (finishing) {

nextTable = null;

table = nextTab;

sizeCtl = (n << 1) - (n >>> 1);

return;

}

if (U.compareAndSwapInt(this, SIZECTL, sc = sizeCtl, sc - 1)) {

if ((sc - 2) != resizeStamp(n) << RESIZE_STAMP_SHIFT)

return;

finishing = advance = true;

i = n; // recheck before commit

}

}

else if ((f = tabAt(tab, i)) == null)

advance = casTabAt(tab, i, null, fwd);

else if ((fh = f.hash) == MOVED)

advance = true; // already processed

else {

synchronized (f) {

if (tabAt(tab, i) == f) {

Node<K,V> ln, hn;

if (fh >= 0) {

int runBit = fh & n;

Node<K,V> lastRun = f;

for (Node<K,V> p = f.next; p != null; p = p.next) {

int b = p.hash & n;

if (b != runBit) {

runBit = b;

lastRun = p;

}

}

if (runBit == 0) {

ln = lastRun;

hn = null;

}

else {

hn = lastRun;

ln = null;

}

for (Node<K,V> p = f; p != lastRun; p = p.next) {

int ph = p.hash; K pk = p.key; V pv = p.val;

if ((ph & n) == 0)

ln = new Node<K,V>(ph, pk, pv, ln);

else

hn = new Node<K,V>(ph, pk, pv, hn);

}

setTabAt(nextTab, i, ln);

setTabAt(nextTab, i + n, hn);

setTabAt(tab, i, fwd);

advance = true;

}

else if (f instanceof TreeBin) {

TreeBin<K,V> t = (TreeBin<K,V>)f;

TreeNode<K,V> lo = null, loTail = null;

TreeNode<K,V> hi = null, hiTail = null;

int lc = 0, hc = 0;

for (Node<K,V> e = t.first; e != null; e = e.next) {

int h = e.hash;

TreeNode<K,V> p = new TreeNode<K,V>

(h, e.key, e.val, null, null);

if ((h & n) == 0) {

if ((p.prev = loTail) == null)

lo = p;

else

loTail.next = p;

loTail = p;

++lc;

}

else {

if ((p.prev = hiTail) == null)

hi = p;

else

hiTail.next = p;

hiTail = p;

++hc;

}

}

ln = (lc <= UNTREEIFY_THRESHOLD) ? untreeify(lo) :

(hc != 0) ? new TreeBin<K,V>(lo) : t;

hn = (hc <= UNTREEIFY_THRESHOLD) ? untreeify(hi) :

(lc != 0) ? new TreeBin<K,V>(hi) : t;

setTabAt(nextTab, i, ln);

setTabAt(nextTab, i + n, hn);

setTabAt(tab, i, fwd);

advance = true;

}

}

}

}

}

}

VII. LinkedHashMap

public class LinkedHashMap<K,V>

extends HashMap<K,V>

implements Map<K,V>

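LinkedHashMap extends HashMap and additionally maintains a doubly linked list through its entries, which records either insertion order or LRU (access) order.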

/**

* The head (eldest) of the doubly linked list.

*/

transient LinkedHashMap.Entry<K,V> head;

/**

* The tail (youngest) of the doubly linked list.

*/

transient LinkedHashMap.Entry<K,V> tail;

1. Appending a new node to the list

When a new node is inserted into a LinkedHashMap, linkNodeLast() appends it to the doubly linked list. It is called from newNode().

// link at the end of list

private void linkNodeLast(LinkedHashMap.Entry<K,V> p) {

LinkedHashMap.Entry<K,V> last = tail;

tail = p;

if (last == null)

head = p;

else {

p.before = last;

last.after = p;

}

}

Node<K,V> newNode(int hash, K key, V value, Node<K,V> e) {

LinkedHashMap.Entry<K,V> p =

new LinkedHashMap.Entry<K,V>(hash, key, value, e);

linkNodeLast(p);

return p;

}

2. Removing a node from the list

When a node is removed from a LinkedHashMap, afterNodeRemoval() unlinks it from the doubly linked list.

void afterNodeRemoval(Node<K,V> e) { // unlink

LinkedHashMap.Entry<K,V> p =

(LinkedHashMap.Entry<K,V>)e, b = p.before, a = p.after;

p.before = p.after = null;

if (b == null)

head = a;

else

b.after = a;

if (a == null)

tail = b;

else

a.before = b;

}

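3. accessOrder

accessOrder determines which order the doubly linked list maintains. It defaults to false, which keeps insertion order. When it is true the list keeps access order: whenever a node is accessed, it becomes the youngest node.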

4. Access order

afterNodeAccess()

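When a node is accessed and accessOrder is true, the node is moved to the tail of the list. In other words, in LRU mode every access moves the node to the tail, so the tail is always the most recently used node and the head is the least recently used one.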

void afterNodeAccess(Node<K,V> e) { // move node to last

LinkedHashMap.Entry<K,V> last;

if (accessOrder && (last = tail) != e) {

LinkedHashMap.Entry<K,V> p =

(LinkedHashMap.Entry<K,V>)e, b = p.before, a = p.after;

p.after = null;

if (b == null)

head = a;

else

b.after = a;

if (a != null)

a.before = b;

else

last = b;

if (last == null)

head = p;

else {

p.before = last;

last.after = p;

}

tail = p;

++modCount;

}

}
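The effect of afterNodeAccess() is visible through the public API. A minimal sketch (AccessOrderDemo and keysAfterGet are illustrative names) that builds the same map in both modes and reads one key:

```java
import java.util.LinkedHashMap;
import java.util.Map;

final class AccessOrderDemo {
    // Inserts a, b, c, reads "a", and returns the resulting key order.
    static String keysAfterGet(boolean accessOrder) {
        Map<String, Integer> map = new LinkedHashMap<>(16, 0.75f, accessOrder);
        map.put("a", 1);
        map.put("b", 2);
        map.put("c", 3);
        map.get("a"); // in access-order mode this moves "a" to the tail
        return map.keySet().toString();
    }

    public static void main(String[] args) {
        System.out.println(keysAfterGet(false)); // [a, b, c]
        System.out.println(keysAfterGet(true));  // [b, c, a]
    }
}
```

Because afterNodeAccess() increments modCount, even a get() counts as a structural modification in access-order mode, so calling get() while iterating such a map can trigger a ConcurrentModificationException.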

afterNodeInsertion()

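Runs after put and similar operations. When removeEldestEntry() returns true, the eldest node, i.e. the head of the list (first), is removed.

evict is false only while the map is being constructed; here it is true.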

void afterNodeInsertion(boolean evict) { // possibly remove eldest

LinkedHashMap.Entry<K,V> first;

if (evict && (first = head) != null && removeEldestEntry(first)) {

K key = first.key;

removeNode(hash(key), key, null, false, true);

}

}

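removeEldestEntry() returns false by default. To make it return true, subclass LinkedHashMap and override the method. This is especially useful for an LRU cache: removing the least recently used node keeps the cache within its size limit and ensures that the cached data is the hot data.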

protected boolean removeEldestEntry(Map.Entry<K,V> eldest) {

return false;

}

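5. LRU cache

The following LRU cache is built on LinkedHashMap:

The maximum cache size MAX_ENTRIES is set to 3.

The LinkedHashMap constructor is called with accessOrder set to true, which enables LRU order.

removeEldestEntry() is overridden so that the least recently used entry is removed once the map holds more than MAX_ENTRIES entries.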

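import java.util.LinkedHashMap;
import java.util.Map;

class LRUCache<K, V> extends LinkedHashMap<K, V> {
    private static final int MAX_ENTRIES = 3;

    LRUCache() {
        // The third argument (accessOrder = true) enables LRU order
        super(MAX_ENTRIES, 0.75f, true);
    }

    // Called after every insertion; returning true evicts the eldest entry
    @Override
    protected boolean removeEldestEntry(Map.Entry<K, V> eldest) {
        return size() > MAX_ENTRIES;
    }
}

class LRUCacheDemo {
    public static void main(String[] args) {
        LRUCache<Integer, String> cache = new LRUCache<>();
        cache.put(1, "a");
        cache.put(2, "b");
        cache.put(3, "c");
        cache.get(1);      // 1 becomes the most recently used key
        cache.put(4, "d"); // evicts 2, the least recently used key
        System.out.println(cache.keySet());
    }
}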

[3, 1, 4]

VIII. Hashtable

public class Hashtable<K,V>

extends Dictionary<K,V>

implements Map<K,V>, Cloneable, java.io.Serializable

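Hashtable is thread-safe: its methods are synchronized. Neither keys nor values may be null.

To store and retrieve objects from a Hashtable successfully, the objects used as keys must implement hashCode() and equals().

Internally the entries are stored in an Entry array: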

/**

* The hash table data.

*/

private transient Entry<?,?>[] table;

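Two important parameters: threshold and loadFactor.

1. put operation

As the code shows, Hashtable uses key.hashCode() directly as the key's hash value. If the key already exists its value is replaced; otherwise a new entry is added.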

/**

* Maps the specified <code>key</code> to the specified

* <code>value</code> in this hashtable. Neither the key nor the

* value can be <code>null</code>. <p>

*

* The value can be retrieved by calling the <code>get</code> method

* with a key that is equal to the original key.

*

* @param key the hashtable key

* @param value the value

* @return the previous value of the specified key in this hashtable,

* or <code>null</code> if it did not have one

* @exception NullPointerException if the key or value is

* <code>null</code>

* @see Object#equals(Object)

* @see #get(Object)

*/

public synchronized V put(K key, V value) {

// Make sure the value is not null

if (value == null) {

throw new NullPointerException();

}

// Makes sure the key is not already in the hashtable.

Entry<?,?> tab[] = table;

int hash = key.hashCode();

int index = (hash & 0x7FFFFFFF) % tab.length;

@SuppressWarnings("unchecked")

Entry<K,V> entry = (Entry<K,V>)tab[index];

for(; entry != null ; entry = entry.next) {

if ((entry.hash == hash) && entry.key.equals(key)) {

V old = entry.value;

entry.value = value;

return old;

}

}

addEntry(hash, key, value, index);

return null;

}

private void addEntry(int hash, K key, V value, int index) {

modCount++;

Entry<?,?> tab[] = table;

if (count >= threshold) {

// Rehash the table if the threshold is exceeded

rehash();

tab = table;

hash = key.hashCode();

index = (hash & 0x7FFFFFFF) % tab.length;

}

// Creates the new entry.

@SuppressWarnings("unchecked")

Entry<K,V> e = (Entry<K,V>) tab[index];

tab[index] = new Entry<>(hash, key, value, e);

count++;

}

rehash() grows the capacity to twice the old capacity plus 1:

protected void rehash() {

int oldCapacity = table.length;

Entry<?,?>[] oldMap = table;

// overflow-conscious code

int newCapacity = (oldCapacity << 1) + 1;

if (newCapacity - MAX_ARRAY_SIZE > 0) {

if (oldCapacity == MAX_ARRAY_SIZE)

// Keep running with MAX_ARRAY_SIZE buckets

return;

newCapacity = MAX_ARRAY_SIZE;

}

Entry<?,?>[] newMap = new Entry<?,?>[newCapacity];

modCount++;

threshold = (int)Math.min(newCapacity * loadFactor, MAX_ARRAY_SIZE + 1);

table = newMap;

for (int i = oldCapacity ; i-- > 0 ;) {

for (Entry<K,V> old = (Entry<K,V>)oldMap[i] ; old != null ; ) {

Entry<K,V> e = old;

old = old.next;

int index = (e.hash & 0x7FFFFFFF) % newCapacity;

e.next = (Entry<K,V>)newMap[index];

newMap[index] = e;

}

}

}
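Two details of the Hashtable code above are easy to check by hand: the index formula, which clears the sign bit before taking the modulus, and the 2n + 1 growth rule. The sketch below isolates both. HashtableIndexDemo and its methods are illustrative names, and the overflow handling around MAX_ARRAY_SIZE is deliberately left out.

```java
final class HashtableIndexDemo {
    // Same expression as in put(), get() and remove(): clearing the sign
    // bit keeps the index non-negative even for negative hash codes.
    static int indexFor(int hash, int tableLength) {
        return (hash & 0x7FFFFFFF) % tableLength;
    }

    // Growth rule used by rehash(), without the MAX_ARRAY_SIZE checks.
    static int nextCapacity(int oldCapacity) {
        return (oldCapacity << 1) + 1;
    }

    public static void main(String[] args) {
        // A plain % on a negative hash would produce a negative index.
        System.out.println(-7 % 11);
        System.out.println(indexFor(-7, 11));

        // Starting from Hashtable's default capacity of 11.
        int capacity = 11;
        for (int i = 0; i < 4; i++) {
            System.out.print(capacity + " ");
            capacity = nextCapacity(capacity);
        }
        System.out.println(); // 11 23 47 95
    }
}
```

Since the capacity is not a power of two, Hashtable cannot replace % with a bit mask the way HashMap does.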

2. remove operation

public synchronized V remove(Object key) {

Entry<?,?> tab[] = table;

int hash = key.hashCode();

int index = (hash & 0x7FFFFFFF) % tab.length;

@SuppressWarnings("unchecked")

Entry<K,V> e = (Entry<K,V>)tab[index];

for(Entry<K,V> prev = null ; e != null ; prev = e, e = e.next) {

if ((e.hash == hash) && e.key.equals(key)) {

modCount++;

if (prev != null) {

prev.next = e.next;

} else {

tab[index] = e.next;

}

count--;

V oldValue = e.value;

e.value = null;

return oldValue;

}

}

return null;

}

3. get operation

public synchronized V get(Object key) {

Entry<?,?> tab[] = table;

int hash = key.hashCode();

int index = (hash & 0x7FFFFFFF) % tab.length;

for (Entry<?,?> e = tab[index] ; e != null ; e = e.next) {

if ((e.hash == hash) && e.key.equals(key)) {

return (V)e.value;

}

}

return null;

}

IX. TreeMap

public class TreeMap<K,V>

extends AbstractMap<K,V>

implements NavigableMap<K,V>, Cloneable, java.io.Serializable

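A NavigableMap implementation based on a red-black tree. The map is sorted according to the natural ordering (Comparable) of its keys, or by a Comparator provided at creation time, depending on which constructor is used.

Note that the ordering maintained by a TreeMap, like that of any sorted map and whether or not an explicit comparator is provided, must be consistent with equals() if the map is to implement the Map interface correctly.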
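What "consistent with equals" means in practice is easiest to see with a comparator that is not. The sketch below (TreeMapOrderingDemo is an illustrative name) uses String.CASE_INSENSITIVE_ORDER, under which "Key" and "KEY" compare as equal although equals() says they differ.

```java
import java.util.Map;
import java.util.TreeMap;

final class TreeMapOrderingDemo {
    // Comparator treats "Key" and "KEY" as the same key.
    static Map<String, Integer> caseInsensitive() {
        Map<String, Integer> map = new TreeMap<>(String.CASE_INSENSITIVE_ORDER);
        map.put("Key", 1);
        map.put("KEY", 2); // compare() returns 0: only the value is replaced
        return map;
    }

    // Natural ordering of String is consistent with equals().
    static Map<String, Integer> naturalOrder() {
        Map<String, Integer> map = new TreeMap<>();
        map.put("Key", 1);
        map.put("KEY", 2);
        return map;
    }

    public static void main(String[] args) {
        System.out.println(caseInsensitive()); // {Key=2}
        System.out.println(naturalOrder());    // {KEY=2, Key=1}
    }
}
```

The case-insensitive map is still well defined, but it no longer obeys the general Map contract, which is specified in terms of equals().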

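1. put operation

Starting at the root, the key is compared with each node: if it is greater the search continues in the right subtree, if it is smaller in the left subtree, and if it is equal the existing value is replaced. A key that is not found is inserted as a new leaf, after which fixAfterInsertion() rebalances the tree.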

public V put(K key, V value) {

Entry<K,V> t = root;

if (t == null) {

compare(key, key); // type (and possibly null) check

root = new Entry<>(key, value, null);

size = 1;

modCount++;

return null;

}

int cmp;

Entry<K,V> parent;

// split comparator and comparable paths

Comparator<? super K> cpr = comparator;

if (cpr != null) {

do {

parent = t;

cmp = cpr.compare(key, t.key);

if (cmp < 0)

t = t.left;

else if (cmp > 0)

t = t.right;

else

return t.setValue(value);

} while (t != null);

}

else {

if (key == null)

throw new NullPointerException();

@SuppressWarnings("unchecked")

Comparable<? super K> k = (Comparable<? super K>) key;

do {

parent = t;

cmp = k.compareTo(t.key);

if (cmp < 0)

t = t.left;

else if (cmp > 0)

t = t.right;

else

return t.setValue(value);

} while (t != null);

}

Entry<K,V> e = new Entry<>(key, value, parent);

if (cmp < 0)

parent.left = e;

else

parent.right = e;

fixAfterInsertion(e);

size++;

modCount++;

return null;

}

2. get operation

Lookup follows the same principle as put:

final Entry<K,V> getEntry(Object key) {

// Offload comparator-based version for sake of performance

if (comparator != null)

return getEntryUsingComparator(key);

if (key == null)

throw new NullPointerException();

@SuppressWarnings("unchecked")

Comparable<? super K> k = (Comparable<? super K>) key;

Entry<K,V> p = root;

while (p != null) {

int cmp = k.compareTo(p.key);

if (cmp < 0)

p = p.left;

else if (cmp > 0)

p = p.right;

else

return p;

}

return null;

}

3. getFloorEntry

Returns the entry with the greatest key less than or equal to the given key, or null if there is no such key.

final Entry<K,V> getFloorEntry(K key) {

Entry<K,V> p = root;

while (p != null) {

int cmp = compare(key, p.key);

if (cmp > 0) {

if (p.right != null)

p = p.right;

else

return p;

} else if (cmp < 0) {

if (p.left != null) {

p = p.left;

} else {

Entry<K,V> parent = p.parent;

Entry<K,V> ch = p;

while (parent != null && ch == parent.left) {

ch = parent;

parent = parent.parent;

}

return parent;

}

} else

return p;

}

return null;

}
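getFloorEntry() backs the public floorKey() and floorEntry() methods, so its behavior can be checked from outside. A short sketch (FloorDemo and sample() are illustrative names) that also contrasts floor with lowerKey(), which is strictly less than the given key:

```java
import java.util.TreeMap;

final class FloorDemo {
    static TreeMap<Integer, String> sample() {
        TreeMap<Integer, String> map = new TreeMap<>();
        map.put(10, "ten");
        map.put(20, "twenty");
        map.put(30, "thirty");
        return map;
    }

    public static void main(String[] args) {
        TreeMap<Integer, String> map = sample();
        System.out.println(map.floorKey(20)); // 20: an exact match counts
        System.out.println(map.floorKey(25)); // 20: greatest key <= 25
        System.out.println(map.lowerKey(20)); // 10: strictly less than 20
        System.out.println(map.floorKey(5));  // null: no key <= 5
    }
}
```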

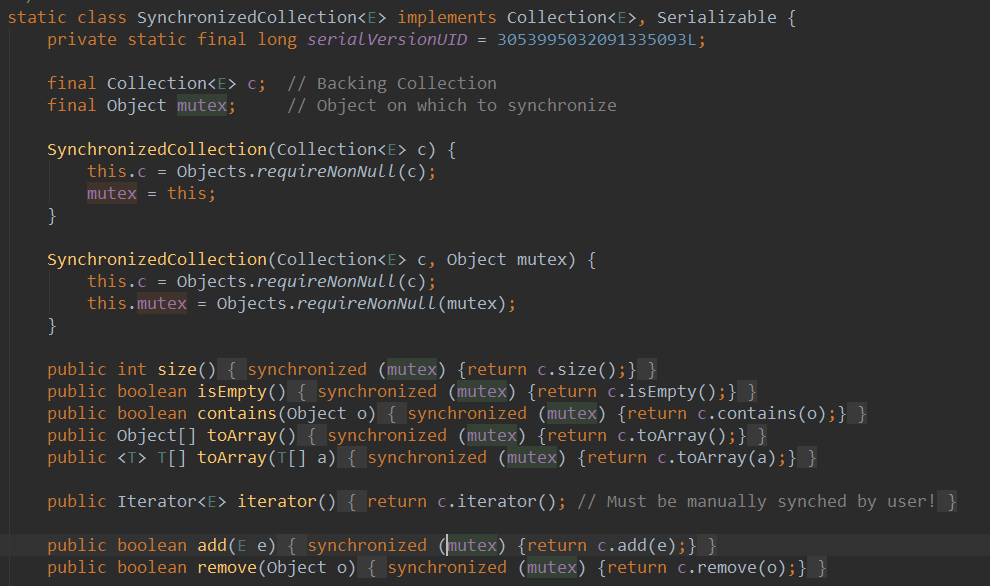

Collections.synchronizedList()

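How it works: Collections.synchronizedList() wraps a list that is not thread-safe in another list whose methods synchronize internally on a single Object (the mutex), which makes each individual call thread-safe.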
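A usage sketch follows; SynchronizedListDemo and its methods are hypothetical names, and the thread counts are arbitrary. Individual calls such as add() are synchronized by the wrapper, but the Collections documentation requires the caller to hold the list's lock manually while iterating, which is what sum() does.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;

final class SynchronizedListDemo {
    // Several threads append to the same wrapped list.
    static List<Integer> fillConcurrently(int threads, int perThread)
            throws InterruptedException {
        List<Integer> list = Collections.synchronizedList(new ArrayList<>());
        Thread[] workers = new Thread[threads];
        for (int t = 0; t < threads; t++) {
            workers[t] = new Thread(() -> {
                for (int i = 0; i < perThread; i++) {
                    list.add(i); // each call locks the wrapper's mutex
                }
            });
            workers[t].start();
        }
        for (Thread w : workers) {
            w.join();
        }
        return list;
    }

    // Iteration spans many calls, so the caller must hold the lock itself.
    static long sum(List<Integer> list) {
        long total = 0;
        synchronized (list) {
            for (int value : list) {
                total += value;
            }
        }
        return total;
    }

    public static void main(String[] args) throws InterruptedException {
        List<Integer> list = fillConcurrently(4, 1000);
        System.out.println(list.size()); // 4000
        System.out.println(sum(list));   // 1998000
    }
}
```

With a bare ArrayList in place of the wrapper, the same run may lose elements or throw ArrayIndexOutOfBoundsException during a concurrent resize.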

References: